When the topic of artificial intelligence comes up today in boardrooms and at industry conferences, one short term is heard more and more often – RAG. It is no longer just a technical acronym, but a concept that is beginning to reshape how companies think about AI-powered tools.

Understanding what RAG really is has become a necessity for business leaders, because it determines whether newly implemented software will serve as a precise and up-to-date tool or just another trendy gadget with little value to the organization. In this guide, we explain what Retrieval-Augmented Generation actually is, how it works in practice, and why it matters for business. We also show how RAG improves the accuracy of AI-generated answers by letting these systems draw on current, context-specific information.

1. Understanding RAG: The Technology Transforming Business Intelligence

1.1 What is RAG (Retrieval-Augmented Generation)?

RAG technology tackles one of the biggest headaches facing modern businesses: how do you make AI systems work with current, accurate, and company-specific information? Traditional AI models only know what they learned during training, but RAG does something different. It combines powerful language models with the ability to pull information from external databases, documents, and knowledge repositories in real time.

Here’s the definition of RAG in simple terms: it’s retrieval and generation working as a team. When someone asks a question, the system first searches relevant data sources for useful information, then uses that content to compose its response. This helps keep AI outputs current, factually grounded, and tailored to specific business situations, instead of generic or outdated.
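To make this teamwork concrete, here is a deliberately tiny Python sketch. It assumes a hypothetical two-document knowledge base, uses simple word overlap in place of real vector search, and replaces the language model with a hypothetical `generate` stub. It illustrates the retrieve-then-generate flow only; it is not a production implementation.

```python
import re

# Hypothetical two-document knowledge base; real deployments index company documents.
DOCUMENTS = {
    "policy-7": "Refunds are accepted within 30 days of purchase with a receipt.",
    "policy-9": "Shipping to EU countries takes 3 to 5 business days.",
}


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, k: int = 1) -> list[tuple[str, str]]:
    """Retrieval step: rank documents by word overlap (a stand-in for vector search)."""
    q = tokenize(question)
    ranked = sorted(
        DOCUMENTS.items(),
        key=lambda item: len(q & tokenize(item[1])),
        reverse=True,
    )
    return ranked[:k]


def generate(question: str, context: list[tuple[str, str]]) -> str:
    """Generation step: hypothetical stub for an LLM call that just quotes the top source."""
    doc_id, text = context[0]
    return f"{text} [source: {doc_id}]"


def answer(question: str) -> str:
    """Run retrieval first, then hand the retrieved context to the generator."""
    return generate(question, retrieve(question))


print(answer("Within how many days are refunds accepted?"))
```

In a real system, `retrieve` would query a vector index and `generate` would send an augmented prompt to a language model, but the division of labor stays the same.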

What makes RAG particularly valuable is how it handles proprietary data. Companies can connect their internal documents, customer databases, product catalogs, and operational manuals to the system’s retrieval layer. Employees and customers then get responses that reflect the latest company policies, product specs, and procedural updates, without the underlying AI model having to be retrained.

1.2 RAG vs Traditional AI: Key Differences

Traditional AI systems work like a closed-book exam. They generate responses based only on what they learned during their initial training phase. This creates real problems for business applications, especially when you’re dealing with rapidly changing information, industry-specific knowledge, or proprietary company data that wasn’t part of the original training data.

RAG systems built on large language models (LLMs) work differently: they stay connected to external information sources. While a standard language model might give you generic advice about customer service best practices, a RAG-powered system can access your company’s actual customer service protocols, recent policy changes, and current product information to provide guidance that matches your organization’s real procedures.

The difference in how they’re built is fundamental. Traditional generative AI works as a closed system, processing inputs through pre-trained parameters to produce outputs. RAG systems add extra components like retrievers, vector databases, and integration layers that enable continuous access to evolving information. This setup also supports transparency through source attribution, so users can see exactly where information came from and verify its accuracy.

2. Why RAG Technology Matters for Modern Businesses

2.1 Current Business Challenges RAG Solves

Many companies still struggle with information silos: different departments maintain their own databases and systems, making it difficult to use information effectively across the entire organization. RAG technology doesn’t dismantle silos, but it provides a way to navigate them efficiently. Through real-time retrieval and generation, AI can pull data from multiple sources (databases, documents, or knowledge repositories) and merge it into coherent, context-rich responses. As a result, users receive up-to-date, fact-based information without having to search manually through scattered systems or rely on costly retraining of AI models.

Another challenge is keeping AI systems current. Traditionally, this has required expensive and time-consuming retraining cycles whenever business conditions, regulations, or procedures change. RAG works differently: it draws on live data from connected sources, so AI responses can reflect the latest information in those sources without any change to the underlying model.

The technology also strengthens quality control. Every response generated by the system can be grounded in specific, verifiable sources. This is especially critical in regulated industries, where accuracy, compliance, and full transparency are essential.

3. How RAG Works: A Business-Focused Breakdown

3.1 The Four-Step RAG Process

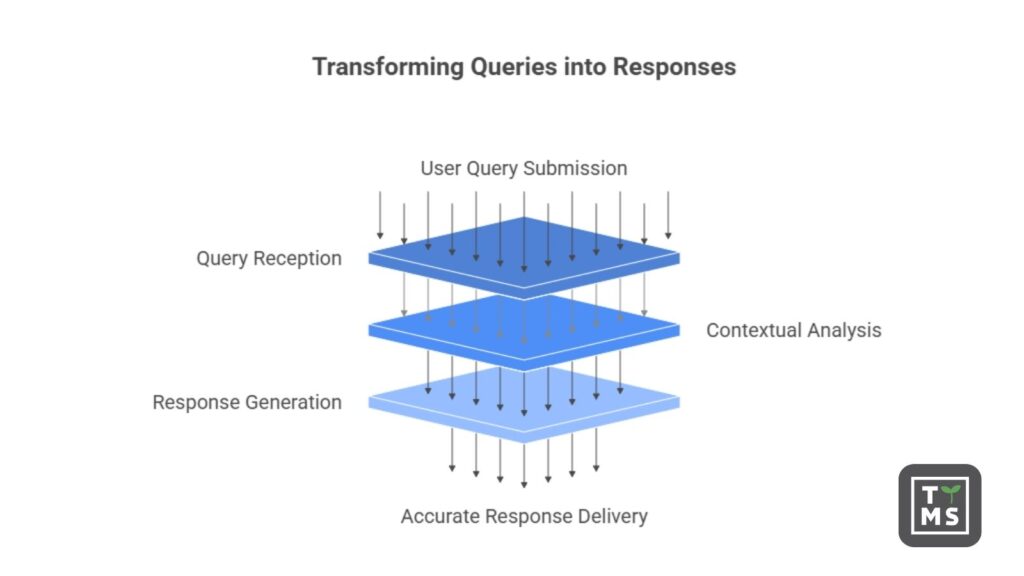

Understanding how RAG works requires examining the systematic process that turns user queries into accurate, contextually relevant responses. This process begins when users submit questions or requests through business applications, customer service interfaces, or internal knowledge management systems.

3.1.1 Data Retrieval and Indexing

The foundation of effective RAG implementation lies in comprehensive data preparation and indexing strategies. Organizations must first identify and catalog every information source the RAG system should be able to access, including structured databases, unstructured documents, multimedia content, and external data feeds.

Information from these diverse sources undergoes preprocessing to ensure consistency, accuracy, and searchability. This preparation includes converting documents into machine-readable formats, extracting key information elements, and creating vector representations that enable semantic search capabilities. The resulting indexed information becomes immediately available for retrieval without requiring modifications to the underlying AI model.
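One common preprocessing step is splitting long documents into smaller, overlapping chunks that are later embedded and indexed individually. The Python sketch below assumes simple word-based windows; the default sizes (200 words with a 40-word overlap) and the record fields are illustrative choices, not a standard. Real pipelines often chunk by tokens, sentences, or document headings, and the embedding step that follows is omitted here.

```python
def chunk_words(text: str, size: int = 200, overlap: int = 40) -> list[str]:
    """Split text into windows of `size` words; consecutive windows share `overlap` words."""
    if size <= 0 or not 0 <= overlap < size:
        raise ValueError("need size > 0 and 0 <= overlap < size")
    words = text.split()
    step = size - overlap
    chunks: list[str] = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):  # this window already reached the end of the text
            break
    return chunks


def build_records(doc_id: str, text: str, size: int = 200, overlap: int = 40) -> list[dict]:
    """Attach the source document ID and chunk position so answers can cite them later."""
    return [
        {"doc_id": doc_id, "chunk_no": i, "text": chunk}
        for i, chunk in enumerate(chunk_words(text, size, overlap))
    ]


sample = "one two three four five six seven eight nine ten"
for record in build_records("manual-1", sample, size=4, overlap=1):
    print(record)
```

The overlap keeps a sentence that straddles a chunk boundary visible in both neighboring chunks, at the cost of storing some text twice.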

Modern indexing approaches use advanced embedding techniques that capture semantic meaning and contextual relationships within business information. This capability enables the system to identify relevant content even when user queries don’t exactly match the terminology used in source documents, improving the breadth and accuracy of information retrieval.

3.1.2 Query Processing and Matching

When users submit queries, the system transforms their natural language requests into vector representations that can be compared against the indexed information repository. This transformation process captures semantic similarity and contextual relationships, rather than relying solely on keyword matching techniques. While embeddings allow the system to reflect user intent more effectively than keywords, it is important to note that this is a mathematical approximation of meaning, not human-level understanding.

Matching algorithms, typically based on a similarity measure such as cosine similarity, compare query vectors with indexed content vectors to identify the most relevant information sources. The system may retrieve several relevant documents or data segments to cover the user’s information needs while staying focused on the most pertinent content.
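The matching step can be illustrated with cosine similarity, one common choice of similarity measure, followed by selecting the top k results. In this minimal Python sketch, the three-dimensional vectors and chunk IDs are made up for illustration; real embeddings come from an embedding model and usually have hundreds or thousands of dimensions, and vector databases typically replace this brute-force loop with approximate nearest-neighbor search.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity: dot product divided by the product of the vector lengths."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query_vec: list[float], index: dict[str, list[float]], k: int = 2) -> list[tuple[str, float]]:
    """Score every indexed chunk against the query and return the k best (id, score) pairs."""
    scored = [(chunk_id, cosine(query_vec, vec)) for chunk_id, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Toy vectors standing in for real embeddings.
index = {
    "refund-policy": [0.9, 0.1, 0.0],
    "shipping-times": [0.1, 0.9, 0.1],
    "warranty-terms": [0.7, 0.0, 0.3],
}
print(top_k([1.0, 0.0, 0.1], index))
```

Retrieving more than one chunk (here k = 2) is what lets the system cover a question from several angles while still discarding clearly unrelated content such as the shipping chunk.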

Query processing can also incorporate business context and user permissions, but this depends on how the system is implemented. In enterprise environments, such mechanisms are often necessary to ensure that retrieved information complies with security policies and access controls, where different users have access to different categories of sensitive or restricted information.
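A simple way to picture permission-aware retrieval is a filter that drops any chunk the user may not see before it reaches the prompt. The sketch below assumes each chunk carries hypothetical `allowed_roles` metadata; the `Chunk` class and role names are illustrative. Filtering after retrieval, as shown, is the easiest to read, but it can leave fewer than k results, which is why many vector databases let you apply such metadata filters inside the search itself.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Chunk:
    chunk_id: str
    text: str
    allowed_roles: frozenset[str]  # hypothetical metadata: roles allowed to read this chunk


def filter_by_permissions(candidates: list[Chunk], user_roles: set[str]) -> list[Chunk]:
    """Keep only chunks that share at least one role with the user, before prompt assembly."""
    return [chunk for chunk in candidates if chunk.allowed_roles & user_roles]


candidates = [
    Chunk("hr-salaries", "Salary bands for all positions.", frozenset({"hr"})),
    Chunk("it-vpn", "How to connect to the company VPN.", frozenset({"employee", "hr"})),
]
print([chunk.chunk_id for chunk in filter_by_permissions(candidates, {"employee"})])
```

Because the filter runs before generation, restricted text never enters the model’s context, so it cannot leak into the answer.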

3.1.3 Content Augmentation

Retrieved information is combined with the original user query to create an augmented prompt that gives the AI model richer context for generating its response. The prompt is structured so that retrieved data stands out and the model is encouraged to give it precedence over its internal training knowledge, although the final output still depends on how the model balances both sources.

Prompt engineering techniques guide the AI system in using external information effectively, for example by instructing it to prioritize retrieved documents, resolve potential conflicts between sources, format outputs in specific ways, or maintain an appropriate tone for business communication.

The quality of this augmentation step directly affects the accuracy and relevance of responses. Well-designed strategies find the right balance between including enough supporting data and focusing the model’s attention on the most important elements, ensuring that generated outputs remain both precise and contextually appropriate.
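The balance described above can be sketched as two steps: choose the highest-scoring passages that fit a size budget, then wrap them in instructions together with the question. This Python example is one possible design under stated assumptions: it counts words as a rough proxy for tokens (real systems count tokens with the model’s tokenizer), and the instruction wording, source IDs, and scores are illustrative.

```python
def select_passages(passages: list[tuple[str, str, float]], max_words: int) -> list[tuple[str, str]]:
    """Keep the highest-scoring passages that still fit within a rough word budget."""
    selected: list[tuple[str, str]] = []
    used = 0
    for source_id, text, _score in sorted(passages, key=lambda p: p[2], reverse=True):
        length = len(text.split())
        if used + length <= max_words:
            selected.append((source_id, text))
            used += length
    return selected


def build_prompt(question: str, passages: list[tuple[str, str, float]], max_words: int = 300) -> str:
    """Combine instructions, the selected sources, and the user's question into one prompt."""
    context = "\n".join(f"[{sid}] {text}" for sid, text in select_passages(passages, max_words))
    return (
        "Answer using only the sources below and cite source IDs in square brackets.\n"
        "If the sources conflict, point out the conflict. "
        "If they do not contain the answer, say you do not know.\n\n"
        f"Sources:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


retrieved = [
    ("policy-7", "Refunds are accepted within 30 days of purchase.", 0.91),
    ("faq-12", "Store credit is offered when no receipt is available.", 0.74),
    ("blog-3", "Our history of customer service goes back to 1998 and includes many awards.", 0.32),
]
print(build_prompt("What is the refund window?", retrieved, max_words=20))
```

With a 20-word budget, the two most relevant passages fit and the low-scoring blog passage is left out, which keeps the model focused on the material that actually answers the question.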

3.1.4 Response Generation

The AI model synthesizes information from the augmented prompt to generate comprehensive responses that address user queries while incorporating relevant business data. This process maintains natural language flow and encourages inclusion of retrieved content, though the level of completeness depends on how effectively the system structures and prioritizes input information.

In enterprise RAG implementations, additional quality control mechanisms can be applied to improve accuracy and reliability. These may involve cross-checking outputs against retrieved documents, verifying consistency, or optimizing format and tone to meet professional communication standards. Such safeguards are not intrinsic to the language model itself but are built into the overall RAG workflow.

Final responses frequently include source citations or references, enabling users to verify accuracy and explore supporting details. This transparency strengthens trust in AI-generated outputs while supporting compliance, audit requirements, and quality assurance processes.
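One lightweight workflow-level safeguard is to check that every source an answer cites was actually retrieved for that question. The Python sketch below assumes citations appear as IDs in square brackets (matching a prompt that asks for that format); the IDs are hypothetical. It only verifies that cited IDs exist in the retrieved set, not that the cited text really supports the claim, so it complements rather than replaces deeper fact-checking.

```python
import re


def check_citations(answer: str, retrieved_ids: set[str]) -> dict[str, list[str]]:
    """Split the source IDs cited in an answer into retrieved ('valid') and unknown ones."""
    cited = re.findall(r"\[([^\[\]]+)\]", answer)
    return {
        "valid": [c for c in cited if c in retrieved_ids],
        "unknown": [c for c in cited if c not in retrieved_ids],
    }


result = check_citations(
    "Refunds are accepted within 30 days [policy-7]. Express delivery is free [promo-2].",
    {"policy-7", "policy-9"},
)
print(result)
```

An answer with unknown citations, or with no citations at all, could be routed to a retry with stricter instructions or flagged for human review.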

3.2 RAG Architecture Components

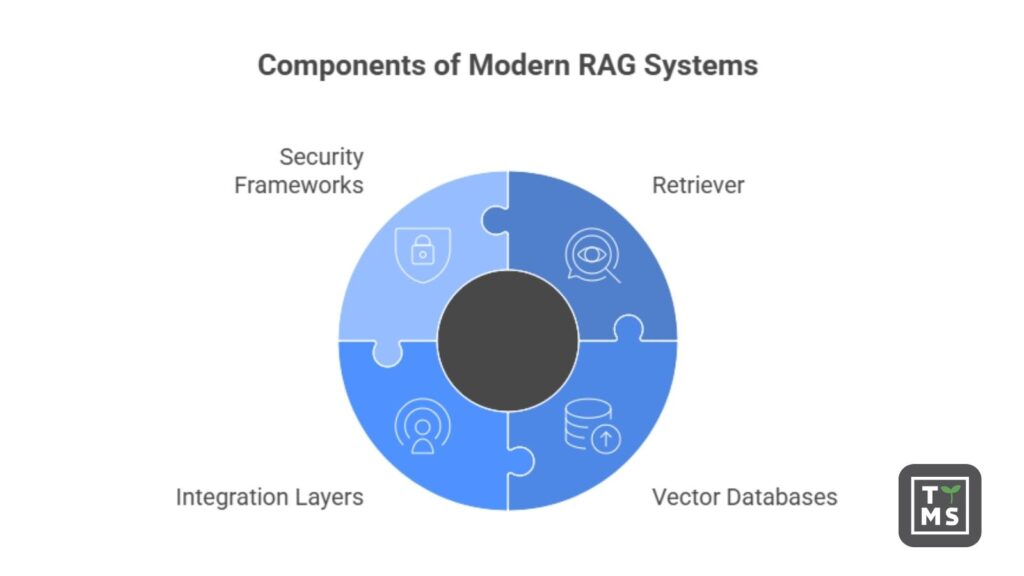

Modern RAG systems combine several core components to deliver reliable, accurate, and scalable business intelligence. The retriever identifies the most relevant fragments of information from indexed sources using semantic search and similarity matching, and the generator (a large language model) turns those fragments and the user’s question into the final answer.

Vector databases act as the storage and retrieval backbone, enabling fast similarity searches across large volumes of mostly unstructured content; structured data is often converted into text for processing. They are designed to scale to large collections while keeping query latency low, typically by using approximate nearest-neighbor (ANN) indexes.
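To show why updating knowledge does not require retraining, here is a minimal in-memory vector store keyed by document ID, where an "upsert" simply replaces a document’s stored vector. This is a sketch under loud assumptions: the hypothetical `embed` function hashes words into buckets and captures no real meaning (production systems call an embedding model), it uses `zlib.crc32` because Python’s built-in string `hash()` changes between runs, and search is brute force rather than an ANN index.

```python
import math
import re
import zlib

DIM = 64  # toy dimensionality; real embedding models use far more dimensions


def embed(text: str) -> list[float]:
    """Hypothetical stand-in for an embedding model: hashed word counts, unit-normalized."""
    vec = [0.0] * DIM
    for word in re.findall(r"[a-z0-9]+", text.lower()):
        vec[zlib.crc32(word.encode()) % DIM] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec


class InMemoryVectorStore:
    """Brute-force store keyed by document ID (real vector databases use ANN indexes)."""

    def __init__(self) -> None:
        self._items: dict[str, tuple[list[float], str]] = {}

    def __len__(self) -> int:
        return len(self._items)

    def upsert(self, doc_id: str, text: str) -> None:
        """Insert or replace a document; the language model itself is never retrained."""
        self._items[doc_id] = (embed(text), text)

    def search(self, query: str, k: int = 1) -> list[tuple[str, str]]:
        """Rank stored documents by dot product, which equals cosine for unit vectors."""
        q = embed(query)
        scored = sorted(
            self._items.items(),
            key=lambda item: sum(a * b for a, b in zip(q, item[1][0])),
            reverse=True,
        )
        return [(doc_id, text) for doc_id, (_, text) in scored[:k]]


store = InMemoryVectorStore()
store.upsert("refund-policy", "Refunds are accepted within 14 days.")
store.upsert("refund-policy", "Refunds are accepted within 30 days.")  # policy update
print(store.search("refund window days"))
```

The second `upsert` overwrites the old policy text, so the next search returns only the 30-day version: the knowledge changed, the model did not.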

Integration layers connect RAG with existing business applications through APIs, platform connectors, and middleware, ensuring that it operates smoothly within current workflows. Security frameworks and access controls are also built into these layers to maintain data protection and compliance standards.

3.3 Integration with Existing Business Systems

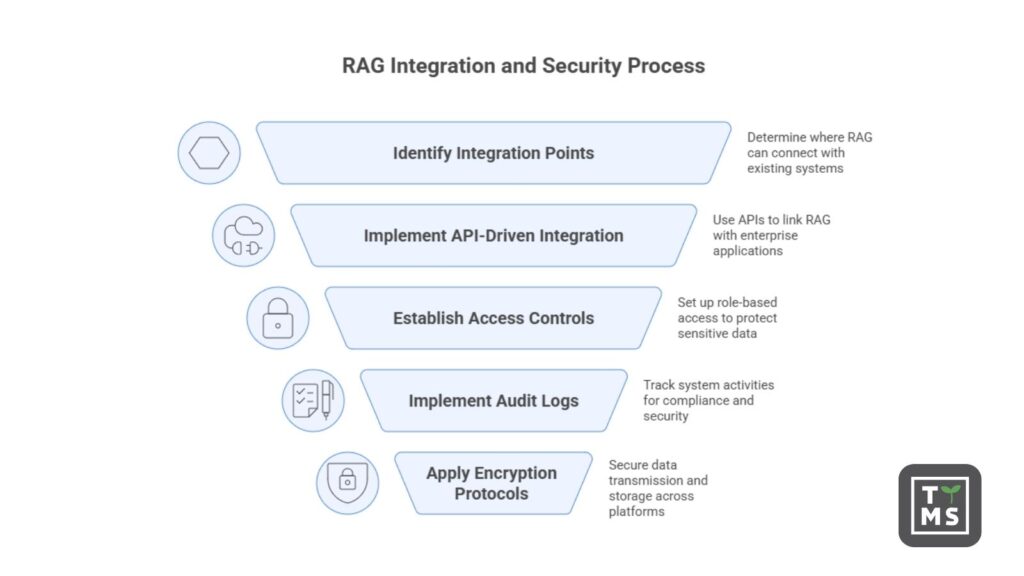

Successful RAG deployment depends on how well it integrates with existing IT infrastructure and business workflows. Organizations should assess their current technology stack to identify integration points and potential challenges.

API-driven integration allows RAG systems to access CRM, ERP, document management, and other enterprise applications without major system redesign. This reduces disruption and maximizes the value of existing technology investments.

Because RAG systems often handle sensitive information, role-based access controls, audit logs, and encryption protocols are essential to maintain compliance and protect data across connected platforms.

4. Business Applications and Use Cases

4.1 AI4Legal – RAG in service of law and compliance

AI4Legal was created for lawyers and compliance departments. By combining internal documents with legal databases, it enables efficient analysis of regulations, case law, and legal frameworks. This tool not only speeds up the preparation of legal opinions and compliance reports but also minimizes the risk of errors, as every answer is anchored in a verified source.

4.2 AI4Content – intelligent content creation with RAG

AI4Content supports marketing and content teams that face the daily challenge of producing large volumes of material. It generates texts that follow brand guidelines and are rooted in the business context, which reduces factual mistakes. This cuts down tedious editing work and allows teams to focus on creativity.

4.3 AI4E-learning – personalized training powered by RAG

AI4E-learning addresses the growing need for personalized learning and employee development. Based on company procedures and documentation, it generates quizzes, courses, and educational resources tailored to the learner’s profile. As a result, training becomes more engaging, while the process of creating content takes significantly less time.

4.4 AI4Knowledge Base – intelligent knowledge management for enterprises

At the heart of knowledge management lies AI4Knowledge Base, an intelligent hub that integrates dispersed information sources within an organization. Employees no longer need to search across multiple systems – they can simply ask a question and receive a reliable answer. This solution is particularly valuable in large companies and customer support teams, where quick access to information translates into better decisions and smoother operations.

4.5 AI4Localisation – automated translation and content localization

For global needs, AI4Localisation automates translation and localization processes. Using translation memories and corporate glossaries, it ensures terminology consistency and accelerates time-to-market for materials across new regions. This tool is ideal for international organizations where translation speed and quality directly impact customer communication.

5. Benefits of Implementing RAG in Business

5.1 More accurate and reliable answers

RAG grounds AI responses in verified sources rather than in potentially outdated training data. This reduces the risk of mistakes that could harm operations or customer trust. Answers can be traced back to their sources, which builds confidence and helps meet audit requirements. Just as importantly, users receive consistent information instead of varying responses.

5.2 Real-time access to information

With RAG, AI can use the latest data without retraining the model. Updates to policies, offers, or regulations are reflected in responses as soon as the updated sources are re-indexed. This is crucial in fast-moving industries, where outdated information can lead to poor decisions or compliance issues.

5.3 Better customer experience

Customers get fast, accurate, and personalized answers that reflect current product details, services, or account information. This reduces frustration and builds loyalty. RAG-powered self-service systems can even handle complex questions, while support teams resolve issues faster and more effectively.

5.4 Lower costs and higher efficiency

RAG automates time-consuming tasks like information searches or report preparation. Companies can manage higher workloads without hiring more staff. New employees get up to speed faster by accessing knowledge through conversational AI instead of lengthy training programs. Maintenance costs also drop, since updating a knowledge base is simpler than retraining a model.

5.5 Scalability and flexibility

RAG systems grow with your business, handling more data and users without losing quality. Their modular design makes it easy to add new data sources or interfaces. They also combine knowledge across departments, providing cross-functional insights that drive agility and better decision-making.

6. Common Challenges and Solutions

6.1 Data Quality and Management Issues

The effectiveness of RAG implementations depends heavily on the quality, accuracy, and currency of underlying information sources. Poor data quality can undermine system performance and user trust, making comprehensive data governance essential for successful RAG deployment and operation.

Organizations must establish clear data quality standards, regular validation processes, and update procedures to maintain information accuracy across all sources accessible to RAG systems. This governance includes identifying authoritative sources, establishing update responsibilities, and implementing quality control checkpoints.

Data consistency challenges arise when information exists across multiple systems with different formats, terminology, or update schedules. RAG implementations require standardization efforts and integration strategies that reconcile these differences while maintaining information integrity and accessibility.

6.2 Integration Complexity

Connecting RAG systems to diverse business platforms and data sources can present significant technical and organizational challenges. Legacy systems may lack modern APIs, security protocols may need updating, and data formats may require transformation to support effective RAG integration.

Phased implementation approaches help manage integration complexity by focusing on high-value use cases and gradually expanding system capabilities. This strategy enables organizations to gain experience with RAG technology while managing risk and resource requirements effectively.

Standardized integration frameworks and middleware solutions can simplify connection challenges while providing flexibility for future expansion. These approaches reduce technical complexity while ensuring compatibility with existing business systems and security requirements.

6.3 Security and Privacy Concerns

RAG systems require access to sensitive business information, creating potential security vulnerabilities if not properly designed and implemented. Organizations must establish comprehensive security frameworks that protect data throughout the retrieval, processing, and response generation workflow.

Access control mechanisms ensure that RAG systems respect existing permission structures and user authorization levels. This capability becomes particularly important in enterprise environments where different users should have access to different types of information based on their roles and responsibilities.

Audit and compliance requirements may necessitate detailed logging of information access, user interactions, and system decisions. RAG implementations must include appropriate monitoring and reporting capabilities to support regulatory compliance and internal governance requirements.
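Detailed logging can start as small as one structured record per retrieval event. The Python sketch below assumes a JSON-lines audit log is acceptable in your environment; the field names are illustrative, and printing stands in for writing to durable, tamper-evident storage. Keep in mind that queries may themselves contain sensitive data, so retention and redaction rules apply to the log as well.

```python
import json
from datetime import datetime, timezone


def audit_record(user_id: str, query: str, retrieved_ids: list[str]) -> str:
    """Serialize one retrieval event as a single JSON line for an append-only audit log."""
    return json.dumps({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user_id": user_id,
        "query": query,
        "retrieved_ids": retrieved_ids,
    })


# In production this line would be appended to durable, tamper-evident storage.
print(audit_record("u-1042", "What is the refund window?", ["policy-7"]))
```

Recording which source IDs were retrieved, not just the final answer, is what lets an auditor later reconstruct why the system said what it said.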

6.4 Performance and Latency Challenges

Real-time information retrieval and processing can impact system responsiveness, particularly when accessing large information repositories or complex integration environments. Organizations must balance comprehensive information access with acceptable response times for user interactions.

Optimization strategies include intelligent caching, pre-processing of common queries, and efficient vector database configurations that minimize retrieval latency. These approaches maintain system performance while ensuring comprehensive information access for user queries.
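Caching can be as simple as memoizing retrieval results for normalized queries. In this Python sketch, `slow_retrieve` is a hypothetical stand-in for an embedding call plus vector search, and the counter only exists to show how often it really runs; `functools.lru_cache` is the standard-library memoization decorator.

```python
from functools import lru_cache

CALLS = {"retrieval": 0}


def slow_retrieve(query: str) -> tuple[str, ...]:
    """Hypothetical stand-in for an expensive embedding + vector search round trip."""
    CALLS["retrieval"] += 1
    return ("policy-7",) if "refund" in query else ()


@lru_cache(maxsize=1024)
def cached_retrieve(normalized_query: str) -> tuple[str, ...]:
    # Results are tuples so cached values cannot be mutated by callers.
    return slow_retrieve(normalized_query)


def retrieve(query: str) -> tuple[str, ...]:
    # Normalize case and whitespace so trivially different phrasings share one cache entry.
    return cached_retrieve(" ".join(query.lower().split()))


retrieve("What is the refund window?")
retrieve("what is the   REFUND window?")
print(CALLS["retrieval"])
```

Two design cautions apply: the cache must be cleared (for example with `cached_retrieve.cache_clear()`) whenever the index is updated, or users will see stale answers, and if results are filtered by permissions, the user’s roles must be part of the cache key.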

Scalability planning becomes important as user adoption increases and information repositories grow. RAG systems must be designed to handle increased demand without degrading performance or compromising information accuracy and relevance.

6.5 Change Management and User Adoption

Successful RAG implementation requires user acceptance and adaptation of new workflows that incorporate AI-powered information access. Resistance to change can limit system value realization even when technical implementation is successful.

Training and education programs help users understand RAG capabilities and learn effective interaction techniques. These programs should focus on practical benefits and demonstrate how RAG systems improve daily work experiences rather than focusing solely on technical features.

Continuous feedback collection and system refinement based on user experiences improve adoption rates while ensuring that RAG implementations meet actual business needs rather than theoretical requirements. This iterative approach builds user confidence while optimizing system performance.

7. Future of RAG in Business (2025 and Beyond)

7.1 Emerging Trends and Technologies

The RAG technology landscape continues to evolve, with innovations that enhance its business applicability and value creation potential. Multimodal RAG systems that process text, images, audio, and structured data together are expanding application possibilities in industries that need to synthesize information from diverse sources. AI4Knowledge Base by TTMS is one such tool, enabling intelligent integration and analysis of knowledge in multiple formats.

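Hybrid RAG architectures that combine keyword-based (lexical) search with vector-based semantic search, and in some designs with knowledge graphs or structured queries, will drive real-time, context-aware responses, enhancing the precision and usefulness of enterprise AI applications. Because each method catches matches the other misses (exact terms such as product codes versus paraphrased meaning), these solutions enable more advanced information retrieval to address complex business intelligence requirements.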
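One common way to merge keyword and vector results is reciprocal rank fusion (RRF): each document earns 1/(k + rank) from every ranked list it appears in, with k = 60 a widely used default. The Python sketch assumes both searches have already run; the document IDs are invented, and RRF is one fusion option among several, not necessarily what any particular hybrid RAG product uses.

```python
def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Merge ranked lists: each document scores the sum of 1 / (k + rank) across lists."""
    scores: dict[str, float] = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(scores, key=scores.get, reverse=True)


keyword_hits = ["contract-2023", "invoice-17", "policy-7"]  # e.g. from keyword (BM25) search
vector_hits = ["policy-7", "contract-2023", "faq-3"]        # e.g. from embedding similarity
print(reciprocal_rank_fusion([keyword_hits, vector_hits]))
```

Documents that rank well in both lists (here `contract-2023` and `policy-7`) rise to the top, while results found by only one method still appear further down. Because RRF uses only ranks, it avoids having to compare keyword scores and similarity scores, which live on different scales.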

Agent-based RAG architectures introduce autonomous decision-making capabilities, allowing AI systems to execute complex workflows, learn from interactions, and adapt to evolving business needs. Personalized RAG and on-device AI will deliver highly contextual outputs processed locally to reduce latency, safeguard privacy, and optimize efficiency.

7.2 Expert Predictions

Experts predict that RAG will soon become a standard across industries, as it enables organizations to use their own data without exposing it to public chatbots. Yet AI hallucinations “are here to stay” – these tools can reduce mistakes, but they cannot replace critical thinking and fact-checking.

Healthcare applications will see particularly strong growth, as RAG systems can support personalized diagnostics by integrating real-time patient data with medical literature, with the potential to reduce diagnostic errors. Financial services will benefit from hybrid RAG in fraud detection, where combining structured transaction data with unstructured online sources enables more accurate risk analysis.

A good example of RAG’s effectiveness in the medical field is the study by YH Ke et al., which demonstrated its value in the context of surgery: the LLM-RAG model with GPT-4 achieved 96.4% accuracy in determining a patient’s fitness for surgery, outperforming both humans and non-RAG models.

7.3 Preparation Strategies for Businesses

Organizations that want to fully unlock the potential of RAG should begin with strong foundations. The key lies in establishing transparent data governance principles, improving information architecture, investing in employee development, and adopting tools that already implement this technology.

In this process, technology partnerships play a crucial role. Collaboration with an experienced provider – such as TTMS – helps shorten implementation time, reduce risks, and leverage proven methodologies. Our AI solutions, such as AI4Legal and AI4Content, are prime examples of how RAG can be effectively applied and tailored to specific industry requirements.

The future of business intelligence belongs to organizations that can seamlessly integrate RAG into their daily operations without losing sight of business objectives and user value. Those ready to embrace this evolution will gain a significant competitive advantage: faster and more accurate decision-making, improved operational efficiency, and enhanced customer experiences through intelligent knowledge access and synthesis. Do you need to integrate RAG? Contact us now!