Generative AI is a double-edged sword for businesses. Recent headlines warn that companies are “getting into trouble because of AI.” High-profile incidents show what can go wrong: A Polish contractor lost a major road maintenance contract after submitting AI-generated documents full of fictitious data. In Australia, a leading firm had to refund part of a government fee when its AI-assisted report was found to contain a fabricated court quote and references to non-existent research. Even lawyers were sanctioned for filing a brief with fake case citations from ChatGPT. And a fintech that replaced hundreds of staff with chatbots saw customer satisfaction plunge, forcing it to rehire humans. These cautionary tales underscore real risks – from AI hallucinations and errors to legal liabilities, financial losses, and reputational damage. The good news is that such pitfalls are avoidable. This expert guide offers practical legal, technological, and operational steps to help your company use AI responsibly and safely, so you can innovate without landing in trouble.

1. Understanding the Risks of Generative AI in Business

Before diving into solutions, it’s important to recognize the major AI-related risks that have tripped up companies. Knowing what can go wrong helps you put guardrails in place. Key pitfalls include:

- AI “hallucinations” (false outputs): Generative AI can produce information that sounds convincing but is completely made up. For example, an AI tool invented fictitious legal interpretations and data in a bid document – these “AI hallucinations” misled the evaluators and got the company disqualified. Similarly, Deloitte’s AI-generated report included a fake court judgment quote and references to studies that didn’t exist. Relying on unverified AI output can lead to bad decisions and contract losses.

- Inaccurate reports and analytics: If employees treat AI outputs as error-free, mistakes can slip into business reports, financial analysis, or content. In Deloitte’s case, inadequate oversight of an AI-written report led to public embarrassment and a fee refund. AI is a powerful tool, but as one expert noted, “AI isn’t a truth-teller; it’s a tool” – without proper safeguards, it may output inaccuracies.

- Legal liabilities and lawsuits: Using AI without regard for laws and ethics can invite litigation. The now-famous example is the New York lawyers who were fined for submitting a court brief full of fake citations generated by ChatGPT. Companies could also face IP or privacy lawsuits if AI misuses data. In Poland, authorities made it clear that a company is accountable for any misleading information it presents – even if it came from an AI. In other words, you can’t blame the algorithm; the legal responsibility stays with you.

- Financial losses: Mistakes from unchecked AI can directly hit the bottom line. An incorrect AI-generated analysis might lead to a poor investment or strategic error. We’ve seen firms lose lucrative contracts and pay back fees because AI introduced errors. Nearly 60% of workers admit to making AI-related mistakes at work, so the risk of costly errors is very real if there’s no safety net.

- Reputational damage: When AI failures become public, they erode trust with customers and partners. A global consulting brand had its reputation dented by the revelation of AI-made errors in its deliverable. On the consumer side, companies like Starbucks have faced public skepticism over “robot baristas” as they introduce AI assistants, prompting them to reassure customers that AI won’t replace the human touch. And fintech leader Klarna, after boasting of AI-only customer service, had to reverse course and admit that quality issues had hurt its brand. It only takes one AI fiasco to go viral for a company’s image to suffer.

These risks are real, but they are also manageable. The following sections offer a practical roadmap to harness AI’s benefits while avoiding the landmines that led to the above incidents.

2. Legal and Contractual Safeguards for Responsible AI

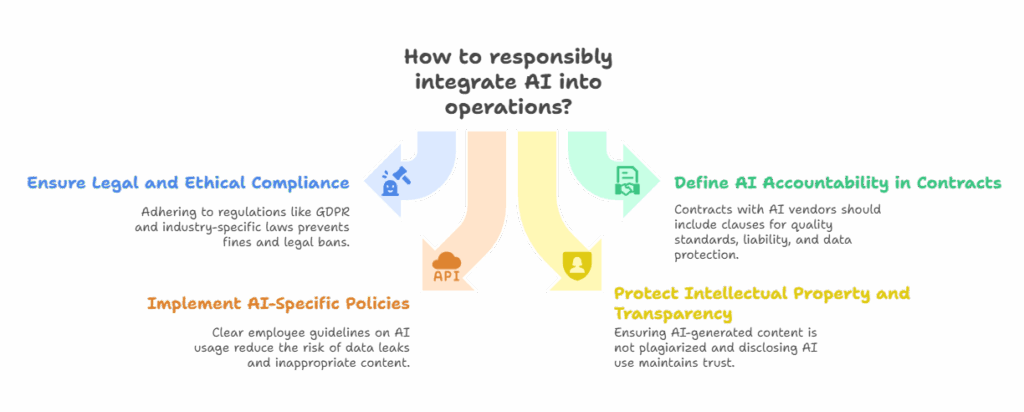

2.1 Stay within the lines of law and ethics

Before deploying AI in your operations, ensure compliance with all relevant regulations. For instance, data protection laws (like GDPR) apply to AI usage – feeding customer data into an AI tool must respect privacy rights. Industry-specific rules may also limit AI use (e.g. in finance or healthcare). Keep an eye on emerging regulations: the EU’s AI Act, for example, will require that AI systems are transparent, safe, and under human control. Non-compliance could bring hefty fines or legal bans on AI systems. Engage your legal counsel or compliance officer early when adopting AI, so you identify and mitigate legal risks in advance.

2.2 Use contracts to define AI accountability

When procuring AI solutions or hiring AI vendors, bake risk protection into your contracts. Define quality standards and remedies if the AI outputs are flawed. For example, if an AI service provides content or decisions, require clauses for human review and a warranty against grossly incorrect output. Allocate liability – the contract should spell out who is responsible if the AI causes damage or legal violations. Similarly, ensure any AI vendor is contractually obligated to protect your data (no unauthorized use of your data to train their models, etc.) and to follow applicable laws. Contractual safeguards won’t prevent mistakes, but they create recourse and clarity, which is crucial if something goes wrong.

2.3 Include AI-specific policies in employee guidelines

Your company’s code of conduct or IT policy should explicitly address AI usage. Outline what employees can and cannot do with AI tools. For example, forbid inputting confidential or sensitive business information into public AI services (to avoid data leaks), unless using approved, secure channels. Require that any AI-generated content used in work must be verified for accuracy and appropriateness. Make it clear that automated outputs are suggestions, not gospel, and employees are accountable for the results. By setting these rules, you reduce the chance of well-meaning staff inadvertently creating a legal or PR nightmare. This is especially important since studies show many workers are using AI without clear guidance – nearly half of employees in one survey weren’t even sure if their AI use was allowed. A solid policy educates and protects both your staff and your business.

2.4 Protect intellectual property and transparency

Legally and ethically, companies must be careful about the source of AI-generated material. If your AI produces text or images, ensure it’s not plagiarizing or violating copyrights. Use AI models that are licensed for commercial use, or that clearly indicate which training data they used. Disclose AI-generated content where appropriate – for instance, if an AI writes a report or social media post, you might need to indicate it’s AI-assisted to maintain transparency and trust. In contracts with clients or users, consider disclaimers that certain outputs were AI-generated and are provided with no warranty, if that applies. The goal is to avoid claims of deception or IP infringement. Always remember: if an AI tool gives you content, treat it as if an unknown author gave it to you – you would perform due diligence before publishing it. Do the same with AI outputs.

3. Technical Best Practices to Prevent AI Errors

3.1 Validate all AI outputs with human review or secondary systems

The simplest safeguard against AI mistakes is a human in the loop. Never let critical decisions or external communications go out solely on AI’s word. As one expert put it after the Deloitte incident: “The responsibility still sits with the professional using it… check the output, and apply their judgment rather than copy and paste whatever the system produces.” In practice, this means instituting a review step: if AI drafts an analysis or email, have a knowledgeable person vet it. If AI provides data or code, test it or cross-check it. Some companies use dual layers of AI – one generates, another evaluates – but ultimately, human judgment must approve. This human oversight is your last line of defense to catch hallucinations, biases, or context mistakes that AI might miss.
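A review step is easier to keep when the tooling refuses to skip it. The sketch below is one minimal way to express that idea in Python; the class and function names (`AIDraft`, `release`) are illustrative assumptions, not part of any particular product.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Optional


class Status(Enum):
    DRAFT = "draft"
    APPROVED = "approved"
    REJECTED = "rejected"


@dataclass
class AIDraft:
    """An AI-generated draft that stays blocked until a named person reviews it."""
    content: str
    source_tool: str  # hypothetical label for whichever AI tool produced the draft
    status: Status = Status.DRAFT
    reviewer: Optional[str] = None
    notes: list = field(default_factory=list)

    def review(self, reviewer: str, approve: bool, note: str = "") -> None:
        """Record the human decision and who made it."""
        self.reviewer = reviewer
        self.status = Status.APPROVED if approve else Status.REJECTED
        if note:
            self.notes.append(note)


def release(draft: AIDraft) -> str:
    """Return the content for sending; refuse anything a human has not approved."""
    if draft.status is not Status.APPROVED or not draft.reviewer:
        raise PermissionError("AI draft has not been approved by a human reviewer")
    return draft.content
```

Calling `release()` on an unreviewed draft raises an error, so the "copy and paste whatever the system produces" shortcut fails loudly instead of silently.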

3.2 Test and tune your AI systems before full deployment

Don’t toss an AI model into mission-critical work without sandbox testing. Use real-world scenarios or past data to see how the AI performs. Does a generative AI tool stay factual when asked about your domain, or does it start spewing nonsense if it’s uncertain? Does an AI decision system show any bias or odd errors under certain inputs? By piloting the AI on a small scale, you can identify failure modes. Adjust the system accordingly – this could mean fine-tuning the model on your proprietary data to improve accuracy, or configuring stricter parameters. For instance, if you use an AI chatbot for customer service, test it against a variety of customer queries (including edge cases) and have your team review the answers. Only when you’re satisfied that it meets your accuracy and tone standards should you scale it up. And even then, keep it monitored (more on that below).
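A pilot like this can start as a very small test harness. The following sketch assumes your chatbot can be called as a plain function that takes a question and returns text; `stub_bot`, the test cases, and the 95% pass threshold are all made-up placeholders to show the shape of the check.

```python
from typing import Callable


def evaluate(bot: Callable[[str], str], cases: list) -> dict:
    """Run each query through the bot and check that the required phrases appear."""
    failures = []
    for query, required_phrases in cases:
        answer = bot(query).lower()
        missing = [p for p in required_phrases if p.lower() not in answer]
        if missing:
            failures.append({"query": query, "missing": missing})
    passed = len(cases) - len(failures)
    pass_rate = passed / len(cases) if cases else 0.0
    return {"pass_rate": pass_rate, "failures": failures}


def stub_bot(query: str) -> str:
    """Stand-in for a real chatbot call, used only to make the sketch runnable."""
    if "refund" in query.lower():
        return "Refunds are processed within 14 days."
    return "I'm not sure; let me connect you with a colleague."


# Each case pairs a customer query with phrases a correct answer must contain.
CASES = [
    ("How long do refunds take?", ["14 days"]),
    ("Can you give me legal advice?", ["connect you"]),  # edge case: should defer
    ("What is your warranty period?", ["24 months"]),
]

report = evaluate(stub_bot, CASES)
ready_to_scale = report["pass_rate"] >= 0.95  # assumed threshold; set your own
```

Phrase matching is a crude measure of accuracy and says nothing about tone, so treat the failures list as a queue for your team to read rather than a final verdict.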

3.3 Provide AI with curated data and context

One reason AI outputs go off the rails is lack of context or training on unreliable data. You can mitigate this. If you’re using an AI to answer questions or generate reports in your domain, consider a retrieval-augmented approach: supply the AI with a database of verified information (your product documents, knowledge base, policy library) so it draws from correct data rather than guessing. This can greatly reduce hallucinations since the AI has a factual reference. Likewise, filter the training data for any in-house AI models to remove obvious inaccuracies or biases. The aim is to “teach” the AI the truth as much as possible. Remember, AI will confidently fill gaps in its knowledge with fabrications if allowed. By limiting its playground to high-quality sources, you narrow the room for error.
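To make the retrieval idea concrete, here is a deliberately simple sketch: it picks the most relevant verified documents by word overlap and builds a prompt that tells the model to stick to them. Word overlap is a stand-in for a real search index or embedding store, and the knowledge base entries are invented examples.

```python
import re


def tokenize(text: str) -> set:
    """Lowercase the text and split it into a set of words and numbers."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: dict, top_k: int = 2) -> list:
    """Rank documents by how many words they share with the question."""
    question_words = tokenize(question)
    scored = [(len(question_words & tokenize(body)), doc_id)
              for doc_id, body in documents.items()]
    scored = [pair for pair in scored if pair[0] > 0]
    scored.sort(key=lambda pair: (-pair[0], pair[1]))  # best score first, then by id
    return [doc_id for _, doc_id in scored[:top_k]]


def build_prompt(question: str, documents: dict, top_k: int = 2) -> str:
    """Assemble a prompt that restricts the model to the retrieved sources."""
    doc_ids = retrieve(question, documents, top_k)
    if doc_ids:
        context = "\n".join(f"[{doc_id}] {documents[doc_id]}" for doc_id in doc_ids)
    else:
        context = "(no matching documents)"
    return (
        "Answer using only the sources below. "
        "If they do not contain the answer, say you do not know.\n\n"
        f"Sources:\n{context}\n\nQuestion: {question}"
    )


# Invented knowledge base entries, standing in for your verified documents.
KNOWLEDGE_BASE = {
    "returns-policy": "Customers may return products within 30 days of delivery.",
    "shipping-policy": "Standard shipping takes 3 to 5 business days.",
    "warranty-policy": "Hardware products carry a 24 month warranty.",
}
```

The instruction to say "I do not know" matters as much as the retrieval itself: it gives the model an acceptable alternative to filling the gap with a fabrication.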

3.4 Implement checks for sensitive or high-stakes outputs

Not all AI mistakes are equal – a typo in an internal memo is one thing; a false statement in a financial report is another. Identify which AI-generated outputs in your business are high-stakes (e.g. public-facing content, legal documents, financial analyses). For those, add extra scrutiny. This could be multi-level approval (several experts must sign off), or using software tools that detect anomalies. For example, there are AI-powered fact-checkers and content moderation tools that can flag unsupported claims or inappropriate language in AI text. Use them as a first pass. Also, set up threshold triggers: if an AI system expresses low confidence or is handling an out-of-scope query, it should automatically defer to a human. Many AI providers let you adjust confidence settings or have an escalation rule – take advantage of these features to prevent unchecked dubious outputs.
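A threshold trigger can be as small as a routing function. This sketch assumes each AI answer arrives with a topic label and a confidence score between 0 and 1; how you obtain those values depends on your tool, and the topic lists and the 0.8 threshold are placeholders.

```python
from dataclasses import dataclass


@dataclass
class ModelAnswer:
    text: str
    topic: str         # assumed to come from a classifier or the calling workflow
    confidence: float  # assumed 0.0 to 1.0 score from the model or a separate scorer


IN_SCOPE_TOPICS = {"billing", "shipping", "returns"}
HIGH_STAKES_TOPICS = {"legal", "financial_report"}
CONFIDENCE_THRESHOLD = 0.8


def route(answer: ModelAnswer) -> str:
    """Decide whether an answer may go out automatically or needs a person."""
    if answer.topic in HIGH_STAKES_TOPICS:
        return "human_review"  # high-stakes output always gets extra scrutiny
    if answer.topic not in IN_SCOPE_TOPICS:
        return "human_review"  # out-of-scope query: defer rather than guess
    if answer.confidence < CONFIDENCE_THRESHOLD:
        return "human_review"  # the system itself is unsure
    return "auto_send"
```

The order of the checks is a design choice: high-stakes topics go to a person even when the model is confident, because a confident error is exactly the failure mode this section is about.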

3.5 Continuously monitor and update your AI

Treat an AI model like a living system that needs maintenance. Monitor its performance over time. Are error rates creeping up? Are there new types of questions or inputs where it struggles? Regularly audit the outputs – perhaps monthly quality assessments or sampling a percentage of interactions for review. Also, keep the AI model updated: if you find it repeatedly makes a certain mistake, retrain it with corrected data or refine its prompt. If regulations or company policies change, make sure the AI knows (for example, update its knowledge base or rules). Ongoing audits can catch issues early, before they lead to a major incident. In sensitive use cases, you might even invite external auditors or use bias testing frameworks to ensure the AI stays fair and accurate. The goal is to not “set and forget” your AI. Just as you’d service important machinery, periodically service your AI models.
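Sampling and trend checks like these need very little code. The sketch below assumes you log interaction IDs and that reviewers mark each sampled interaction as correct or not; the 5% sample rate in the usage note and the 0.02 tolerance are arbitrary starting points.

```python
import random
from typing import Optional


def sample_for_review(interaction_ids: list, rate: float,
                      seed: Optional[int] = None) -> list:
    """Pick a random share of logged interactions for human audit."""
    if not 0.0 <= rate <= 1.0:
        raise ValueError("rate must be between 0 and 1")
    count = round(len(interaction_ids) * rate)
    if rate > 0 and interaction_ids:
        count = max(1, count)  # always audit at least one when sampling is on
    return random.Random(seed).sample(interaction_ids, count)


def error_rate(review_results: list) -> float:
    """Share of reviewed interactions where the reviewer found an error (True)."""
    if not review_results:
        return 0.0
    return sum(review_results) / len(review_results)


def needs_attention(history: list, latest: float, tolerance: float = 0.02) -> bool:
    """Flag when the latest audited error rate exceeds the past average by more than tolerance."""
    if not history:
        return False
    baseline = sum(history) / len(history)
    return latest > baseline + tolerance
```

For example, `sample_for_review(ids, 0.05)` on 200 logged interactions selects 10 of them for a monthly audit, and `needs_attention` turns the question "are error rates creeping up?" into a check you can run after each audit.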

4. Operational Strategies and Human Oversight

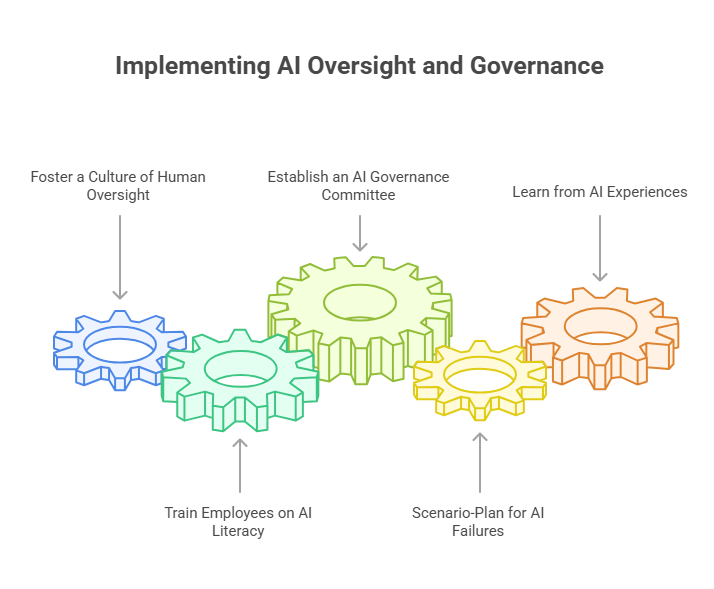

4.1 Foster a culture of human oversight

However advanced your AI, make it standard practice that humans oversee its usage. This mindset starts at the top: leadership should reinforce that AI is there to assist, not replace human judgment. Encourage employees to view AI as a junior analyst or co-pilot – helpful, but in need of supervision. For example, Starbucks introduced an AI assistant for baristas, but explicitly framed it as a tool to enhance the human barista’s service, not a “robot barista” replacement. This messaging helps set expectations that humans are ultimately in charge of quality. In daily operations, require sign-offs: e.g. a manager must approve any AI-generated client deliverable. By embedding oversight into processes, you greatly reduce the risk of unchecked AI missteps.

4.2 Train employees on AI literacy and guidelines

Even tech-savvy staff may not fully grasp AI’s limitations. Conduct training sessions on what generative AI can and cannot do. Explain concepts like hallucination with vivid examples (such as the fake cases ChatGPT produced, leading to real sanctions). Educate teams on identifying AI errors – for instance, checking sources for factual claims or noticing when an answer seems too general or “off.” Also, train them on the company’s AI usage policy: how to handle data, which tools are approved, and the procedure for reviewing AI outputs. The more AI becomes part of workflows, the more you need everyone to understand the shared responsibility in using it correctly. Empower employees to flag any odd AI behavior and to feel comfortable asking for a human review at any point. Front-line awareness is your early warning system for potential AI issues.

4.3 Establish an AI governance committee or point person

Just as organizations have security officers or compliance teams, it’s wise to designate people responsible for AI oversight. This could be a formal AI Ethics or AI Governance Committee that meets periodically. Or it might be assigning an “AI champion” or project manager for each AI system who tracks its performance and handles any incidents. Governance bodies should set the standards for AI use, review high-risk AI projects before launch, and keep leadership informed about AI initiatives. They can also stay updated on external developments (new regulations, industry best practices) and adjust company policies accordingly. The key is to centralize accountability and expertise rather than letting AI adoption sprawl unchecked. A governance group acts as a safeguard to ensure all the tips in this guide are being followed across the organization.

4.4 Scenario-plan for AI failures and response

Incorporate AI-related risks into your business continuity and incident response plans. Ask “what if” questions: What if our customer service chatbot gives offensive or wrong answers and it goes viral? What if an employee accidentally leaks data through an AI tool? By planning ahead, you can establish protocols: e.g. have a PR statement ready addressing AI missteps, so you can respond swiftly and transparently if needed. Decide on a rollback plan – if an AI system starts behaving unpredictably, who has authority to pull it from production or revert to manual processes? As part of oversight, do drills or tests of these scenarios, just like fire drills. It’s better to practice and hope you never need it, than to be caught off-guard. Companies that survive tech hiccups often do so because they reacted quickly and responsibly. With AI, a prompt correction and honest communication can turn a potential fiasco into a demonstration of your commitment to accountability.
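The rollback question ("who has authority to pull it from production?") can be built into the system itself as a kill switch with a manual fallback. This is a minimal sketch; the role names and the `AIService` class are hypothetical, and a real deployment would likely tie into your existing feature-flag or incident tooling.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable

AUTHORIZED_ROLES = {"ai_owner", "incident_manager"}  # hypothetical role names


@dataclass
class AIService:
    """An AI feature that authorized staff can switch off during an incident."""
    name: str
    enabled: bool = True
    audit_log: list = field(default_factory=list)

    def disable(self, actor: str, role: str, reason: str) -> None:
        """Switch the AI feature off and record who did it and why."""
        if role not in AUTHORIZED_ROLES:
            raise PermissionError(f"role '{role}' may not disable {self.name}")
        self.enabled = False
        timestamp = datetime.now(timezone.utc).isoformat()
        self.audit_log.append((timestamp, actor, reason))


def handle_request(service: AIService, query: str,
                   ai_handler: Callable[[str], str],
                   manual_handler: Callable[[str], str]) -> str:
    """Use the AI path while it is enabled; otherwise fall back to the manual process."""
    if service.enabled:
        return ai_handler(query)
    return manual_handler(query)
```

Running a drill then means calling `disable()` in a test environment and confirming that requests really do reach the manual path.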

4.5 Learn from others and from your own AI experiences

Keep an eye on case studies and news of AI in business – both successes and failures. The incidents we discussed (from Exdrog’s tender loss to Klarna’s customer service pivot) each carry a lesson. Periodically review what went wrong elsewhere and ask, “Could that happen here? How would we prevent or handle it?” Likewise, conduct post-mortems on any AI-related mistakes or near-misses in your own company. Maybe an internal report had to be corrected due to AI error – dissect why it happened and improve the process. Encourage a no-blame culture for reporting AI issues or mistakes; people should feel comfortable admitting an error was caused by trusting AI too much, so everyone can learn from it. By continuously learning, you build a resilient organization that navigates the evolving AI landscape effectively.

5. Conclusion: Safe and Smart AI Adoption

AI technology in 2025 is more accessible than ever to businesses – and with that comes the responsibility to use it wisely. Companies that fall into AI trouble often do so not because AI is malicious, but because it was used carelessly or without sufficient oversight. As the examples show, shortcuts like blindly trusting AI outputs or replacing human judgment wholesale can lead straight to pitfalls. On the other hand, businesses that pair AI innovation with robust checks and balances stand to reap huge benefits without the scary headlines. The overarching principle is accountability: no matter what software or algorithm you deploy, the company remains accountable for the outcome. By implementing the legal safeguards, technical controls, and human-centric practices outlined above, you can confidently integrate AI into your operations. AI can indeed boost efficiency, uncover insights, and drive growth – as long as you keep it on a responsible leash. With prudent strategies, your firm can leverage generative AI as a powerful ally, not a liability. In the end, “how not to get in trouble with AI” boils down to a simple ethos: innovate boldly, but govern diligently. The future belongs to companies that do both.

Ready to harness AI safely and strategically? Discover how TTMS helps businesses implement responsible, high-impact AI solutions at ttms.com/ai-solutions-for-business.

FAQ

What are AI “hallucinations” and how can we prevent them in our business?

AI hallucinations are instances when generative AI confidently produces incorrect or entirely fictional information. The AI isn’t lying on purpose – it’s generating plausible-sounding answers based on patterns, which can sometimes mean fabricating facts that were never in its training data. For example, an AI might cite laws or studies that don’t exist (as happened in a Polish company’s bid where the AI invented fake tax interpretations) or make up customer data in a report. To prevent hallucinations from affecting your business, always verify AI-generated content. Treat AI outputs as a first draft. Use fact-checking procedures: if AI provides a statistic or legal reference, cross-verify it from a trusted source. You can also limit hallucinations by using AI models that allow you to plug in your own knowledge base – this way the AI has authoritative information to draw from, rather than guessing. Another tip is to ask the AI to provide its sources or confidence level; if it can’t, that’s a red flag. Ultimately, preventing AI hallucinations comes down to a mix of choosing the right tools (models known for reliability, possibly fine-tuned on your data) and maintaining human oversight. If you instill a rule that “no AI output goes out unchecked,” the risk of hallucinations leading you astray will drop dramatically.
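Cross-verification can be partly automated when you maintain a list of references you already trust. The sketch below flags any citation in an AI draft that is not in that list; the register entries are obvious placeholders, and extracting citations from free text is left out because it depends on your document format.

```python
def normalize(reference: str) -> str:
    """Lowercase and collapse whitespace so trivial formatting differences do not matter."""
    return " ".join(reference.lower().split())


def unverified_citations(cited: list, trusted_register: set) -> list:
    """Return the cited references that do not appear in the trusted register."""
    known = {normalize(ref) for ref in trusted_register}
    return [ref for ref in cited if normalize(ref) not in known]


# Placeholder entries; in practice this would be your legal database or source list.
TRUSTED_REGISTER = {
    "Example v. Sample (placeholder case)",
    "Placeholder Act 2020, section 4",
}

cited_in_draft = [
    "Example v.  Sample (placeholder case)",
    "Invented v. Nowhere (placeholder case)",
]

to_check_by_hand = unverified_citations(cited_in_draft, TRUSTED_REGISTER)
```

A reference missing from the register is not proof of a hallucination; it only means a person has to look it up before the document goes out.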

Which laws or regulations about AI should companies be aware of in 2025?

AI governance is a fast-evolving space, and by 2025 several jurisdictions have introduced or proposed regulations. In the European Union, the EU AI Act is a landmark regulation (in force since August 2024, with its obligations phasing in over the following years) that classifies AI uses by risk and imposes requirements on high-risk AI systems – such as mandatory human oversight, transparency, and robustness testing. Companies operating in the EU will need to ensure their AI systems comply (or face fines that can reach into millions of euros or a percentage of global revenue for serious violations). Even outside the EU, there’s movement: for instance, authorities in the U.S. (like the FTC) have warned businesses against using AI in deceptive or unfair ways, implying that existing consumer protection and anti-discrimination laws apply to AI outcomes. Data privacy laws (GDPR in Europe, CCPA in California, etc.) also impact AI – if your AI processes personal data, you must handle that data lawfully (e.g., ensure you have consent or legitimate interest, and that you don’t retain it longer than needed). Intellectual property law is another area: if your AI uses copyrighted material in training or output, you must navigate IP rights carefully. Furthermore, sector-specific regulators are issuing guidelines – for example, medical regulators insist that AI aiding in diagnosis be thoroughly validated, and financial regulators may require explainability for AI-driven credit decisions to ensure no unlawful bias. It’s wise for companies to consult legal experts about the jurisdictions they operate in and keep an eye on new legislation. Also, use industry best practices and ethical AI frameworks as guiding lights even where formal laws lag behind. In summary, key legal considerations in 2025 include data protection, transparency and consent, accountability for AI decisions, and sectoral compliance standards. Being proactive on these fronts will help you avoid not only legal penalties but also the reputational hit of a public regulatory reprimand.

Will AI replace human jobs in our company, or how do we balance AI and human roles?

This is a common concern. The short answer: AI works best as an augmentation to human teams, not a wholesale replacement – especially in 2025. While AI can automate routine tasks and accelerate workflows, there are still many things humans do better (complex judgment calls, creative thinking, emotional understanding, and handling novel situations, to name a few). In fact, some companies that rushed to replace employees with AI have learned this the hard way. A well-known example is Klarna, a fintech company that eliminated 700 customer service roles in favor of an AI chatbot, only to find customer satisfaction plummeted; they had to rehire staff and switch to a hybrid AI-human model when automation alone couldn’t meet customers’ needs. The lesson is that completely removing the human element can hurt service quality and flexibility. To strike the right balance, identify tasks where AI genuinely excels (like data entry, basic Q&A, initial drafting of content) and use it there, but keep humans in the loop for oversight and for tasks requiring empathy, critical thinking, or expertise. Many forward-thinking companies are creating “AI-assisted” roles instead of pure AI replacements – for example, a marketer uses AI to generate campaign ideas, which she then curates and refines; a customer support agent handles complex cases while an AI handles FAQs and escalates when unsure. This not only preserves jobs but often makes those jobs more interesting (since AI handles drudge work). It’s also important to reskill and upskill employees so they can work effectively with AI tools. The goal should be to elevate human workers with AI, not eliminate them. In sum, AI will change job functions and require adaptation, but companies that blend human creativity and oversight with machine efficiency will outperform those that try to hand everything over to algorithms. 
As Starbucks’ leadership noted regarding their AI initiatives, the focus should be on using AI to empower employees for better customer service, not to create a “robot workforce.” By keeping that perspective, you maintain morale, trust, and quality – and your humans and AIs each do what they do best.

What should an internal AI use policy for employees include?

An internal AI policy is essential now that employees in various departments might use tools like ChatGPT, Copilot, or other AI software in their day-to-day work. A good AI use policy should cover several key points:

- Approved AI tools: List which AI applications or services employees are allowed to use for company work. This helps avoid shadow AI usage on unvetted apps. For example, you might approve a certain ChatGPT Enterprise version that has enhanced privacy, but disallow using random free AI websites that haven’t been assessed for security.

- Data protection guidelines: Clearly state what data can or cannot be input into AI systems. A common rule is “no sensitive or confidential data in public AI tools.” This prevents accidental leaks of customer information, trade secrets, source code, etc. (There have been cases of employees pasting confidential text into AI tools and unknowingly sharing it with the tool provider or the world.) If you have an in-house AI that’s secure, define what’s acceptable to use there as well.

- Verification requirements: Instruct employees to verify AI outputs just as they would a junior employee’s work. For instance, if an AI drafts an email or a report, the employee responsible must read it fully, fact-check any claims, and edit for tone before sending it out. The policy should make it clear that AI is an assistant, not an authoritative source. As evidence of why this matters, you might even cite the statistic that nearly 60% of workers admit to making AI-related mistakes at work – so everyone must stay vigilant and double-check.

- Ethical and legal compliance: The policy should remind users that using AI doesn’t exempt them from company codes of conduct or laws. For example, say you use an AI image generator – the resulting image must still adhere to licensing laws and not contain inappropriate content. Or if using AI for hiring recommendations, one must ensure it doesn’t introduce bias (and follows HR laws). In short, employees should apply the same ethical standards to AI output as they would to human work.

- Attribution and transparency: If employees use AI to help create content (like reports, articles, software code), clarify whether and how to disclose that. Some companies encourage noting when text or code was AI-assisted, at least internally, so that others reviewing the work know to scrutinize it. At the very least, employees should not present AI-generated work as solely their own without review – because if an error surfaces, the “I relied on AI” excuse won’t fly (the company will still be accountable for the error).

- Support and training: Let employees know what resources are available. If they have questions about using AI tools appropriately, whom should they ask? Do you have an AI task force or IT support that can assist? Encouraging open dialogue will make the policy a living part of company culture rather than just a document of dos and don’ts.

Once your AI use policy is drafted, circulate it and consider a brief training so everyone understands it. Update the policy periodically as new tools emerge or as regulations change. Having these guidelines in place not only prevents mishaps but also gives employees confidence to use AI in a way that’s aligned with the company’s values and risk tolerance.
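The approved-tools list and the data rules from such a policy can also be written down in a form that software can check, for example in an internal portal or browser plug-in. The sketch below is one possible shape; the tool names, data classes, and ceilings are illustrative assumptions rather than recommendations.

```python
# Data classes ordered from least to most sensitive (assumed classification scheme).
DATA_CLASSES = ["public", "internal", "confidential"]

# Hypothetical approved tools and the most sensitive data class each may receive.
APPROVED_TOOLS = {
    "chatgpt-enterprise": "internal",
    "in-house-assistant": "confidential",
}


def is_allowed(tool: str, data_class: str) -> tuple:
    """Check a planned AI use against the policy; return (allowed, reason)."""
    if data_class not in DATA_CLASSES:
        raise ValueError(f"unknown data class: {data_class}")
    if tool not in APPROVED_TOOLS:
        return False, f"{tool} is not on the approved tools list"
    ceiling = APPROVED_TOOLS[tool]
    if DATA_CLASSES.index(data_class) > DATA_CLASSES.index(ceiling):
        return False, f"{data_class} data may not be entered into {tool}"
    return True, "allowed"
```

Returning a reason alongside the decision keeps the check educational: an employee who is blocked learns which rule applies instead of just hitting a wall.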

How can we safely integrate AI tools without exposing sensitive data or security risks?

Data security is a top concern when using AI tools, especially those running in the cloud. Here are steps to ensure you don’t trade away privacy or security in the process of adopting AI:

- Use official enterprise versions or self-hosted solutions: Many AI providers offer business-grade versions of their tools (for example, OpenAI has ChatGPT Enterprise) which come with guarantees like not using your data to train their models, enhanced encryption, and compliance with standards. Opt for these when available, rather than the free or consumer versions, for any business-sensitive work. Alternatively, explore on-premise or self-hosted AI models that run in your controlled environment so that data never leaves your infrastructure.

- Encrypt and anonymize sensitive data: If you must use real data with an AI service, consider anonymizing it (remove personally identifiable information or trade identifiers) and encrypt communications. Also, check that the AI tool has encryption in transit and at rest. Never input things like full customer lists, financial records, or source code into an AI without clearing it through security. One strategy is to use test or dummy data when possible, or break data into pieces that don’t reveal the whole picture.

- Vendor security assessment: Treat an AI service provider like any other software vendor. Do they have certifications (such as SOC 2, ISO 27001) indicating strong security practices? What is their data retention policy – do they store the prompts and outputs, and if so, for how long and how is it protected? Has the vendor had any known breaches or leaks? A quick background check can save a lot of pain. If the vendor can’t answer these questions or give you a Data Processing Agreement, that’s a red flag.

- Limit integration scope: When integrating AI into your systems, use the principle of least privilege. Give the AI access only to the data it absolutely needs. For example, if an AI assistant helps answer customer emails, it might need customer order data but not full payment info. By compartmentalizing access, you reduce the impact if something goes awry. Also log all AI system activities – know who is using it and what data is going in and out.

- Monitor for unusual activity: Incorporate your AI tools into your IT security monitoring. If an AI system starts making bulk data requests or if there’s a spike in usage at odd hours, it could indicate misuse (either internal or an external hack). Some companies set up data loss prevention (DLP) rules to catch if employees are pasting large chunks of sensitive text into web-based AI tools. It might sound paranoid, but given reports that a majority of employees have tried sharing work data with AI tools (often not realizing the risk), a bit of monitoring is prudent.

- Regular security audits and updates: Keep the AI software up to date with patches, just like any other software, to fix security vulnerabilities. If you build a custom AI model, ensure the platform it runs on is secured and audited. And periodically review who has access to the AI tools and the data they handle – remove accounts that no longer need it (like former employees or team members who changed roles).

By taking these precautions, you can enjoy the efficiency and insights of AI without compromising on your company’s data security or privacy commitments. Always remember that any data handed to a third-party AI is data you no longer fully control – so hand it over with caution or not at all. When in doubt, consult your cybersecurity team to evaluate the risks before integrating a new AI tool.
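Two of the steps above, anonymizing data and limiting integration scope, lend themselves to a short illustration. This sketch masks email addresses and phone-like numbers before text is sent to an AI service and strips a record down to an allowlist of fields. The regular expressions are rough assumptions that will miss many formats, so treat this as a starting point next to proper DLP tooling, not a replacement for it.

```python
import re

# Rough patterns for illustration only; real-world PII detection needs far more care.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_PATTERN = re.compile(r"\+?\d[\d\s-]{7,}\d")

# Fields an AI email assistant is assumed to need; everything else is withheld.
ALLOWED_FIELDS = {"order_id", "status", "items"}


def redact(text: str) -> str:
    """Mask email addresses and phone-like numbers before text leaves your systems."""
    text = EMAIL_PATTERN.sub("[EMAIL]", text)
    text = PHONE_PATTERN.sub("[PHONE]", text)
    return text


def minimal_view(record: dict) -> dict:
    """Apply least privilege: pass on only the allowlisted fields of a record."""
    return {key: value for key, value in record.items() if key in ALLOWED_FIELDS}
```

An allowlist is used instead of a blocklist on purpose: a new sensitive field added to the record later stays hidden from the AI by default.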