Artificial intelligence is booming, and with it the demand for the energy needed to power its infrastructure is growing rapidly. Data centers, where AI models are trained and run, are becoming some of the largest new electricity consumers in the world. Investment in data centers hit record levels in 2024–2025: in 2025 alone, an estimated USD 580 billion was spent globally on AI-focused data center infrastructure. This has translated into a sharp increase in electricity consumption at both global and local scales, creating a range of challenges for the IT and energy sectors. Below, we summarize hard data, statistics, and trends from 2024–2025 as well as forecasts for 2026, focusing on energy consumption by data centers (for both AI model training and inference), the impact of this phenomenon on the energy sector (energy mix, renewables), and the key decisions facing managers implementing AI.

1. AI boom and rising energy consumption in data centers (2024-2025)

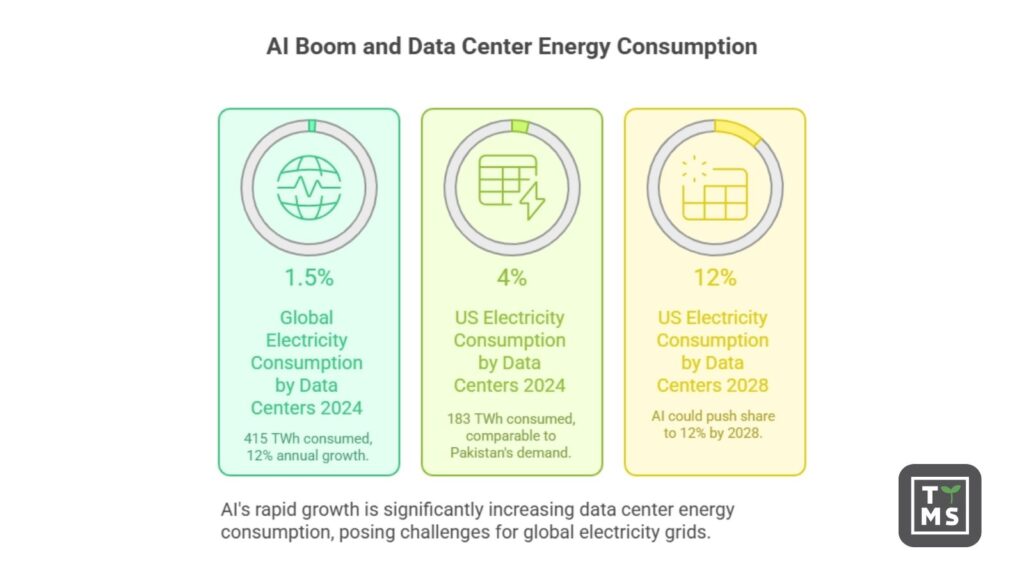

The development of generative AI and large language models has caused an explosion in demand for computing power. Technology companies are investing billions to expand data centers packed with graphics processing units (GPUs) and other AI accelerators. As a result, global electricity consumption by data centers reached around 415 TWh in 2024, already approx. 1.5% of total global electricity consumption. In the United States alone, data centers consumed about 183 TWh in 2024, more than 4% of national electricity consumption and comparable to the annual electricity demand of all of Pakistan. Growth has been rapid: globally, data center electricity consumption has risen by about 12% per year over the past five years, and the AI boom is accelerating it further.

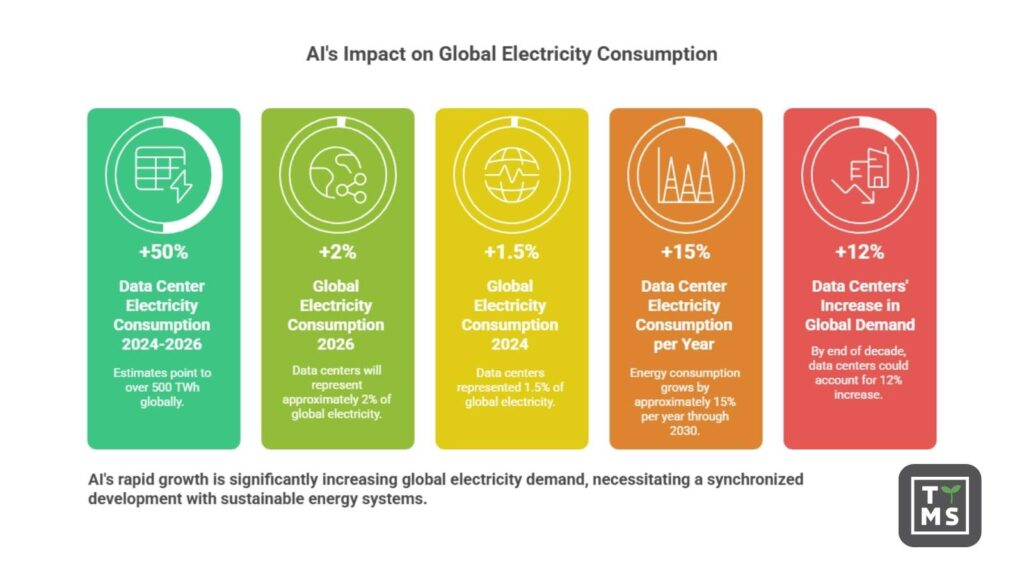

By 2023–2024, the impact of AI on infrastructure expansion was already visible: the installed capacity of newly built data centers in North America alone reached 6,350 MW by the end of 2024, more than twice the figure a year earlier. An average large AI-focused data center consumes as much electricity as 100,000 households, while the largest facilities currently under construction may require 20 times more. It is therefore no surprise that data centers already account for more than 4% of electricity consumption in the United States; according to an analysis by the Department of Energy, AI could push this share as high as 12% as early as 2028. Globally, data center electricity consumption is expected to more than double by 2030, approaching 945 TWh (IEA, base scenario), which is equivalent to the current electricity demand of all of Japan.
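To put the household comparison into engineering units, the short Python sketch below converts a number of households into an average continuous power draw. It assumes a round figure of 10,500 kWh per household per year (an assumption close to recent US averages, not a figure from this article) and treats consumption as constant over the year, so it yields an average draw rather than peak facility capacity.

```python
# Assumption (not from the article): average household use of ~10,500 kWh/year.
HOUSEHOLD_KWH_PER_YEAR = 10_500
HOURS_PER_YEAR = 8_760


def households_to_avg_mw(households: int,
                         kwh_per_household: float = HOUSEHOLD_KWH_PER_YEAR) -> float:
    """Convert 'as much electricity as N households' into average power in MW."""
    annual_kwh = households * kwh_per_household
    average_kw = annual_kwh / HOURS_PER_YEAR  # spread evenly over the year
    return average_kw / 1_000


typical_mw = households_to_avg_mw(100_000)  # average large AI-focused data center
largest_mw = 20 * typical_mw                # "20 times more" for the largest builds
print(f"typical: ~{typical_mw:.0f} MW, largest: ~{largest_mw / 1_000:.1f} GW")
```

Under these assumptions, 100,000 households correspond to an average draw of roughly 120 MW, and a facility 20 times larger to roughly 2.4 GW.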

2. Training vs. inference – where does AI consume the most electricity?

In the context of AI, it is worth distinguishing two main types of data center workloads: model training and inference, i.e. running the trained model to handle user queries. Training the most advanced models is extremely energy-intensive: for example, training one of the largest language models in 2023 consumed approximately 50 GWh of energy, equivalent to powering the entire city of San Francisco for three days. A government report estimated the power required to train a leading AI model at 25 MW, noting that the power requirements for training may double every year. These figures illustrate the scale: a single training run of a large model consumes as much energy as thousands of average households use in a year.
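Energy is power multiplied by time, which makes figures like these easy to sanity-check. The sketch below multiplies the report's 25 MW by a hypothetical 90-day training run and converts the 50 GWh figure into household-years, again assuming about 10,500 kWh per household per year (an assumption, not a source figure).

```python
# Assumption (not from the article): ~10,500 kWh per household per year.
HOUSEHOLD_KWH_PER_YEAR = 10_500


def training_energy_gwh(power_mw: float, days: float) -> float:
    """Energy = power x time: MW x hours gives MWh; divide by 1,000 for GWh."""
    return power_mw * days * 24 / 1_000


def household_years(energy_gwh: float) -> float:
    """Number of households whose annual consumption equals energy_gwh."""
    return energy_gwh * 1_000_000 / HOUSEHOLD_KWH_PER_YEAR  # GWh -> kWh


# 25 MW is the report's figure; the 90-day duration is hypothetical.
print(f"25 MW for 90 days: {training_energy_gwh(25, 90):.0f} GWh")
print(f"50 GWh = {household_years(50):,.0f} household-years")
```

With these inputs, a 25 MW run lasting 90 days uses about 54 GWh, in the same range as the 50 GWh quoted above, which in turn equals the annual consumption of roughly 4,800 households.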

By contrast, inference (i.e. using a trained model to answer questions, generate images, etc.) takes place at massive scale across many applications simultaneously. Although a single query to an AI model consumes only a tiny fraction of the energy required for training, inference accounts for 80–90% of total AI energy consumption on a global scale. To illustrate: a single question to a chatbot such as ChatGPT can consume about 10 times as much energy as a Google search. When billions of such queries are processed every day, the cumulative energy cost of inference begins to exceed the cost of one-off training runs. In other words, AI “in action” (production) already consumes more electricity than AI “in training”, which has significant implications for infrastructure planning.
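A simple break-even model shows why inference comes to dominate. The sketch below asks how many days of serving it takes for cumulative inference energy to exceed a one-off training cost. The 50 GWh training figure comes from the text; the energy per query (3 Wh) and the volume (one billion queries per day) are hypothetical inputs chosen only for illustration.

```python
def days_until_inference_exceeds_training(training_gwh: float,
                                          wh_per_query: float,
                                          queries_per_day: float) -> float:
    """Days of serving after which cumulative inference energy passes training energy."""
    daily_inference_gwh = wh_per_query * queries_per_day / 1e9  # Wh -> GWh
    return training_gwh / daily_inference_gwh


# 50 GWh is from the text; 3 Wh/query and 1e9 queries/day are hypothetical.
days = days_until_inference_exceeds_training(training_gwh=50,
                                             wh_per_query=3.0,
                                             queries_per_day=1e9)
print(f"inference overtakes training after ~{days:.0f} days")
```

With these assumed inputs, inference uses 3 GWh per day and overtakes the training cost after about 17 days; halving the energy per query doubles that period.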

Engineers and scientists are trying to mitigate this trend through model and hardware optimization. The energy efficiency of AI chips has improved significantly: GPUs can now perform 100 times as many computations per watt as they could in 2008. Despite these improvements, more complex models and their widespread adoption mean that demand for computation is growing faster than efficiency, so total power consumption keeps rising. Leading companies are reporting year-over-year increases of more than 100% in demand for AI computing power, which translates directly into higher electricity consumption.
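The tension between efficiency and demand can be expressed as two compounding factors: energy use grows by the ratio of compute-demand growth to efficiency growth. The sketch below derives an annual efficiency gain from the 100x-since-2008 figure, assuming "now" means 2024 (16 years), and combines it with the 100% annual growth in compute demand mentioned above. It is an illustrative back-of-the-envelope model, not a forecast.

```python
def annual_factor(total_gain: float, years: int) -> float:
    """Constant yearly improvement factor that compounds to total_gain over `years`."""
    return total_gain ** (1 / years)


def energy_growth(compute_demand_factor: float, efficiency_factor: float) -> float:
    """Yearly energy growth factor: compute demand divided by compute-per-watt gain."""
    return compute_demand_factor / efficiency_factor


# Assumption: "now" = 2024, so 100x efficiency accrued over 16 years.
efficiency = annual_factor(100, 2024 - 2008)
# +100% compute demand per year means a factor of 2.0.
energy = energy_growth(2.0, efficiency)
print(f"efficiency: +{efficiency - 1:.0%}/yr, energy use: +{energy - 1:.0%}/yr")
```

Under these assumptions, efficiency improves by about 33% per year while energy use still grows by about 50% per year.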

3. The impact of AI on the energy sector and the energy source mix

The growing energy demand of data centers poses significant challenges for the energy sector. Large, energy-intensive server farms can strain local power grids, forcing infrastructure expansion and the development of new generation capacity. In 2023, data centers in Virginia (USA) consumed as much as 26% of all electricity in the state. Ireland shows a similarly high share: 21% of national electricity consumption in 2022 was attributable to data centers, and forecasts indicate a share of up to 32% by 2026. Such a high concentration of demand in a single sector requires modernized transmission networks and more reserve capacity. Grid operators and local authorities warn that without investment, overloads may occur, and that the costs of expansion are passed on to end consumers. In the PJM region in the USA (covering several states), providing capacity for new data centers is estimated to have increased energy market costs by USD 9.3 billion, translating into roughly USD 18 per month in additional household electricity bills in some counties.

Where does the energy powering AI data centers come from? Today, most of it still comes from fossil fuels. Globally, around 56% of the electricity consumed by data centers comes from fossil fuels (approximately 30% coal and 26% natural gas), while most of the remainder comes from zero-emission sources: renewables (27%) and nuclear energy (15%). In the United States, natural gas dominated in 2024 (over 40%), with approximately 24% from renewables, 20% from nuclear power, and 15% from coal. However, this mix is expected to shift under the influence of two factors: ambitious climate targets set by technology companies and the availability of low-cost renewable energy.
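The percentages above can be turned into absolute volumes. The sketch below applies the global shares to the 415 TWh consumed by data centers in 2024 (both figures from this article) and reports any share the listed sources do not cover. Treating the 2024 total and these shares as referring to the same year is an assumption.

```python
GLOBAL_DC_TWH_2024 = 415  # from the article

# Global data center supply shares quoted in the article, in percent.
MIX_PCT = {"coal": 30, "natural gas": 26, "renewables": 27, "nuclear": 15}
FOSSIL = {"coal", "natural gas"}


def breakdown(total_twh: float, shares_pct: dict) -> tuple:
    """Return TWh per source plus the percentage not covered by the listed sources."""
    twh = {source: total_twh * pct / 100 for source, pct in shares_pct.items()}
    unattributed_pct = 100 - sum(shares_pct.values())
    return twh, unattributed_pct


twh, unattributed = breakdown(GLOBAL_DC_TWH_2024, MIX_PCT)
for source, value in twh.items():
    print(f"{source:<12} {value:6.1f} TWh")
print(f"fossil total: {sum(twh[s] for s in FOSSIL):.1f} TWh")
print(f"unattributed share: {unattributed}%")
```

The listed shares add up to 98%, so about 2% of supply is not attributed to any of the four sources; fossil fuels together account for roughly 230 TWh.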

The largest players (Google, Microsoft, Amazon, Meta) have announced climate-neutrality goals: for example, Google aims to reach net-zero emissions by 2030, and Microsoft aims to become carbon negative by 2030. These commitments require radical changes in how data centers are powered. Renewables are already the fastest-growing energy source for data centers: according to the IEA, renewable generation for data centers is growing at an average rate of 22% per year and is expected to cover nearly half of additional demand by 2030. Tech giants are investing heavily in wind and solar farms and signing power purchase agreements (PPAs) for green energy supplies. Since the beginning of 2025, leading AI companies have signed at least a dozen large solar energy contracts, each adding more than 100 MW of capacity for their data centers. Wind projects are developing in parallel: for example, Microsoft’s data center in Wyoming is powered entirely by wind energy, while Google purchases wind power for its data centers in Belgium.

Nuclear energy is making a comeback as a stable power source for AI. In the United States, shut-down nuclear power plants are being prepared for restart specifically to meet the needs of data centers: preparations are underway to restart the Three Mile Island (Pennsylvania) and Duane Arnold (Iowa) reactors by 2028, in cooperation with Microsoft and Google respectively. In addition, technology companies have invested in the development of small modular reactors (SMRs): Amazon backed the startup X-energy, Google agreed to buy 500 MW of SMR capacity from Kairos Power, and data center operator Switch agreed to buy power from reactors developed by Oklo, a company backed by OpenAI CEO Sam Altman. SMRs are expected to begin operation after 2030, but hyperscalers are already securing future supplies from these zero-emission sources.

Despite the growing share of renewables and nuclear power, natural gas and coal will remain important in the coming years for covering the surge in demand driven by AI. The IEA forecasts that approximately 40% of additional data center electricity consumption through 2030 will still be supplied by gas- and coal-based sources. In some countries (e.g. China and parts of Asia), coal continues to dominate the power mix for data centers. This creates climate challenges: although data centers currently account for only about 0.5% of global CO₂ emissions, analyses indicate they are one of the few sectors in which emissions are still rising, while many other sectors are expected to decarbonize. There are growing warnings that the expansion of energy-intensive AI may make climate goals harder to achieve if it is not balanced with clean energy.
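Combining the IEA figures quoted in this article gives a sense of absolute scale. The sketch below takes the 2024 level (415 TWh), the 2030 base-scenario level (945 TWh), and the 40% fossil share of additional demand; treating the 40% as applying to exactly this 2024–2030 increase is an assumption.

```python
def fossil_share_of_growth(base_twh: float, future_twh: float,
                           fossil_fraction: float) -> tuple:
    """Return (additional demand, part of it supplied by gas and coal), both in TWh."""
    additional = future_twh - base_twh
    return additional, additional * fossil_fraction


# 415 TWh (2024), 945 TWh (2030, IEA base scenario), 40% fossil: from the article.
added, fossil = fossil_share_of_growth(415, 945, 0.40)
print(f"added demand: {added} TWh, of which gas/coal: ~{fossil:.0f} TWh")
```

On these numbers, demand grows by 530 TWh, of which roughly 212 TWh would come from gas and coal.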

4. What will AI-driven data center energy demand look like in 2026?

From the perspective of 2026, further rapid growth in energy consumption driven by artificial intelligence is expected. If current trends continue, data centers will consume significantly more electricity in 2026 than in 2024: estimates point to over 500 TWh globally, approximately 2% of global electricity consumption (compared to 1.5% in 2024). Between 2024 and 2026 alone, data centers could therefore add on the order of 100 TWh of new demand, driven largely by AI. The International Energy Agency emphasizes that AI is the most important driver of growth in data center electricity demand and one of the key new electricity consumers globally. In the IEA base scenario, which assumes continued efficiency improvements, data center electricity consumption grows by approximately 15% per year through 2030. If the AI boom accelerates (more models, users, and deployments across industries), this growth could be even faster. In some scenarios, data centers could account for as much as 12% of the increase in global electricity demand by the end of the decade.
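The growth figures in this section follow standard compound-growth arithmetic. The sketch below projects the 2024 level of 415 TWh forward at the IEA's roughly 15% per year and, conversely, computes the constant annual rate implied by growing from 415 TWh to 945 TWh between 2024 and 2030. Applying a single constant rate every year is a simplifying assumption.

```python
def project(base_twh: float, rate: float, years: int) -> float:
    """Compound growth: base * (1 + rate) ** years."""
    return base_twh * (1 + rate) ** years


def implied_cagr(start: float, end: float, years: int) -> float:
    """Constant annual rate that turns `start` into `end` over `years`."""
    return (end / start) ** (1 / years) - 1


print(f"2026 at 15%/yr: ~{project(415, 0.15, 2):.0f} TWh")
print(f"2030 at 15%/yr: ~{project(415, 0.15, 6):.0f} TWh")
print(f"rate implied by 415 -> 945 TWh (2024-2030): {implied_cagr(415, 945, 6):.1%}")
```

At 15% per year, the projection gives about 549 TWh for 2026, consistent with the "over 500 TWh" estimate, and about 960 TWh for 2030; the rate implied by growth from 415 to 945 TWh is about 14.7% per year.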

The year 2026 will likely bring further investment in AI infrastructure. Many cloud and colocation providers plan to open new data center campuses over the next 1–2 years to meet growing demand. Governments and regions are actively competing to host such facilities, offering investors incentives and expedited permitting, as already seen in 2024–25. At the same time, growing environmental awareness may lead to stricter regulations in 2026. Some countries and states are debating requirements for data centers to partially rely on renewable energy sources or to report their carbon footprint and water consumption. Local moratoria on new energy-intensive server farms are also possible where the grid cannot support them; such ideas have already been proposed in regions with high concentrations of data centers (e.g. Northern Virginia).

From a technological perspective, 2026 may bring new generations of more energy-efficient AI hardware (e.g. next-generation GPUs/TPUs) as well as broader adoption of Green AI initiatives aimed at optimizing models for lower power consumption. However, given the scale of demand, total energy consumption by AI will almost certainly continue to grow – the only question is how fast. The direction is clear: the industry must synchronize the development of AI with the development of sustainable energy systems to avoid a conflict between technological ambitions and climate goals.

5. Challenges for companies: energy costs, sustainability, and IT strategy

The rapid growth in AI-driven energy demand confronts managers and executives with several key strategic decisions:

- Rising energy costs: Higher electricity consumption means higher bills. Companies implementing AI at scale must budget for significant energy expenditures. Forecasts indicate that without efficiency improvements, power costs may consume an increasing share of IT spending. The effect also reaches beyond data center operators: in the United States, the expansion of data centers could raise average household electricity bills by 8% by 2030, and by as much as 25% in the most heavily affected regions. For companies, this creates pressure to optimize consumption, whether through improved efficiency (better cooling, lower power usage effectiveness, or PUE, i.e. the ratio of total facility energy to the energy used by IT equipment) or by shifting workloads to regions with cheaper energy.

- Sustainability and CO₂ emissions: Corporate ESG targets are forcing technology leaders to pursue climate neutrality, which is difficult amid rapidly growing energy consumption. Large companies such as Google and Meta have already observed that the expansion of AI infrastructure has led to a surge in their CO₂ emissions despite earlier reductions. Managers therefore need to invest in emissions offsetting and clean energy sources. It is becoming the norm for companies to enter into long-term renewable energy contracts or even to invest directly in solar farms, wind farms, or nuclear projects to secure green energy for their data centers. There is also a growing trend toward the use of alternative sources – including trials of powering server farms with hydrogen, geothermal energy, or experimental nuclear fusion (e.g. Microsoft’s contract for 50 MW from the future Helion Energy fusion power plant) – all of which are elements of power supply diversification and decarbonization strategies.

- IT architecture choices and efficiency: IT decision-makers must decide how to deliver computing power for AI most efficiently. Options range from optimizing the models themselves (e.g. smaller models, compression, smarter algorithms) to specialized hardware (ASICs, next-generation TPUs, optical memory, etc.). The choice of deployment model, cloud vs on-premises, is also critical. Large cloud providers often run data centers with very high energy efficiency (PUE close to 1.1) and can scale workloads dynamically, improving hardware utilization and reducing energy waste. On the other hand, companies may consider their own data centers located where energy is cheaper or where renewable energy is readily available (e.g. regions with surplus renewable generation). AI workload placement strategy, i.e. deciding which computational tasks run in which region and when, is becoming a new area of cost optimization. For example, shifting flexible workloads to data centers running on wind power at night, or to cooler climates with lower cooling costs, can generate savings.

- Reputational and regulatory risk: Public awareness of AI’s energy footprint is growing. Companies must be prepared for questions from investors and the public about how “green” their artificial intelligence really is. A lack of sustainability initiatives may result in reputational damage, especially if competitors can demonstrate carbon-neutral AI services. In addition, new regulations can be expected – ranging from mandatory disclosure of energy and water consumption by data centers to efficiency standards or emissions limits. Managers should proactively monitor these regulatory developments and engage in industry self-regulation initiatives to avoid sudden legal constraints.
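PUE is defined as total facility energy divided by the energy consumed by IT equipment, so everything above 1.0 is overhead for cooling, power conversion, and similar loads. The sketch below compares a hypothetical 10 MW IT load running all year at a PUE of 1.5 (an assumed value for a less efficient facility) with the PUE of about 1.1 cited for large cloud providers.

```python
HOURS_PER_YEAR = 8_760


def facility_energy_mwh(it_load_mw: float, pue: float,
                        hours: float = HOURS_PER_YEAR) -> float:
    """PUE = total facility energy / IT energy, so total = IT energy * PUE."""
    return it_load_mw * hours * pue


def overhead_mwh(it_load_mw: float, pue: float) -> float:
    """Energy spent on cooling, power conversion, etc. rather than on computing."""
    return facility_energy_mwh(it_load_mw, pue) - facility_energy_mwh(it_load_mw, 1.0)


# Hypothetical 10 MW IT load running at a constant level all year.
for pue in (1.5, 1.1):
    print(f"PUE {pue}: total {facility_energy_mwh(10, pue):,.0f} MWh, "
          f"overhead {overhead_mwh(10, pue):,.0f} MWh")
```

For this hypothetical load, moving from PUE 1.5 to 1.1 cuts overhead from 43,800 MWh to 8,760 MWh per year without changing the computing work performed.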

In summary, the growing energy needs of AI are a phenomenon that, between 2024 and 2026, has evolved from a barely noticeable curiosity into a strategic challenge for both the IT sector and the energy industry. Hard data shows an exponential rise in electricity consumption – AI is becoming a significant energy consumer worldwide. The response to this trend must be innovation and planning: the development of more efficient technologies, investment in clean energy, and smart workload management strategies. Leaders face the task of finding a balance between driving the AI revolution and responsible energy stewardship – so that artificial intelligence drives progress without overloading the planet.

6. Is your AI architecture ready for rising energy and infrastructure costs?

AI is no longer just a software decision – it is an infrastructure, cost, and energy decision. At TTMS, we help large organizations assess whether their AI and cloud architectures are ready for real-world scale, including growing energy demand, cost control, and long-term sustainability. If your teams are moving AI from pilot to production, now is the right moment to validate your architecture before energy and infrastructure constraints become a business risk.

Learn how TTMS supports enterprises in designing scalable, cost-efficient, and production-ready AI architectures – talk to our experts.

Why is AI dramatically increasing energy consumption in data centers?

AI significantly increases energy consumption because it relies on extremely compute-intensive workloads, particularly large-scale inference running continuously in production environments. Unlike traditional enterprise applications, AI systems often operate 24/7, process massive volumes of data, and require specialized hardware such as GPUs and AI accelerators that consume far more power per rack. While model training is energy-intensive, inference at scale now accounts for the majority of AI-related electricity use. As AI becomes embedded in everyday business processes, energy demand grows structurally rather than temporarily, turning electricity into a core dependency of AI-driven organizations.

How does AI-driven energy demand affect data center location and cloud strategy?

Energy availability, grid capacity, and electricity pricing are becoming critical factors in data center location decisions. Regions with constrained grids or high energy costs may struggle to support large-scale AI deployments, while areas with abundant renewable energy or stable baseload power gain strategic importance. This directly influences cloud strategy, as companies increasingly evaluate where AI workloads run, not just how they run. Hybrid and multi-region architectures are now used not only for resilience and compliance, but also to optimize energy cost, carbon footprint, and long-term scalability.
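Workload placement can be framed as a small optimization problem: for a deferrable job, choose the region and start time that minimize a combination of electricity price and grid carbon intensity while still meeting a deadline. The sketch below is a minimal, hypothetical illustration; the region names, prices, carbon intensities, the equal 50/50 weighting, and the normalization scheme are all assumptions, and a real scheduler would use live price and carbon-intensity data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Slot:
    region: str           # hypothetical region name
    hour: int             # start hour (0-23) in one shared time zone (assumption)
    price_per_mwh: float  # hypothetical electricity price
    gco2_per_kwh: float   # hypothetical grid carbon intensity


def pick_slot(slots: list, latest_hour: int, carbon_weight: float = 0.5) -> Slot:
    """Pick the slot meeting the deadline with the lowest weighted price+carbon score."""
    eligible = [s for s in slots if s.hour <= latest_hour]
    if not eligible:
        raise ValueError("no slot meets the deadline")
    # Normalize each criterion by its maximum so the two are comparable.
    max_price = max(s.price_per_mwh for s in eligible)
    max_carbon = max(s.gco2_per_kwh for s in eligible)

    def score(s: Slot) -> float:
        return ((1 - carbon_weight) * s.price_per_mwh / max_price
                + carbon_weight * s.gco2_per_kwh / max_carbon)

    return min(eligible, key=score)


slots = [
    Slot("region-a", 14, 95.0, 420.0),
    Slot("region-a", 2, 60.0, 300.0),
    Slot("region-b", 3, 70.0, 120.0),   # e.g. windy night-time hours
    Slot("region-c", 20, 50.0, 500.0),
]
best = pick_slot(slots, latest_hour=6)
print(best.region, best.hour)
```

Here the windy night-time slot in region-b wins on the combined score; setting `carbon_weight=0.0` makes the scheduler choose purely on price and pick region-a at 2:00 instead.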

Will energy costs materially impact the ROI of AI investments?

Yes, energy costs are becoming a material component of AI return on investment. As AI workloads scale, electricity costs can rival or exceed traditional infrastructure costs such as hardware depreciation or software licensing. In regions experiencing rapid data center growth, rising power prices and grid expansion costs may further increase operational expenses. Organizations that fail to model energy consumption realistically risk underestimating the true cost of AI initiatives, which can distort financial forecasts and strategic planning.
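A first-order energy cost model for an AI deployment needs only a few inputs: fleet size, power per accelerator, average utilization, PUE, and electricity price. The sketch below is a simplified, hypothetical model: all inputs are placeholders, power is assumed to scale linearly with utilization, and host servers, networking, and storage are ignored, so it understates real consumption.

```python
HOURS_PER_YEAR = 8_760


def annual_energy_cost(n_accelerators: int, watts_each: float, utilization: float,
                       pue: float, price_per_kwh: float) -> float:
    """Yearly electricity cost of an accelerator fleet, including facility overhead.

    Simplifications: power scales linearly with utilization, and host servers,
    networking, and storage are ignored.
    """
    it_kw = n_accelerators * watts_each * utilization / 1_000
    facility_kwh = it_kw * HOURS_PER_YEAR * pue  # PUE adds cooling and other overhead
    return facility_kwh * price_per_kwh


# Hypothetical inputs: 1,000 accelerators at 700 W, 60% average utilization,
# PUE 1.2, and USD 0.10 per kWh, plus two illustrative price-increase scenarios.
for increase in (0.0, 0.08, 0.25):
    cost = annual_energy_cost(1_000, 700, 0.6, 1.2, 0.10 * (1 + increase))
    print(f"price +{increase:.0%}: USD {cost:,.0f} per year")
```

For the base inputs the model gives about USD 441,500 per year; rerunning it with price increases, such as the 8% and 25% bill increases cited earlier for US households, shows how sensitive an AI business case can be to power prices.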

Can renewable energy realistically keep up with AI-driven demand growth?

Renewable energy is expanding rapidly and plays a crucial role in powering AI infrastructure, but it is unlikely to fully offset AI-driven demand growth in the short term. While many technology companies are investing heavily in wind, solar, and long-term power purchase agreements, the pace of AI adoption is exceptionally fast. As a result, fossil fuels and nuclear energy are expected to remain part of the energy mix for data centers through at least the end of the decade. Long-term sustainability will depend on a combination of renewable expansion, grid modernization, energy storage, and improvements in AI efficiency.

What strategic decisions should executives make today to prepare for AI-related energy constraints?

Executives should treat energy as a strategic input to AI, not a secondary operational concern. This includes incorporating energy costs into AI business cases, aligning AI growth plans with sustainability goals, and assessing the resilience of energy supply in key regions. Decisions around cloud providers, workload placement, and hardware architecture should explicitly consider energy efficiency and long-term availability. Organizations that proactively integrate AI strategy with energy and sustainability planning will be better positioned to scale AI responsibly and competitively.