
Salesforce for the Logistics Industry: Digital Support for Sales, Service, and Partner Teams

Modern logistics companies, 3PL operators, and freight forwarders operate in an environment where speed of response, data transparency, and reliable communication have become key competitive advantages. Operational systems alone (TMS, WMS, or ERP) are no longer sufficient to build consistent customer and partner experiences at every stage of collaboration. This is where Salesforce for logistics comes in: a tool that streamlines sales processes, improves service delivery, and facilitates information exchange with partners. This article demonstrates how a CRM system can become real support for the transport, forwarding, and logistics (TFL) industry without interfering with operational processes, and what specific benefits its implementation brings.

1. Why Does the Logistics Industry Need a Unified CRM?

In logistics companies, TMS, WMS, and ERP systems handle core operational processes: transport planning, warehouse management, billing, and resource control. CRM in logistics plays a different, complementary role: it supports sales and customer service areas (front-office) by organizing information essential for managing commercial relationships and making business decisions.

With Salesforce, sales teams have access to consistent data on customers, contracts, and collaboration history without needing to access operational systems directly. CRM integration with TMS, WMS, and ERP eliminates manual information exchange, improves cross-departmental transparency, and supports smooth sales processes. This approach allows organizations to build a unified view of customer relationships (Customer 360) while maintaining full autonomy of systems responsible for logistics operations.

2. Salesforce Solutions Dedicated to Logistics Companies

Salesforce provides a suite of tools that support sales and service departments, facilitate communication with shippers and consignees, and enable the creation of self-service portals.

2.1 Sales Cloud – Automation of Quoting and Sales in Logistics

Sales Cloud supports key commercial processes: contact management, sales pipeline monitoring, and contract control. For a logistics operator, this means:

- Easier tracking of quote requests and rapid pricing preparation.
- Customer segmentation by cargo type, routing, or volume.
- Transparent performance reporting for different service lines (ocean freight, air freight, road transport, warehousing).

2.2 Service Cloud – Efficient Claims and Incident Management

Service Cloud serves as a central system for managing submissions: claims, shipment status inquiries, or incidents. It enables case creation with automatic assignment to appropriate teams and SLA definition.

- Standardization: Knowledge base and service scripts support rapid resolution of recurring issues.
- Oversight: The system provides better insight into communication history and enables easier customer service quality reporting.

2.3 Experience Cloud – Self-Service Portals for Shippers and Partners

Experience Cloud allows creation of dedicated portals that function as document centers. Customers can independently download bills of lading, invoices, proof of delivery (POD), and track shipment statuses. This reduces the number of routine inquiries to the service department and accelerates document flow in B2B relationships.

2.4 AI, Automation, and IoT – Intelligent Decision Support in TFL

AI functionalities (e.g., Salesforce Einstein) enable proactive risk detection and optimization of commercial activities. Integration with IoT data (telemetry, temperature sensors, GPS) allows transmission of important signals about cargo or fleet status to the CRM. The CRM uses this data for automatic customer notifications or initiating service processes, while advanced data analytics remains in specialized systems.
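
The IoT-to-CRM flow described in 2.4 can be illustrated with a simple threshold rule. The sketch below is only an illustration under stated assumptions and does not use any Salesforce API: the `TelemetryReading` and `CrmAction` types, the `evaluate_reading` function, the 2 to 8 °C band, and the action names are all hypothetical. It shows how a single sensor reading might be mapped either to a customer notification or to a service case, leaving heavier analytics to specialized systems.

```python
from dataclasses import dataclass
from typing import Optional


# Hypothetical telemetry record; field names are illustrative, not a Salesforce schema.
@dataclass(frozen=True)
class TelemetryReading:
    shipment_id: str
    temperature_c: float


# Hypothetical front-office action that the CRM side would take.
@dataclass(frozen=True)
class CrmAction:
    shipment_id: str
    kind: str  # "notify_customer" or "open_service_case"
    message: str


def evaluate_reading(
    reading: TelemetryReading,
    min_c: float = 2.0,          # assumed lower bound of the allowed band
    max_c: float = 8.0,          # assumed upper bound of the allowed band
    case_margin_c: float = 2.0,  # assumed deviation beyond which a case is opened
) -> Optional[CrmAction]:
    """Map one sensor reading to a CRM action, or None if it is within range."""
    if min_c <= reading.temperature_c <= max_c:
        return None
    # How far outside the allowed band the reading is decides the action type.
    deviation = max(min_c - reading.temperature_c, reading.temperature_c - max_c)
    kind = "open_service_case" if deviation > case_margin_c else "notify_customer"
    message = (
        f"Shipment {reading.shipment_id}: {reading.temperature_c:.1f} C "
        f"outside {min_c:.1f}-{max_c:.1f} C"
    )
    return CrmAction(reading.shipment_id, kind, message)
```

In a real integration the thresholds would likely come from contract or SLA terms held in the CRM, and the returned action would be handed to whatever notification or case mechanism the implementation uses.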

2.5 Implementation, Integration, and Managed Services

CRM implementation success depends on proper process design and correct data mapping from TMS/WMS systems. This stage includes permission configuration, information migration, and user training. The Managed Services model ensures continuity after project launch, managing updates and developing the system in line with changes in the logistics business.

2.6 Salesforce Platform – Custom-Built Applications

When standard features are insufficient, the platform allows creation of dedicated applications, such as custom quote forms or reporting automation specific to large logistics contracts. These extensions integrate with operational systems but do not replace them, offering flexibility without interfering with IT infrastructure.

3. Key Benefits of Implementing Salesforce CRM in Logistics Companies

3.1 Full Visibility of Customer Relationships and Communication

Integrated CRM consolidates contact history, quotes, contracts, and cases in one place, allowing sales representatives and service teams to quickly gain context before customer conversations. This centralization facilitates identification of recurring issues, evaluation of sales effectiveness, and tracking of contract terms and SLA commitments, resulting in shorter response times and higher service quality.

3.2 Higher Customer Service Quality and Faster Claims Resolution

Centralized case management enables automatic case creation and escalation, progress tracking, and access to complete incident documentation. As a result, claims and exceptions are resolved more efficiently, improving trust and reducing the risk of contract loss.

3.3 Operational Optimization Through Automation and Data Utilization

Through automation of routine tasks (e.g., notifications, status updates, document generation) and CRM data analysis, organizations can shift resources from administrative work to value-adding activities.
CRM information also supports commercial and strategic decisions, such as identifying highest-value customer segments or areas requiring service improvements.

3.4 Scalability and Flexibility in Feature Development

The Salesforce platform enables functionality development as the company grows without requiring operational system rebuilds. The ability to create custom applications, integrations, and automation allows rapid response to market changes, implementation of new sales models, and adjustment of service processes at relatively low cost and implementation time.

4. Why Partner with TTMS – Your Salesforce Partner for the Logistics Industry

At TTMS, we help logistics companies leverage Salesforce as a front-office that genuinely supports sales, customer service, and partners. We combine industry experience with technological expertise, ensuring CRM works in full harmony with TMS/WMS/ERP, without interfering with operational processes.

4.1 How We Work

We focus on practical, measurable implementations. Every project begins with a brief audit and joint priority setting. We then design integration architecture and configure Sales Cloud, Service Cloud, and Experience Cloud for logistics specifics. Where necessary, we create extensions and automation, and after implementation, we provide ongoing support (Managed Services).

4.2 What We Deliver in Practice

- Integrations with TMS/WMS/ERP that provide sales and service teams with current data on customers, orders, and statuses.
- Streamlined sales processes: logistics pipeline, rapid quoting, CPQ, margin control.
- Better customer service through SLA, claims handling, self-service portals, and automation.
- Data security and quality: appropriate roles, auditing, compliance with industry standards.
- Continuous system development so CRM scales with the business.

4.3 Why Partner with TTMS?

Because we don’t implement generic CRM; we deliver solutions tailored to logistics realities.
We focus on implementation speed, user simplicity, and concrete KPIs that demonstrate project value, from shortened quoting time to reduced service department inquiries. If you wish, we’ll prepare a preliminary action plan with recommended integration scope. Contact us now!

Can Salesforce replace a TMS or WMS system?

No, Salesforce is not designed for operations management (route planning, inventory levels). It serves as a front-office system that integrates data from TMS/WMS so sales and customer service departments have full visibility into customer relationships without accessing operational systems.

What data from logistics systems should be integrated with CRM?

Most commonly integrated are shipment statuses, order history, volume data, contract terms, and documents (invoices, POD). This allows sales representatives to see in the CRM whether a given customer is increasing turnover or has open claims.

Does Salesforce implementation require changing current processes in a freight forwarding company?

Implementation is an opportunity for optimization, but Salesforce is flexible enough to adapt to existing, proven processes. The goal is work automation, not complication.

How does Experience Cloud help in relationships with logistics partners?

It allows creation of a portal where partners (e.g., carriers or consignees) can independently update statuses, submit documents, or download orders. This eliminates hundreds of emails and phone calls daily.

How long does Salesforce implementation take in a logistics company?

Implementation time depends on integration scope. Initial modules (e.g., Sales Cloud) can be launched in a few weeks, while full integration with ERP/TMS systems typically takes 3 to 6 months.
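
The integration answer above (seeing in the CRM whether a customer is increasing turnover or has open claims) can be made concrete with a small sketch. It is only an illustration under assumptions: `MonthlyVolume`, `Claim`, and `customer_snapshot` are hypothetical names, the input records stand in for data already synchronized from TMS/WMS/ERP, and the trend is a deliberately naive comparison of the two most recent months.

```python
from dataclasses import dataclass
from typing import Dict, List, Union


# Hypothetical records assumed to be synchronized from operational systems.
@dataclass(frozen=True)
class MonthlyVolume:
    month: str      # ISO year-month, e.g. "2025-01", so string order is date order
    shipments: int


@dataclass(frozen=True)
class Claim:
    claim_id: str
    is_open: bool


def customer_snapshot(
    volumes: List[MonthlyVolume], claims: List[Claim]
) -> Dict[str, Union[str, int]]:
    """Summarize the volume trend and the number of open claims for one customer."""
    ordered = sorted(volumes, key=lambda v: v.month)
    # Naive trend: compare the latest month with the one before it.
    change = ordered[-1].shipments - ordered[-2].shipments if len(ordered) >= 2 else 0
    if change > 0:
        trend = "growing"
    elif change < 0:
        trend = "declining"
    else:
        trend = "flat"
    open_claims = sum(1 for claim in claims if claim.is_open)
    return {"trend": trend, "open_claims": open_claims}
```

A production view would more likely use a longer window or revenue rather than shipment counts; the point here is only that a few integrated fields are enough to give a sales representative this context.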

7 Must-Have Certifications to Look for in a Reliable IT Partner

Not all IT partners are created equal. In regulated, high-risk and AI-driven environments, certifications are no longer a “nice to have”. They are hard proof that a software company can deliver securely, responsibly and at scale. For enterprise clients and public institutions, the right certifications often determine whether a vendor is even eligible to participate in strategic projects. Below are seven essential certifications and authorizations that define a mature, enterprise-ready IT partner – including a groundbreaking new standard that is setting the future benchmark for responsible AI development.

1. Why These Certifications Matter When Choosing an IT Partner

These certifications are not accidental or aspirational. They represent the most commonly required standards in enterprise tenders, public-sector procurements and regulated IT projects across Europe. Together, they cover the core expectations placed on modern technology partners: information security, quality assurance, service continuity, regulatory compliance, sustainability, workforce safety and, increasingly, responsible artificial intelligence governance.

In many large-scale projects, the absence of even one of these certifications can disqualify a vendor at the pre-selection stage. This makes the list not a marketing statement, but a practical reflection of what organizations actually demand when selecting long-term, strategic IT partners.

1.1 ISO/IEC 27001 – Information Security Management System

ISO/IEC 27001 defines how an organization identifies, assesses and controls risks related to information security. It focuses specifically on protecting information assets such as client data, intellectual property and critical systems against unauthorized access, loss or disruption. For IT partners, this certification confirms that security is managed as a dedicated discipline – with formal risk assessments, incident response procedures and continuous monitoring.
Working with an ISO 27001-certified vendor reduces exposure to data breaches, regulatory penalties and security-driven operational downtime, particularly in projects involving sensitive or confidential information.

1.2 ISO 14001 – Environmental Management System

ISO 14001 confirms that an organization actively manages its environmental impact. In IT services, this includes responsible resource usage, sustainable infrastructure practices and compliance with environmental regulations. For enterprise and public-sector clients, this certification signals that sustainability is embedded into operational decision-making, not treated as a marketing afterthought.

1.3 MSWiA Concession – Authorization for Security-Sensitive Software Projects

The MSWiA (Polish Ministry of Interior and Administration) concession is a Polish government authorization required for companies delivering software solutions for police, military and other security-related institutions. It defines strict operational, organizational and personnel standards. In practice, this authorization covers work involving classified information, restricted-access systems and elements of critical national infrastructure. Possession of this concession proves that an IT partner is trusted to operate in environments where confidentiality, national security and procedural discipline are critical.

1.4 ISO 9001 – Quality Management System

ISO 9001 governs how an organization ensures consistent quality in the way work is planned, executed and improved. Unlike security or service standards, it focuses on process discipline, repeatability and accountability across the entire delivery lifecycle. In software development, this translates into predictable project execution, clearly defined responsibilities, transparent communication and measurable outcomes.
An ISO 9001-certified IT partner demonstrates that quality is not dependent on individual teams or people, but is embedded systemically across projects and client engagements.

1.5 ISO/IEC 20000 – IT Service Management System

ISO/IEC 20000 addresses how IT services are operated and supported once they are in production. It defines best practices for service design, delivery, monitoring and continuous improvement, with a strong emphasis on availability, reliability and service continuity. This certification is particularly critical for managed services, long-term outsourcing and mission-critical systems, where operational stability matters as much as development capability. An ISO/IEC 20000-certified IT partner proves that IT services are managed as ongoing, business-critical operations rather than one-off technical deliverables.

1.6 ISO 45001 – Occupational Health and Safety Management System

ISO 45001 defines how organizations protect employee health and safety. In IT, this includes workload management, operational resilience and creating stable working conditions for delivery teams. For clients, it indirectly translates into lower project risk, reduced staff turnover and higher continuity in complex, long-running initiatives.

1.7 ISO/IEC 42001 – Artificial Intelligence Management System

1.7.1 Setting a New Benchmark for Responsible AI

ISO/IEC 42001 is the world’s first international standard dedicated exclusively to the management of artificial intelligence systems. It defines how organizations should design, develop, deploy and maintain AI in a trustworthy, transparent and accountable way. ISO/IEC 42001 directly supports key requirements of the EU AI Act, including structured AI risk management, defined human oversight mechanisms, lifecycle control and documentation of AI systems.

TTMS is the first Polish company to receive certification under ISO/IEC 42001, confirmed through an audit conducted by TÜV Nord Poland.
This places the company among the earliest operational adopters of this standard in Europe. The certification validates that TTMS’s Artificial Intelligence Management System (AIMS) meets international requirements for responsible AI governance, risk management and regulatory alignment.

1.7.2 Why ISO/IEC 42001 Matters

- Trust and credibility – AI systems are developed with formal governance, transparency and accountability.
- Risk-aware innovation – AI-related risks are identified, assessed and mitigated without slowing down delivery.
- Regulatory readiness – The framework supports alignment with evolving legal requirements, including the EU AI Act.
- Market leadership – Early adoption signals maturity and readiness for enterprise-scale AI projects.

1.7.3 What This Means for Clients and Partners

Under ISO/IEC 42001, all AI components developed or integrated by TTMS are governed by a unified management system. This includes documentation, ethical oversight, lifecycle control and continuous monitoring. For organizations selecting an IT partner, this translates into lower compliance risk, stronger protection of users and data, and higher confidence that AI-enabled solutions are built responsibly from day one.

2. A Fully Integrated Management System

Together, these seven certifications and authorizations operate within a comprehensive Integrated Management System (IMS). This means that security, quality, service delivery, sustainability, workforce safety and – increasingly critical – artificial intelligence governance are managed as interconnected processes rather than isolated compliance initiatives.

For decision-makers comparing IT partners, this level of integration is not about checklists or logos. It significantly reduces organizational risk, increases operational consistency and enables vendors to deliver complex, regulated and future-proof digital solutions at scale, across long-term engagements.

3. Why Integrated Certification Matters for Clients

In practice, this level of certification and integration delivers tangible benefits for clients:

- Reduced due diligence effort – certified processes shorten vendor assessment and compliance verification.
- Fewer client-side audits – independent third-party certification replaces repeated internal controls.
- Faster project onboarding – standardized governance accelerates contractual and operational startup.
- Lower compliance risk – regulatory, security and operational controls are embedded by default.
- Greater delivery predictability – projects run on proven, repeatable frameworks rather than ad hoc practices.

In day-to-day cooperation, certified and integrated management systems simplify client onboarding, standardize reporting and reduce the scope and frequency of client-side audits. They also provide a stable foundation for clearly defined SLAs, escalation paths and compliance reporting, enabling faster project start-up and smoother long-term delivery.

Ultimately, this level of certification significantly reduces the risks most often associated with selecting an IT partner. It limits dependency on individual people rather than processes, lowers the likelihood of unpredictable delivery models and minimizes the danger of vendor lock-in caused by undocumented or opaque practices. For decision-makers, certified and integrated management systems provide assurance that projects are governed by structure, transparency and continuity – not by improvisation.

4. From Certification to Execution

Certifications matter only if they translate into real operational practices. At TTMS, quality, security and compliance frameworks are not treated as formal requirements, but as working management systems embedded into daily delivery.
If your organization is evaluating an IT partner or looking to strengthen its own governance, quality management and compliance capabilities, TTMS supports clients across regulated industries in designing, implementing and operating certified management systems. Learn more about how we approach quality and integrated management in practice: Quality Management Services at TTMS

FAQ

Why are ISO certifications important when choosing an IT partner?

ISO certifications provide independent verification that an IT partner operates according to internationally recognized standards. They reduce operational, security and compliance risks while increasing predictability and trust in long-term cooperation.

Is ISO/IEC 27001 enough to ensure data security in IT projects?

ISO/IEC 27001 is a strong foundation, but it works best as part of a broader management system. When combined with service management, quality and AI governance standards, it ensures security is embedded across the entire delivery lifecycle.

What makes ISO/IEC 42001 different from other ISO standards?

ISO/IEC 42001 is the first standard focused solely on artificial intelligence. It addresses AI-specific risks such as bias, transparency, accountability and regulatory compliance, which are not fully covered by traditional management systems.

Why should enterprises care about AI management standards now?

As AI becomes embedded in business-critical systems, regulatory scrutiny and ethical expectations are increasing. AI management standards help organizations avoid legal exposure while building sustainable, trustworthy AI solutions.

How do multiple certifications benefit clients in real projects?

Multiple certifications ensure that security, quality, service reliability, compliance and responsible innovation are managed consistently. For clients, this means fewer surprises, lower risk and higher confidence throughout the project lifecycle.

TTMS at World Defense Show 2026 in Riyadh

Transition Technologies MS participated in World Defense Show 2026, held on 8-12 February in Riyadh, Saudi Arabia – one of the most significant global events dedicated to the defense and security sector.

The exhibition confirmed a clear direction of technological development across the industry. Modern defense is increasingly shaped not only by hardware platforms, but by software, advanced analytics and artificial intelligence embedded directly into operational systems. Among the dominant themes observed during the event were:

- the growing deployment of hybrid VTOL unmanned aerial systems combining operational flexibility with extended range,
- the rapid expansion of virtual and simulation-based training environments using VR and AR technologies,
- deeper integration of AI into command support, fire control and situational awareness systems,
- the continued evolution of integrated C2 and C4ISR architectures, particularly in the context of counter-UAS and air defense capabilities.

A strong emphasis was also placed on autonomy and cost-effective air defense solutions, reflecting the operational challenges posed by the large-scale use of unmanned platforms in contemporary conflicts.

For TTMS, World Defense Show 2026 provided an opportunity to engage in discussions on AI-driven decision support systems, advanced training platforms, and software layers supporting integrated defense architectures. The event enabled valuable exchanges with international partners and opened new perspectives for cooperation in complex, mission-critical environments.

Participation in WDS 2026 reinforced the view that the future battlefield will be increasingly digital, interconnected and software-defined – and that effective defense transformation requires not only advanced platforms, but intelligent systems integrating data, sensors and operational decision-making.

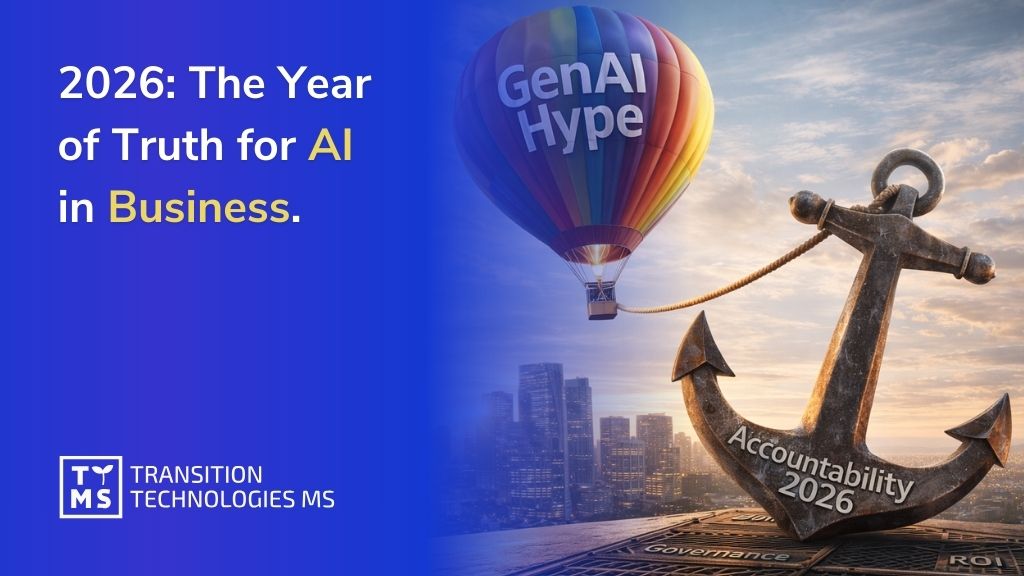

2026: The Year of Truth for AI in Business – Who Will Pay for the Experiments of 2023–2025?

1. Introduction: From Hype to Hard Truths

For the past three years, artificial intelligence adoption in business has been driven by whirlwind hype and experimentation. Companies poured billions into generative AI pilots, eager to transform “literally everything” with AI. 2025, in particular, was the peak of this AI gold rush, as many firms moved from experiments to real deployments. Yet the reality lagged behind the promises – AI’s true impact remained uneven and hard to quantify, often because the surrounding systems and processes weren’t ready to support lasting results. As the World Economic Forum aptly noted, “If 2025 has been the year of AI hype, 2026 might be the year of AI reckoning”. In 2026, the bill for those early AI experiments is coming due in the form of technical debt, security risks, regulatory scrutiny, and investor impatience.

2026 represents a pivotal shift: the era of unchecked AI evangelism is giving way to an era of AI evaluation and accountability. The question businesses must answer now isn’t “Can AI do this?” but rather “How well can it do it, at what cost, and who bears the risk?”. This article examines how the freewheeling AI experiments of 2023-2025 created hidden costs and risks, and why 2026 is shaping up to be the year of truth for AI in business – a year when hype meets reality, and someone has to pay the price.

2. 2023-2025: A Hype-Driven AI Experimentation Era

In hindsight, the years 2023 through 2025 were an AI wild west for many organizations. Generative AI (GenAI) tools like ChatGPT, Copilots, and custom models burst onto the scene, promising to revolutionize coding, content creation, customer service, and more. Tech giants and startups alike invested unprecedented sums in AI development and infrastructure, fueling a frenzy of innovation. Across nearly every industry, AI was touted as a transformative force, and companies raced to pilot new AI use cases to avoid being left behind.
However, this rush came with a stark contradiction. Massive models and big budgets grabbed headlines, but the “lived reality” for businesses often fell short of the lofty promises. By late 2025, many organizations struggled to point to concrete improvements from their AI initiatives. The problem wasn’t that AI technology failed – in many cases, the algorithms worked as intended. Rather, the surrounding business processes and support systems were not prepared to turn AI outputs into durable value. Companies lacked the data infrastructure, change management, and integration needed to realize AI’s benefits at scale, so early pilots rarely matured into sustained ROI.

Enthusiasm for AI nonetheless remained sky-high. Early missteps and patchy results did little to dampen the “AI race” mentality. If anything, failures shifted the conversation toward making AI work better. As one analysis put it, “Those moments of failure did not diminish enthusiasm – they matured initial excitement into a stronger desire for [results]”. By 2025, AI had moved decisively from sandbox to real-world deployment, and executives entered 2026 still convinced that AI is an imperative – but now wiser about the challenges ahead.

3. The Mounting Technical & Security Debt from Rapid AI Adoption

One of the hidden costs of the 2023-2025 AI rush is the significant technical debt and security debt that many organizations accumulated. In the scramble to deploy AI solutions quickly, shortcuts were taken – especially in areas like AI-generated code and automated workflows – that introduced long-term maintenance burdens and vulnerabilities.

AI coding assistants dramatically accelerated software development, enabling developers to churn out code up to 2× faster. But this velocity came at a price. Studies found that AI-generated code often favors quick fixes over sound architecture, leading to bugs, security vulnerabilities, duplicated code, and unmanageable complexity piling up in codebases.
As one report noted, “the immense velocity gain inherently increases the accumulation of code quality liabilities, specifically bugs, security vulnerabilities, structural complexity, and technical debt”. Even as AI coding tools improve, the sheer volume of output overwhelms human code review processes, meaning bad code slips through. The result: a growing backlog of “structurally weak” code and latent defects that organizations must now pay to refactor and secure. Forrester researchers predict that by 2026, 75% of technology decision-makers will be grappling with moderate to severe technical debt, much of it due to the speed-first, AI-assisted development approach of the preceding years. This technical debt isn’t just a developer headache – it’s an enterprise risk. Systems riddled with AI-introduced bugs or poorly maintained AI models can fail in unpredictable ways, impacting business operations and customer experiences.

Security leaders are likewise sounding alarms about “security debt” from rapid GenAI adoption. In the rush to automate tasks and generate code/content with AI, many companies failed to implement proper security guardrails. Common issues include:

- Unvetted AI-generated code with hidden vulnerabilities (e.g. insecure APIs or logic flaws) being deployed into production systems. Attackers can exploit these weaknesses if not caught.
- “Shadow AI” usage by employees – workers using personal ChatGPT or other AI accounts to process company data – leading to sensitive data leaks. For example, in 2023, Samsung engineers accidentally leaked confidential source code to ChatGPT, prompting the company to ban internal use of generative AI until controls were in place. Samsung’s internal survey found 65% of participants saw GenAI tools as a security risk, citing the inability to retrieve data once it’s on external AI servers. Many firms have since discovered employees pasting client data or source code into AI tools without authorization, creating compliance and IP exposure issues.
- New attack vectors via AI integrations. As companies wove AI into products and workflows, they sometimes created fresh vulnerabilities. Threat actors are now leveraging generative AI to craft more sophisticated cyberattacks at machine speed, from convincing phishing emails to code exploits. Meanwhile, AI services integrated into apps could be manipulated (via prompt injection or data poisoning) unless properly secured.

The net effect is that security teams enter 2026 with a backlog of AI-related risks to mitigate. Regulators, customers, and auditors are increasingly expecting “provable security controls across the AI lifecycle (data sourcing, training, deployment, monitoring, and incident response)”. In other words, companies must now pay down the security debt from their rapid AI uptake by implementing stricter access controls, data protection measures, and AI model security testing. Even cyber insurance carriers are reacting – some insurers now require evidence of AI risk management (like adversarial red-teaming of AI models and bias testing) before providing coverage.

Bottom line: The experimentation era accelerated productivity but also spawned hidden costs. In 2026, businesses will have to invest time and money to clean up “AI slop” – refactoring shaky AI-generated code, patching vulnerabilities, and instituting controls to prevent data leaks and abuse. Those that don’t tackle this technical and security debt will pay in other ways, whether through breaches, outages, or stymied innovation.

4. The Governance Gap: AI Oversight Didn’t Keep Up

Another major lesson from the 2023-2025 AI boom is that AI adoption raced ahead of governance. In the frenzy to deploy AI solutions, many organizations neglected to establish proper AI governance, audit trails, and internal controls.
Now, in 2026, that oversight gap is becoming painfully clear. During the hype phase, exciting AI tools were often rolled out with minimal policy guidance or risk assessment. Few companies had frameworks in place to answer critical questions like: Who is responsible for AI decision outcomes? How do we audit what the AI did? Are we preventing bias, IP misuse, or compliance violations by our AI systems?

The result is that many firms operated on AI “trust” without “verify.” For instance, employees were given AI copilots to generate code or content, but organizations lacked audit logs or documentation of what the AI produced and whether humans reviewed it. Decision-making algorithms were deployed without clear accountability or human-in-the-loop checkpoints. In a PwC survey, nearly half of executives admitted that putting Responsible AI principles into practice has been a challenge. While a strong majority agree that “responsible AI” is crucial for ROI and efficiency, operationalizing those principles (through bias testing, transparency, control mechanisms) lagged behind.

In fact, AI agents and automated decision systems have been “spreading faster than governance models can address their unique needs”. This governance gap means many organizations entered 2026 with AI systems running in production that have no rigorous oversight or documentation, creating risk of errors or ethical lapses. “The early rush to adopt AI prioritized speed over strategy, leaving many organizations with little to show for their investments,” says Ivanti’s Chief Legal Officer, noting that companies are now waking up to the consequences of this lapse.
Those consequences include fragmented, siloed AI projects, inconsistent standards, and “innovation theater” – lots of AI pilot activity with no cohesive strategy or measurable value to the business. Crucially, lack of governance has become a board-level issue by 2026. Corporate directors and investors are asking management: What controls do you have over your AI? Regulators, too, expect to see formal AI risk management and oversight structures. In the U.S., the SEC’s Investor Advisory Committee has even called for enhanced disclosures on how boards oversee AI governance as part of managing cybersecurity risks. This means companies could soon have to report how they govern AI use, similar to how they disclose financial controls or data security practices. The governance gap of the last few years has left many firms playing catch-up. Audit and compliance teams in 2026 are now scrambling to inventory all AI systems in use, set up AI audit trails, and enforce policies (e.g. requiring human review of AI outputs in high-stakes decisions). Responsible AI frameworks that were mostly talk in 2023-24 are (hopefully) becoming operational in 2026. As PwC predicts, “2026 could be the year when companies overcome this challenge and roll out repeatable, rigorous RAI (Responsible AI) practices”. We are likely to see new governance mechanisms take hold: from AI model registers and documentation requirements, to internal AI ethics committees, to tools for automated bias detection and monitoring. The companies that close this governance gap will not only avoid costly missteps but also be better positioned to scale AI in a safe, trusted manner going forward. 5. Speed vs. Readiness: The Deployment-Readiness Gap Widens One striking issue in the AI boom was the widening gap between how fast companies deployed AI and how prepared their organizations were to manage its consequences. 
Many businesses leapt from zero to AI at breakneck speed, but their people, processes, and strategies lagged behind, creating a performance paradox: AI was everywhere, yet tangible business value was often elusive. By the end of 2025, surveys revealed a sobering statistic – up to 95% of enterprise generative AI projects had failed to deliver measurable ROI or P&L impact. In other words, only a small fraction of AI initiatives actually moved the needle for the business. The MIT Media Lab found that “95% of organizations see no measurable returns” from AI in the knowledge sector. This doesn’t mean AI can’t create value; rather, it underscores that most companies weren’t ready to capture value at the pace they deployed AI. The reasons for this deployment-readiness gap are multi-fold: Lack of integration with workflows: Deploying an AI model is one thing; redesigning business processes to exploit that model is another. Many firms “introduced AI without aligning it to legacy processes or training staff,” leading to an initial productivity dip known as the AI productivity paradox. AI outputs appeared impressive in demos, but front-line employees often couldn’t easily incorporate them into daily work, or had to spend extra effort verifying AI results (what some call “AI slop” or low-quality output that creates more work). Skills and culture lag: Companies deployed AI faster than they upskilled their workforce to use and oversee these tools. Employees were either fearful of the new tech or not trained to collaborate with AI systems effectively. As Gartner analyst Deepak Seth noted, “we still don’t understand how to build the team structure where AI is an equal member of the team”. Many organizations lacked AI fluency among staff and managers, resulting in misuse or underutilization of the technology. Scattered, unprioritized efforts: Without a clear AI strategy, some companies spread themselves thin over dozens of AI experiments. 
“Organizations spread their efforts thin, placing small sporadic bets… early wins can mask deeper challenges,” PwC observes. With AI projects popping up everywhere (often bottom-up from enthusiastic employees), leadership struggled to scale the ones that mattered. The absence of a top-down strategy meant many AI projects never translated into enterprise-wide impact. The result of these factors was that by 2025, many businesses had little to show for their flurry of AI activity. As Ivanti’s Brooke Johnson put it, companies found themselves with “underperforming tools, fragmented systems, and wasted budgets” because they moved so fast without a plan. This frustration is now forcing a change in 2026: a shift from “move fast and break things” to “slow down and get it right.” Already, we see leading firms adjusting their approach. Rather than chasing dozens of AI use cases, they are identifying a few high-impact areas and focusing deeply (the “go narrow and deep” approach). They are investing in change management and training so that employees actually adopt the AI tools provided. Importantly, executives are injecting more discipline and oversight into AI initiatives. “There is – rightfully – little patience for ‘exploratory’ AI investments” in 2026, notes PwC; every dollar now needs to “fuel measurable outcomes”, and frivolous pilots are being pruned. In other words, AI has to earn its keep now. The gap between deployment and readiness is closing at companies that treat AI as a strategic transformation (led by senior leadership) rather than a series of tech demos. 
Those still stuck in “innovation theater” will find 2026 a harsh wake-up call – their AI projects will face scrutiny from CFOs and boards asking “What value is this delivering?” Success in 2026 will favor the organizations that balance innovation with preparation, aligning AI projects to business goals, fortifying them with the right processes and talent, and phasing deployments at a pace the organization can absorb. The days of deploying AI for AI’s sake are over; now it’s about sustainable, managed AI that the organization is ready to leverage. 6. Regulatory Reckoning: AI Rules and Enforcement Arrive Regulators have taken notice of the AI free-for-all of recent years, and 2026 marks the start of a more forceful regulatory response worldwide. After a period of policy debate in 2023-2024, governments are now moving from guidelines to enforcement of AI rules. Businesses that ignored AI governance may find themselves facing legal and financial consequences if they don’t adapt quickly. In the European Union, a landmark law – the EU AI Act – is coming into effect in phases. Politically agreed in late 2023 and formally adopted in 2024, this comprehensive regulation imposes requirements based on AI risk levels. Notably, by August 2, 2026, companies deploying AI in the EU must comply with specific transparency rules and controls for “high-risk AI systems.” Non-compliance isn’t an option unless you fancy huge fines – penalties can go up to €35 million or 7% of global annual turnover (whichever is higher) for serious violations. This is a clear signal that the era of voluntary self-regulation is over in the EU. Companies will need to document their AI systems, conduct risk assessments, and ensure human oversight for high-risk applications (e.g. AI in healthcare, finance, HR, etc.), or face hefty enforcement. EU regulators have already begun flexing their muscles. The first set of AI Act provisions kicked in during 2025, and regulators in member states are being appointed to oversee compliance. 
The European Commission is issuing guidance on how to apply these rules in practice. We also see related moves like Italy’s AI law (aligned with the EU Act) and a new Code of Practice on AI-generated content transparency being rolled out. All of this means that by 2026, companies operating in Europe need to have their AI house in order – keeping audit trails, registering certain AI systems in an EU database, providing user disclosures for AI-generated content, and more – or risk investigations and fines. North America is not far behind. While the U.S. hasn’t passed a sweeping federal AI law as of early 2026, state-level regulations and enforcements are picking up speed. For example, Colorado’s AI Act (enacted 2024) takes effect in June 2026, imposing requirements on AI developers and users to avoid algorithmic discrimination, implement risk management programs, and conduct impact assessments for AI involved in important decisions. Several other states (California, New York, Illinois, etc.) have introduced AI laws targeting specific concerns like hiring algorithms or AI outputs that impersonate humans. This patchwork of state rules means companies in the U.S. must navigate compliance carefully or face state attorney general actions. Indeed, 2025 already saw the first signs of AI enforcement in the U.S.: In May 2025, the Pennsylvania Attorney General reached a settlement with a property management company after its use of an AI rental decision tool led to unsafe housing conditions and legal violations. In July 2025, the Massachusetts AG fined a student loan company $2.5 million over allegations that its AI-powered system unfairly delayed or mismanaged student loan relief. These cases are likely the tip of the iceberg – regulators are signaling that companies will be held accountable for harmful outcomes of AI, even using existing consumer protection or anti-discrimination laws. The U.S. 
Federal Trade Commission has also warned it will crack down on deceptive AI practices and data misuse, launching inquiries into chatbot harms and children’s safety in AI apps. Across the Atlantic, the UK is shifting from principles to binding rules as well. After initially favoring a light-touch, pro-innovation stance, the UK government indicated in 2025 that sector regulators will be given explicit powers to enforce AI requirements in areas like data protection, competition, and safety. By 2026, we can expect the UK to introduce more concrete compliance obligations (though likely less prescriptive than the EU’s approach). For business leaders, the message is clear: the regulatory landscape for AI is rapidly solidifying in 2026. Companies need to treat AI compliance with the same seriousness as data privacy (GDPR) or financial reporting. This includes: conducting AI impact assessments, ensuring transparency (e.g. informing users when AI is used), maintaining documentation and audit logs of AI system decisions, and implementing processes to handle AI-related incidents or errors. Those who fail to do so may find regulators making an example of them – and the fines or legal damages will effectively “make them pay” for the lax practices of the past few years. 7. Investor Backlash: Demanding ROI and Accountability It’s not just regulators – investors and shareholders have also lost patience with AI hype. By 2026, the stock market and venture capitalists alike are looking for tangible returns on AI investments, and they are starting to punish companies that over-promised and under-delivered on AI. In 2025, AI was the belle of the ball on Wall Street – AI-heavy tech stocks soared, and nearly every earnings call featured some AI angle. But as 2026 kicks off, analysts are openly asking AI players to “show us the money.” A report summarized the mood with a dating analogy: “In 2025, AI took investors on a really nice first date. In 2026… it’s time to start footing the bill.” 
The grace period for speculative AI spending is ending, and investors expect to see clear ROI or cost savings attributable to AI initiatives. Companies that can’t quantify value may see their valuations marked down. We are already seeing the market sorting AI winners from losers. Tom Essaye of Sevens Report noted in late 2025 that the once “unified enthusiasm” for all things AI had become “fractured”, with investors getting choosier. “The industry is moving into a period where the market is aggressively sorting winners and losers,” he observed. For example, certain chipmakers and cloud providers that directly benefit from AI workloads boomed, while some former software darlings that merely marketed themselves as AI leaders have seen their stocks stumble as investors demand evidence of real AI-driven growth. Even big enterprise software firms like Oracle, which rode the AI buzz, faced more scrutiny as investors asked for immediate ROI from AI efforts. This is a stark change from 2023, when a mere mention of “AI strategy” could boost a company’s stock price. Now, companies must back up the AI story with numbers – whether it’s increased revenue, improved margins, or new customers attributable to AI. Shareholders are also pushing companies on the cost side of AI. Training large AI models and running them at scale is extremely expensive (think skyrocketing cloud bills and GPU purchases). In 2026’s tighter economic climate, boards and investors won’t tolerate open-ended AI spending without a clear business case. We may see some investor activism or tough questioning in annual meetings: e.g., “You spent $100M on AI last year – what did we get for it?” If the answer is ambiguous, expect backlash. Conversely, firms that can articulate and deliver a solid AI payoff will be rewarded with investor confidence. Another aspect of investor scrutiny is corporate governance around AI (as touched on earlier). 
Sophisticated investors worry that companies without proper AI governance may face reputational or legal disasters (which hurt shareholder value). This is why the SEC and investors are calling for board-level oversight of AI. It won’t be surprising if in 2026 some institutional investors start asking companies to conduct third-party audits of their AI systems or to publish AI risk reports, similar to sustainability or ESG reports. Investor sentiment is basically saying: we believe AI can be transformative, but we’ve been through hype cycles before – we want to see prudent management and real returns, not just techno-optimism. In summary, 2026 is the year AI hype meets financial reality. Companies will either begin to reap returns on their AI investments or face tough consequences. Those that treated the past few years as an expensive learning experience must now either capitalize on that learning or potentially write off failed projects. For some, this reckoning could mean stock price corrections or difficulty raising funds if they can’t demonstrate a path to profitability with AI. For others who have sound AI strategies, 2026 could be the year AI finally boosts the bottom line and vindicates their investments. As one LinkedIn commentator quipped, “2026 won’t be defined by hype. It will be defined by accountability – especially by cost and return on investment.” 8. Case Studies: AI Maturity Winners and Losers Real-world examples illustrate how companies are faring as the experimental AI tide goes out. Some organizations are emerging as AI maturity winners – they invested in governance and alignment early, and are now seeing tangible benefits. Others are struggling or learning hard lessons, having to backtrack on rushed AI deployments that didn’t pan out. On the struggling side, a cautionary tale comes from those who sprinted into AI without guardrails. The Samsung incident mentioned earlier is a prime example. 
Eager to boost developer productivity, Samsung’s semiconductor division allowed engineers to use ChatGPT – and within weeks, internal source code and sensitive business plans were inadvertently leaked to the public chatbot. The fallout was swift: Samsung imposed an immediate ban on external AI tools until it could implement proper data security measures. This underscores that even tech-savvy companies can trip up without internal AI policies. Many other firms in 2023-24 faced similar scares (banks like JPMorgan temporarily banned ChatGPT use, for instance), realizing only after a leak or an embarrassing output that they needed to enforce AI usage guidelines and logging. The cost here is mostly reputational and operational – these companies had to pause promising AI applications until they cleaned up procedures, costing them time and momentum. Another “loser” scenario is the media and content companies that embraced AI too quickly. In early 2023, several digital publishers (BuzzFeed, CNET, etc.) experimented with AI-written articles to cut costs. It backfired when readers and experts found factual errors and plagiarism in the AI content, leading to public backlash and corrections. CNET, for example, quietly had to halt its AI content program after significant mistakes were exposed, undermining trust. These cases highlight that rushing AI into customer-facing outputs without rigorous review can damage a brand and erode customer trust – a hard lesson learned. On the flip side, some companies have navigated the AI boom adeptly and are now reaping rewards: Ernst & Young (EY), the global consulting and tax firm, is a showcase of AI at scale with governance. EY early on created an “AI Center of Excellence” and established policies for responsible AI use. The result? By 2025, EY had 30 million AI-enabled processes documented internally and 41,000 AI “agents” in production supporting their workflows. 
One notable agent, EY’s AI-driven tax advisor, provides up-to-date tax law information to employees and clients – an invaluable tool in a field with 100+ regulatory changes per day. Because EY paired AI deployment with training (upskilling thousands of staff) and controls (every AI recommendation in tax gets human sign-off), they have seen efficiency gains without losing quality. EY’s leadership claims these AI tools have significantly boosted productivity in back-office processing and knowledge management, giving them a competitive edge. This success wasn’t accidental; it came from treating AI as a strategic priority and investing in enterprise-wide readiness. DXC Technology, an IT services company, offers another success story through a human-centric AI approach. DXC integrated AI as a “co-pilot” for its cybersecurity analysts. They deployed an AI agent as a junior analyst in their Security Operations Center to handle routine tier-1 tasks (like classifying incoming alerts and documenting findings). The outcome has been impressive: DXC cut investigation times by 67.5% and freed up 224,000 analyst hours in a year. Human analysts now spend those hours on higher-value work such as complex threat hunting, while mundane tasks are efficiently automated. DXC credits this to designing AI to complement (not replace) humans, and giving employees oversight responsibilities to “spot and correct the AI’s mistakes”. Their AI agent operates within a well-monitored workflow, with clear protocols for when to escalate to a human. The success of DXC and EY underscores that when AI is implemented with clear purpose, guardrails, and employee buy-in, it can deliver substantial ROI and risk reduction. In the financial sector, Morgan Stanley gained recognition for its careful yet bold AI integration. The firm partnered with OpenAI to create an internal GPT-4-powered assistant that helps financial advisors sift through research and internal knowledge bases. 
Rather than rushing it out, Morgan Stanley spent months fine-tuning the model on proprietary data and setting up compliance checks. The result was a tool so effective that within months of launch, 98% of Morgan Stanley’s advisor teams were actively using it daily, dramatically improving their productivity in answering client queries. Early reports suggested the firm anticipated over $1 billion in ROI from AI in the first year. Morgan Stanley’s stock even got a boost amid industry buzz that they had cracked the code on enterprise AI value. Their approach – start with a targeted use case (research Q&A), ensure data is clean and permissions are handled, and measure impact – is becoming a template for successful AI rollout in other banks. These examples illustrate a broader point: the “winners” in 2026 are those treating AI as a long-term capability to be built and managed, not a quick fix or gimmick. They invested in governance, employee training, and aligning AI to business strategy. The “losers” rushed in for short-term gains or buzz, only to encounter pitfalls – be it embarrassed executives having to roll back a flawed AI system, or angry customers and regulators on the doorstep. As 2026 unfolds, we’ll likely see more of this divergence. Some companies will quietly scale back AI projects that aren’t delivering (essentially writing off the sunk costs of 2023-25 experiments). Others will double down but with a new seriousness: instituting AI steering committees, hiring Chief AI Officers or similar roles to ensure proper oversight, and demanding that every AI project has clear metrics for success. This period will separate the leaders from the laggards in AI maturity. And as the title suggests, those who led with hype will “pay” – either in cleanup costs or missed opportunities – while those who paired innovation with responsibility will thrive. 9. 
Conclusion: 2026 and Beyond – Accountability, Maturity, and Sustainable AI The year 2026 heralds a new chapter for AI in business – one where accountability and realism trump hype and experimentation. The free ride is over: companies can no longer throw AI at problems without owning the outcomes. The experiments of 2023-2025 are yielding a trove of lessons, and the bill for mistakes and oversights is coming due. Who will pay for those past experiments? In many cases, businesses themselves will pay, by investing heavily now to bolster security, retrofit governance, and refine AI models that were rushed out. Some will pay in more painful ways – through regulatory fines, legal liabilities, or loss of market share to more disciplined competitors. Senior leaders who championed flashy AI initiatives will be held to account for their ROI. Boards will ask tougher questions. Regulators will demand evidence of risk controls. Investors will fund only those AI efforts that demonstrate clear value or at least a credible path to it. Yet, 2026 is not just about reckoning – it’s also about the maturation of AI. This is the year where AI can prove its worth under real-world constraints. With hype dissipating, truly valuable AI innovations will stand out. Companies that invested wisely in AI (and managed its risks) may start to enjoy compounding benefits, from streamlined operations to new revenue streams. We might look back on 2026 as the year AI moved from the “peak of inflated expectations” to the “plateau of productivity,” to borrow Gartner’s hype cycle terms. For general business leaders, the mandate going forward is clear: approach AI with eyes wide open. Embrace the technology – by all indications it will be as transformative as promised in the long run – but do so with a framework for accountability. 
This means instituting proper AI governance, investing in employee skills and change management, monitoring outcomes diligently, and aligning every AI project with strategic business goals (and constraints). It also means being ready to hit pause or pull the plug on AI deployments that pose undue risk or fail to deliver value, no matter how shiny the technology. The reckoning of 2026 is ultimately healthy. It marks the transition from the “move fast and break things” era of AI to a “move smart and build things that last” era. Companies that internalize this shift will not only avoid the costly pitfalls of the past, they will also position themselves to harness AI’s true power sustainably – turning it into a trusted engine of innovation and efficiency within well-defined guardrails. Those that don’t adjust may find themselves paying the price in more ways than one. As we move beyond 2026, one hopes that the lessons of the early 2020s will translate into a new balance: where AI’s incredible potential is pursued with both boldness and responsibility. The year of truth will have served its purpose if it leaves the business world with clearer-eyed optimism – excited about what AI can do, yet keenly aware of what it takes to do it right. 10. From AI Reckoning to Responsible AI Execution For organizations entering this new phase of AI accountability, the challenge is no longer whether to use AI, but how to operationalize it responsibly, securely, and at scale. Turning AI from an experiment into a sustainable business capability requires more than tools – it demands governance, integration, and real-world execution experience. This is where TTMS supports business leaders. Through its AI solutions for business, TTMS helps organizations move beyond pilot projects and hype-driven deployments toward production-ready, enterprise-grade AI systems. 
The focus is on aligning AI with business processes, mitigating technical and security debt, embedding governance and compliance by design, and ensuring that AI investments deliver measurable outcomes. In a year defined by accountability, execution quality is what separates AI leaders from AI casualties. 👉 https://ttms.com/ai-solutions-for-business/ FAQ: AI’s 2026 Reckoning – Key Questions Answered Why is 2026 called the “year of truth” for AI in business? Because many organizations are moving from experimentation to accountability. In 2023-2025, it was easy to launch pilots, buy licenses, and announce “AI initiatives” without proving impact or managing the risks properly. In 2026, boards, investors, customers, and regulators increasingly expect evidence: measurable outcomes, clear ownership, and documented controls. This shift turns AI from a trendy capability into an operational discipline. If AI is embedded in key processes, leaders must answer for errors, bias, security incidents, and financial performance. In practice, “year of truth” means companies will be judged not on how much AI they use, but on how well they govern it and whether it reliably improves business results. What does it mean when people say AI is no longer a competitive advantage? It means access to AI has become widely available, so simply “using AI” doesn’t set a company apart anymore. The differentiator is now execution: how well AI is integrated into real workflows, how consistently it delivers quality, and how safely it operates at scale. Two companies can deploy the same tools, but get very different outcomes depending on their data readiness, process design, and organizational maturity. Leaders who treat AI like infrastructure – with standards, monitoring, and continuous improvement – usually outperform those who treat it like a series of isolated pilots. 
Competitive advantage shifts from the model itself to the surrounding system: governance, change management, and the ability to turn AI outputs into decisions and actions that create value. How can rapid GenAI adoption increase security risk instead of reducing it? GenAI can accelerate delivery, but it can also accelerate mistakes. When teams generate code faster, they may ship more changes, more often, and with less time for reviews or threat modeling. This can increase misconfigurations, insecure patterns, and hidden vulnerabilities that only show up later, when attackers exploit them. GenAI also creates new exposure routes when employees paste sensitive data into external tools, or when AI features are connected to business systems without strong access controls. Over time, these issues accumulate into “security debt” – a growing backlog of risk that becomes expensive to fix under pressure. The core problem isn’t that GenAI is “unsafe by nature”, but that organizations often adopt it faster than they build the controls needed to keep it safe. What should business leaders measure to know whether AI is really working? Leaders should measure outcomes, not activity. Useful metrics depend on the use case, but typically include time-to-completion, error rate, cost per transaction, customer satisfaction, and cycle time from idea to delivery. For AI in software engineering, look at deployment frequency together with stability indicators like incident rate, rollback frequency, and time-to-repair, because speed without reliability is not success. For AI in customer operations, measure resolution rates, escalations to humans, compliance breaches, and rework. It’s also critical to measure adoption and trust: how often employees use the tool, how often they override it, and why. Finally, treat governance as measurable too: do you have audit trails, role-based access, documented model changes, and a clear owner accountable for outcomes? 
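To make the "outcomes, not activity" idea concrete, here is a minimal sketch of how the engineering and trust metrics named above could be computed side by side. The `DeliveryPeriod` record, its field names, and the `summarize` helper are hypothetical and chosen for illustration only; real figures would come from your own deployment, incident, and tool-usage systems.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class DeliveryPeriod:
    """Hypothetical counts for one reporting period (field names are illustrative)."""
    deployments: int
    incidents: int
    rollbacks: int
    repair_minutes: List[float]  # time-to-repair for each incident, in minutes
    ai_suggestions: int          # AI outputs shown to employees
    ai_overrides: int            # AI outputs that employees rejected or changed


def summarize(period: DeliveryPeriod) -> Dict[str, float]:
    """Report speed together with stability and trust, never speed alone."""
    deployments = max(period.deployments, 1)    # guard against division by zero
    suggestions = max(period.ai_suggestions, 1)
    repairs = period.repair_minutes
    return {
        "deployments": float(period.deployments),
        "incident_rate": period.incidents / deployments,
        "rollback_rate": period.rollbacks / deployments,
        "mean_time_to_repair_min": sum(repairs) / len(repairs) if repairs else 0.0,
        "override_rate": period.ai_overrides / suggestions,
    }


if __name__ == "__main__":
    quarter = DeliveryPeriod(
        deployments=40, incidents=4, rollbacks=2,
        repair_minutes=[30.0, 90.0], ai_suggestions=200, ai_overrides=50,
    )
    for metric, value in summarize(quarter).items():
        print(f"{metric}: {value:.2f}")
```

A rising deployment count combined with a rising incident or override rate would suggest that speed is being bought at the expense of reliability or trust, which is the pattern the answer above warns against.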
What does “AI governance” look like in practice for a global organization? AI governance is the set of rules, roles, and controls that make AI predictable, safe, and auditable. In practice, it starts with a clear inventory of where AI is used, what data it touches, and what decisions it influences. It includes policies for acceptable use, risk classification of AI systems, and defined approval steps for high-impact deployments. It also requires ongoing monitoring: quality checks, bias testing where relevant, security testing, and incident response plans when AI outputs cause harm. Governance is not a one-time document – it’s an operating model with accountability, documentation, and continuous improvement. For global firms, governance also means aligning practices across regions and functions while respecting local regulations and business realities, so that AI can scale without chaos.
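As an illustration of the inventory and approval steps described above, the following sketch models a very small AI system register and flags entries with governance gaps. The record fields, the two-level `RiskTier`, and the specific checks are assumptions made for this example, not a standard schema or a regulatory classification.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict, List, Optional


class RiskTier(Enum):
    """Illustrative two-level classification; real schemes are usually finer-grained."""
    LOW = "low"
    HIGH = "high"


@dataclass
class AISystemRecord:
    """One hypothetical entry in an AI inventory."""
    name: str
    owner: Optional[str]               # accountable person or team
    data_touched: List[str]            # data sources the system reads
    decisions_influenced: List[str]    # business decisions it feeds into
    risk_tier: RiskTier
    approved_by: Optional[str] = None  # sign-off for high-impact deployments


def governance_gaps(record: AISystemRecord) -> List[str]:
    """Return the reasons this entry fails the assumed governance checks."""
    gaps = []
    if not record.owner:
        gaps.append("no accountable owner")
    if not record.data_touched:
        gaps.append("data sources not documented")
    if record.risk_tier is RiskTier.HIGH and not record.approved_by:
        gaps.append("high-risk system lacks approval")
    return gaps


def review_inventory(inventory: List[AISystemRecord]) -> Dict[str, List[str]]:
    """Map each system with at least one gap to its list of gaps."""
    report = {}
    for record in inventory:
        gaps = governance_gaps(record)
        if gaps:
            report[record.name] = gaps
    return report


if __name__ == "__main__":
    inventory = [
        AISystemRecord("support-chatbot", "cx-team", ["tickets"], ["reply drafts"], RiskTier.LOW),
        AISystemRecord("loan-scoring", None, [], ["credit approval"], RiskTier.HIGH),
    ]
    print(review_inventory(inventory))
```

Keeping the register as structured data rather than a static document is one way to treat governance as an operating model: the same checks can be re-run whenever a system, its data, or its owner changes.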

Data security in a remote collaboration model – access and abuse risks

IT outsourcing to countries outside the European Union and the United States has long ceased to be something exotic. For many companies, it has become a normal element of day-to-day IT operations. Access to large teams of specialists, the ability to scale quickly, and lower costs have led to centers located in Asia, especially India, taking over a significant part of technology projects delivered for organizations in Europe and North America. Today, these are no longer only auxiliary tasks. Offshore teams are increasingly responsible for maintaining critical systems, working with sensitive data, and handling processes that have a direct impact on business continuity. The greater the scale of such cooperation, the greater the responsibility transferred to external teams. This, in turn, means growing exposure to information security risks. In practice, many of these threats are not visible at the stage of selecting a provider or negotiating the contract. They only become apparent during day-to-day operations, when formal arrangements start to function in the reality of remote work, staff turnover, and limited control over the environment in which data is processed. Importantly, modern security incidents in IT outsourcing are less and less often the result of obvious technological shortcomings. Much more frequently, their source lies in the way work is organized. Broadly granted permissions, simplified access procedures, and processes vulnerable to internal abuse create an environment in which legitimate access becomes the main risk vector. In such a model, the threat shifts from technology to people, operational decisions, and the way access to systems and data is managed. 1. Why the international IT service model changes the cyber risk profile India has for years been one of the main reference points for global IT outsourcing. 
This is primarily due to the scale of the available teams and the level of technical competence, which makes it possible to handle large volumes of similar tasks in an orderly and predictable way. For many international organizations, this means the ability to deliver projects quickly and provide stable operational support without having to expand their own structures. That same scale which provides efficiency also affects how work and access rights are organized. In distributed teams, permissions to systems are often granted broadly and for longer periods so as not to block continuity of operations. User roles are standardized, and access is granted to entire groups, which simplifies team management but at the same time limits precise control over who uses which resources and to what extent. The repeatability of processes additionally means that the way work is performed becomes easy to predict, and some decisions are made automatically. On top of this model comes pressure to meet performance indicators. Response time, number of handled tickets, or system availability become priorities, which in practice leads to simplifying procedures and bypassing part of the control mechanisms. From a security perspective, this means increased risk of access abuse and activities that go beyond real operational needs. Under such conditions, incidents rarely take the form of classic external attacks. Much more often, they are the result of errors, lack of ongoing oversight, or deliberate actions undertaken within permissions that were formally granted in line with procedure. 2. Cyber threats in a distributed model – a broader business context In recent years, digital fraud, phishing, and other forms of abuse have become a global problem affecting organizations regardless of industry or location. More and more often, they are not the result of breaking technical safeguards but of exploiting operational knowledge, established patterns of action, and access to systems. 
In this context, international IT outsourcing should be analyzed without oversimplification and without shifting responsibility onto specific markets. The example of India clearly shows that what really matters is not the physical location of teams, but the common denominator of operational models based on large scale, work according to predefined scripts, and broad access to data. These are the very elements that create an environment in which process repeatability and operational pressure can lead to lower vigilance and the automation of decisions, including those related to security.

In such conditions, the line between a simple operational error and a full-scale security incident becomes very thin. A single event that would have limited impact in another context can quickly escalate into systemic consequences. From a business perspective, this means the need to look at cyber threats in IT outsourcing in a broader way than just through the lens of technology and location, and much more through the way work is organized and access to data is managed.

3. Data access as the main risk vector

In IT outsourcing, the biggest security issues less and less often begin in the code or the application architecture itself. In practice, the starting point is much more often access to data and systems. This is especially true for environments in which many teams work in parallel on the same resources and the scope of granted permissions is broad and difficult to control on an ongoing basis. Under such conditions, it is access management – rather than code quality – that most strongly determines the level of real risk.

The most sensitive areas are those where access to production systems is part of everyday work. This applies to technical support teams, both first and second line, which have direct contact with user data and live environments. A similar situation occurs in quality assurance teams, where tests are often run on data that is very close to production data.
Additionally, there are back-office processes related to customer service, covering financial systems, contact data, and identification information.

In these areas, a single compromised access can have consequences far beyond the original scope of permissions. It can open the way for further privilege escalation, data copying, or leveraging existing trust to carry out effective social engineering attacks against end users. Importantly, such incidents rarely require advanced techniques. They often rely on legitimately granted permissions, insufficient monitoring, and trust mechanisms embedded in everyday operational processes.

3.1 Why technical support (L1/L2) is particularly sensitive

First- and second-line support teams often have broad access to systems – not because it is strictly necessary to perform their tasks, but because granting permissions “in advance” is operationally easier than managing contextual access. In practice, this means that a helpdesk employee may be able to view customer data, reset administrator passwords, or access infrastructure management tools.

Additionally, high turnover in such teams means that offboarding processes are often delayed or incomplete. As a result, a situation may arise in which a former employee still has an active account with permissions to production systems – even though their cooperation with the provider has formally ended.

3.2 QA teams and production data – an underestimated risk

Quality assurance teams often work on copies of production data or on test environments that contain real customer data. Although formally these are “test data”, in practice they may include full sets of personal information, transaction data, or sensitive business data.

The problem is that test environments are rarely subject to the same rigorous oversight as production systems. They often lack mechanisms such as encryption at rest, detailed access logging, or user activity monitoring.
This makes data in QA environments an easier target than data in production systems – while incidents often remain invisible from the client’s perspective.

3.3 Back-office processes – operational knowledge as a weapon

Employees handling back-office administrative processes have not only technical access but also operational knowledge: they know procedures, communication patterns, organizational structures, and how systems work. This makes them potentially effective participants in social engineering attacks – both as victims and, in extreme cases, as conscious or unconscious accomplices in abuse.

Combined with KPI pressure, work according to rigid scripts, and limited awareness of the broader security context, these processes become vulnerable to manipulation, data exfiltration, and incidents based on trust and routine.

4. Security certificates vs. real data protection

Outsourcing providers very often meet formal security requirements and hold the relevant certificates. The problem is that certification does not control how permissions are used in everyday work. In distributed work environments, persistent challenges include high employee turnover, delays in revoking permissions, remote work, and limited monitoring of user activity. As a result, a gap arises between declared compliance and the actual level of data protection.

5. When IT outsourcing increases exposure to cyber threats

Cooperation with an external partner in an IT outsourcing model can increase exposure to cyber threats, but only when the way it is organized does not take real security conditions into account. This applies, among other things, to situations where access to systems is granted permanently and is not subject to regular review. Over time, permissions begin to function independently of the actual scope of duties and are treated as part of the fixed working environment rather than as a conscious operational decision.
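To make the idea of a regular access review more concrete, the following is a minimal sketch in Python rather than a description of any particular tool. The `Grant` record, its fields, the 90-day review window, and the sample accounts are all hypothetical and chosen only for illustration. The sketch flags grants that belong to offboarded users, grants that have expired without being revoked, and permanent grants that have not been reviewed recently.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional


@dataclass
class Grant:
    # Hypothetical record of one permission held by one user.
    user: str
    resource: str
    last_reviewed: date
    expires: Optional[date] = None  # None means the grant is permanent
    user_active: bool = True        # False once the person has left the provider


def grants_needing_attention(grants, today, max_review_age_days=90):
    """Return (grant, reason) pairs for grants that should be reviewed or revoked."""
    findings = []
    cutoff = today - timedelta(days=max_review_age_days)
    for g in grants:
        if not g.user_active:
            findings.append((g, "user offboarded, access still active"))
        elif g.expires is not None and g.expires < today:
            findings.append((g, "grant expired but not revoked"))
        elif g.expires is None and g.last_reviewed < cutoff:
            findings.append((g, "permanent grant not reviewed recently"))
    return findings


# Illustrative data only; the names and dates are invented.
today = date(2025, 6, 1)
grants = [
    Grant("l1-agent-a", "prod-crm", last_reviewed=date(2024, 11, 1)),
    Grant("l2-agent-b", "prod-db", last_reviewed=date(2025, 5, 15)),
    Grant("former-qa-c", "test-env", last_reviewed=date(2025, 5, 1), user_active=False),
]

for grant, reason in grants_needing_attention(grants, today):
    print(f"{grant.user} -> {grant.resource}: {reason}")
```

In a real environment the grant list would come from the identity or access management system in use, and the review window would follow the organization's own policy; the point of the sketch is only that permanence and review age can be checked mechanically instead of being assumed.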
A significant problem is also limited visibility into how data and systems are used on the provider’s side. If user activity monitoring, log analysis, and ongoing operational control are outside the direct oversight of the contracting organization, the ability to detect irregularities early is significantly reduced. Additionally, responsibility for information security is often blurred between the client and the provider, which makes it harder to respond clearly in ambiguous or disputed situations.

Under such conditions, even a single incident can quickly spread to a broader part of the organization. The access of one user or one technical account may be enough as a starting point for privilege escalation and abuses affecting many systems at once. What’s worse, such events are often detected late – only when real operational, financial, or reputational damage has already occurred and the room for maneuver on the organization’s side is already heavily constrained.

6. How companies reduce digital security risk in IT outsourcing

More and more companies are concluding that information security in a remote model cannot be effectively protected solely with classic technical safeguards. Distributed teams, work across multiple time zones, and access to systems from different locations all mean that an approach based only on network perimeter protection is no longer sufficient. As a result, organizations are shifting their attention to where risk most often emerges, namely to the way access to data and systems is managed.

In practice, this means more deliberate restriction of permissions and splitting access into smaller, precisely defined scopes. Users receive only the rights that are necessary to perform specific tasks, rather than full access derived from their role or job title.
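One way to picture task-scoped access, as opposed to access derived from a role, is the short Python sketch below. It is an illustration under stated assumptions, not a reference implementation: the `TaskGrant` structure, the ticket identifier, the resource and action names, and the four-hour validity window are all hypothetical. A request is allowed only if an unexpired grant matches the user, the resource, and the action exactly.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class TaskGrant:
    # Hypothetical grant tied to one task instead of a job title.
    user: str
    resource: str
    action: str            # for example "read" or "reset_password"
    ticket_id: str         # the task that justifies the access
    valid_until: datetime  # access lapses automatically after this time


def is_allowed(grants, user, resource, action, now):
    """Allow a request only if an unexpired grant matches it exactly."""
    return any(
        g.user == user
        and g.resource == resource
        and g.action == action
        and now <= g.valid_until
        for g in grants
    )


# Illustrative data only; the names are invented.
now = datetime(2025, 6, 1, 10, 0)
grants = [
    TaskGrant("l1-agent-a", "customer-profile", "read", "TICKET-1",
              now + timedelta(hours=4)),
]

# Within scope and within the validity window.
print(is_allowed(grants, "l1-agent-a", "customer-profile", "read", now))
# Same user, but a resource and action that no grant covers.
print(is_allowed(grants, "l1-agent-a", "admin-console", "reset_password", now))
# Same request as the first one, but after the grant has lapsed.
print(is_allowed(grants, "l1-agent-a", "customer-profile", "read",
                 now + timedelta(hours=5)))
```

The design choice worth noting is that nothing in the check refers to the user's role: a helpdesk agent gains no access to administrative tools simply by being a helpdesk agent, and every grant expires unless it is deliberately renewed.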
At the same time, the importance of activity monitoring is growing, including observing unusual behavior, repeated deviations from standard working patterns, and attempts to reach resources that are not related to current responsibilities.

An increasingly common approach is also a model based on the absence of implicit trust, known as zero trust. It assumes that every access request should be verified regardless of where the user is located, what role they perform, and from where they work. This is complemented by separating sensitive processes across different teams and regions so that a single access point does not allow full control over the entire process or a complete set of data.

Ultimately, however, what matters most is whether these assumptions actually work in everyday operations. If they remain only written in documents or declared at the policy level, they do not translate into real risk reduction. Only consistent enforcement of rules, regular access reviews, and genuine visibility into user actions make it possible to reduce an organization’s vulnerability to security incidents.

7. Conclusions

IT outsourcing itself is not a threat to an organization’s security. This applies to cooperation with teams in India as well as in other regions of the world. The problem begins when the scale of operations grows faster than awareness of risks related to cybersecurity. In environments where many teams have broad access to data and the pace of work is driven by high operational pressure, even minor gaps in access management or oversight can lead to serious consequences.

From the perspective of globally operating organizations, IT outsourcing should not be treated solely as a way to reduce costs or increase operational efficiency. It is increasingly becoming a component of a broader data security and digital risk management strategy.
In practice, this means the need to consciously design cooperation models, clearly define responsibilities, and implement mechanisms that provide real control over access to systems and data, regardless of where and by whom they are processed.

8. Why it is worth working with TTMS in the area of IT outsourcing

Secure IT outsourcing is not only a matter of technical competence. Equally important is the approach to risk management, access control, and shared responsibility on both sides of the cooperation. TTMS supports globally operating organizations in building outsourcing models that are scalable and efficient while at the same time providing real control over the security of data and systems.

By working with TTMS, companies gain a partner that understands that digital security does not begin at the moment of incident response. It starts much earlier, at the stage of designing processes, roles, and scopes of responsibility. That is why in practice we place strong emphasis on precisely defining access rights, logically segmenting sensitive processes, and ensuring operational transparency that allows clients to continuously understand how their data and systems are being used.

TTMS acts as a global partner that combines experience in building outsourcing teams with a practical approach to cybersecurity and regulatory compliance. Our goal is to create cooperation models that support business growth instead of generating hidden operational risks. If IT outsourcing is to be a stable foundation for growth, the key factor becomes choosing a partner for whom data security is an integral part of daily work, not an add-on to the service offering.

We invite you to contact TTMS to discuss an IT outsourcing model tailored to your real business needs and digital security challenges.

Does IT outsourcing to third countries increase the risk of data misuse?
IT outsourcing can increase the risk of data misuse if an organization loses real control over system access and how it is used. The location of the team, for example in India, is not the deciding factor in the level of risk. What matters most is how permissions are granted, how user activity is monitored, and whether operational oversight is ongoing. In practice, a well-designed collaboration model can be more secure than local teams operating without clear access control rules.

Why are social engineering threats significant in IT outsourcing?

Social engineering threats play a major role in IT outsourcing because many incidents are not based on technical vulnerabilities in systems. Far more often, they exploit legitimate access, knowledge of procedures, and the predictability of operational processes. Repetitive working patterns and high pressure for efficiency make employees susceptible to manipulation. Under such conditions, an attack does not need to look like a break-in to be effective.

Which areas of IT outsourcing are most vulnerable to digital threats?

The greatest risk concerns areas where access to systems and data is essential for daily work. These include technical support teams, particularly first and second line, which have contact with production systems and user data. Quality assurance teams are also highly exposed, because their test environments often use data very similar to production data. Administrative processes related to customer service also remain a significant risk point.

Are security certificates enough to protect data?

Security certificates are an important element in building trust and confirming compliance with specific standards. However, they do not replace day-to-day operational practice. Real data security depends on how access is granted, how user activity is monitored, and whether the organization has ongoing visibility into what is happening in the systems.
Without these elements, certificates remain a formal safeguard that does not always protect against real incidents.

How can digital security risk be reduced in IT outsourcing?

Risk reduction begins with conscious management of access to data and systems. This includes both permission segmentation and regular reviews of who is using resources and to what extent. Continuous activity monitoring and clear assignment of responsibility between client and supplier are also crucial. Increasingly, organizations are also implementing an approach based on zero trust, which assumes verification of every access regardless of user location and role.
