Microsoft’s In-House AI Move: MAI-1 and MAI-Voice-1 Signal a Shift from OpenAI

August 2025 – Microsoft has unveiled two internally developed AI models – MAI-1 (a new large language model) and MAI-Voice-1 (a speech generation model) – marking a strategic pivot toward technological independence from OpenAI. After years of leaning on OpenAI’s models (and investing around $13 billion in that partnership since 2019), Microsoft’s AI division is now striking out on its own with homegrown AI capabilities. This move signals that despite its deep ties to OpenAI, Microsoft is positioning itself to have more direct control over the AI technology powering its products – a development with big implications for the industry.

A Strategic Pivot Away from OpenAI

Microsoft’s announcement of MAI-1 and MAI-Voice-1 – made in late August 2025 – is widely seen as a bid for greater self-reliance in AI. Industry observers note that this “proprietary” turn represents a pivot away from dependence on OpenAI. For years, OpenAI’s GPT-series models (like GPT-4) have been the brains behind many Microsoft products (from Azure OpenAI services to GitHub Copilot and Bing’s chat). However, tensions have emerged in the collaboration. OpenAI has grown into a more independent (and highly valued) entity, and Microsoft reportedly “openly criticized” OpenAI’s GPT-4 as “too expensive and slow” for certain consumer needs. Microsoft even quietly began testing other AI models for its Copilot services, signaling concern about over-reliance on a single partner.

In early 2024, Microsoft hired Mustafa Suleyman (co-founder of DeepMind and former Inflection AI CEO) to lead a new internal AI team – a clear sign it intended to develop its own models. Suleyman has since emphasized “optionality” in Microsoft’s AI strategy: the company will use the best models available – whether from OpenAI, open-source, or its own lab – routing tasks to whichever model is most capable. The launch of MAI-1 and MAI-Voice-1 puts substance behind that strategy. It gives Microsoft a viable in-house alternative to OpenAI’s tech, even as the two remain partners. In fact, Microsoft’s AI leadership describes these models as augmenting (not immediately replacing) OpenAI’s – for now. But the long-term trajectory is evident: Microsoft is preparing for a post-OpenAI future in which it isn’t beholden to an external supplier for core AI innovations. As one Computerworld analysis put it, Microsoft didn’t hire a visionary AI team “simply to augment someone else’s product” – it’s laying groundwork to eventually have its own AI foundation.

Meet MAI-1 and MAI-Voice-1: Microsoft’s New AI Models

MAI-Voice-1 is Microsoft’s first high-performance speech generation model. The company says it can generate a full minute of natural-sounding audio in under one second on a single GPU, making it “one of the most efficient speech systems” available. In practical terms, MAI-Voice-1 gives Microsoft a fast, expressive text-to-speech engine under its own roof. It’s already powering user-facing features: for example, the new Copilot Daily service has an AI news host that reads top stories to users in a natural voice, and a Copilot Podcasts feature can create on-the-fly podcast dialogues from text prompts – both driven by MAI-Voice-1’s capabilities. Microsoft touts the model’s high fidelity and expressiveness across single- and multi-speaker scenarios. In an era where voice interfaces are rising, Microsoft clearly views this as strategic tech (the company even said “voice is the interface of the future” for AI companions). OpenAI’s best-known audio model is Whisper, which handles speech-to-text transcription, although OpenAI also offers text-to-speech through its API and ChatGPT’s voice features. With MAI-Voice-1, Microsoft now has its own engine for speaking to users with human-like intonation and speed, rather than relying on a partner’s model.
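To put the speed claim in perspective, speech systems are often described by their real-time factor (RTF): compute time divided by the duration of audio produced, where values below 1.0 mean faster than real time. The minimal Python sketch below applies that ratio to Microsoft's stated figure, treating "under one second" as a one-second upper bound. The function names are illustrative only and are not part of any Microsoft API.

```python
def real_time_factor(generation_seconds: float, audio_seconds: float) -> float:
    """Compute time divided by audio duration; below 1.0 means faster than real time."""
    if generation_seconds <= 0 or audio_seconds <= 0:
        raise ValueError("both durations must be positive")
    return generation_seconds / audio_seconds


def speedup_over_real_time(generation_seconds: float, audio_seconds: float) -> float:
    """Seconds of audio produced per second of compute (the reciprocal of RTF)."""
    return 1.0 / real_time_factor(generation_seconds, audio_seconds)


# Microsoft's stated figure: 60 s of audio in under 1 s on one GPU.
# Using 1.0 s as an upper bound on generation time gives an RTF of at most 1/60.
rtf = real_time_factor(generation_seconds=1.0, audio_seconds=60.0)
speedup = speedup_over_real_time(generation_seconds=1.0, audio_seconds=60.0)
print(f"RTF <= {rtf:.4f}, i.e. at least {speedup:.0f}x faster than real time")
```

Because the stated generation time is an upper bound, the true speedup is at least 60x; a model that merely kept pace with playback would have an RTF of exactly 1.0.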

MAI-1 (Preview) is Microsoft’s new large language model (LLM) for text, and it represents the company’s first internally trained foundation model. Under the hood, MAI-1 uses a mixture-of-experts architecture and was trained (and post-trained) on roughly 15,000 NVIDIA H100 GPUs. (For context, that is a substantial computing effort, though still more modest than the 100,000+ GPU clusters reportedly used to train some rival frontier models.) The model is designed to excel at instruction-following and helpful responses to everyday queries – essentially, the kind of general-purpose assistant tasks that GPT-4 and similar models handle. Microsoft has begun publicly testing MAI-1 in the wild: it was released as MAI-1-preview on LMArena, a community benchmarking platform where AI models can be compared head-to-head by users. This allows Microsoft to transparently gauge MAI-1’s performance against other AI models (competitors and open models alike) and iterate quickly. According to Microsoft, MAI-1 offers “a glimpse of future offerings inside Copilot” – and the company is rolling it out selectively into Copilot (Microsoft’s AI assistant suite across Windows, Office, and more) for tasks like text generation. In the coming weeks, certain Copilot features will start using MAI-1 to handle user queries, with Microsoft collecting feedback to improve the model. In short, MAI-1 is not yet replacing OpenAI’s GPT-4 within Microsoft’s products, but it’s on a path to eventually play a major role. It gives Microsoft the ability to tailor and optimize an LLM specifically for its ecosystem of “Copilot” assistants.
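LMArena turns crowd votes on anonymous head-to-head comparisons into a leaderboard. Its actual methodology is more involved than what follows (it fits a statistical model over all collected votes), but a simplified online Elo update, sketched below in Python, shows the basic idea of how pairwise preferences become ratings. The starting ratings and the K-factor of 32 are illustrative assumptions, not LMArena settings.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Probability that model A is preferred over model B under the Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))


def elo_update(rating_a: float, rating_b: float, score_a: float, k: float = 32.0):
    """Update both ratings after one user vote.

    score_a is 1.0 if the user preferred A, 0.0 if they preferred B, 0.5 for a tie.
    """
    expected_a = expected_score(rating_a, rating_b)
    delta = k * (score_a - expected_a)
    # Zero-sum update: whatever A gains, B loses.
    return rating_a + delta, rating_b - delta


# Hypothetical example: two equally rated models, and the user prefers model A.
new_a, new_b = elo_update(1000.0, 1000.0, score_a=1.0)
print(new_a, new_b)  # prints 1016.0 984.0
```

An upset (a lower-rated model winning) moves ratings more than an expected win, which is why many votes are needed before a new entrant such as MAI-1-preview settles into a stable position.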

How do these models stack up against OpenAI’s? In terms of capabilities, OpenAI’s GPT-4 (and the newly released GPT-5) still set the bar in many domains, from advanced reasoning to code generation. Microsoft’s MAI-1 is a first-generation effort by comparison, and Microsoft itself acknowledges it is taking an “off-frontier” approach – aiming to be a close second rather than the absolute cutting edge. “It’s cheaper to give a specific answer once you’ve waited for the frontier to go first… that’s our strategy, to play a very tight second,” Suleyman said of Microsoft’s model efforts. The architectures are harder to compare directly: OpenAI has disclosed little about GPT-4’s internals beyond describing it as a transformer-based model trained with massive compute. Microsoft, by contrast, has stated that MAI-1 uses a mixture-of-experts design, in which a learned router sends each token to a small subset of “expert” sub-networks, so only a fraction of the model’s parameters do work for any given input. That sparsity, plus the somewhat smaller training footprint, suggests Microsoft may be aiming for a more efficient, cost-effective model – even if it’s not (yet) the absolute strongest model on the market. Indeed, one motivation for MAI-1 was likely cost/control: Microsoft found that using GPT-4 at scale was expensive and sometimes slow, impeding consumer-facing uses. By owning a model, Microsoft can optimize it for latency and cost on its own infrastructure.
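The mixture-of-experts idea can be illustrated with a toy top-k routing layer. Microsoft has not disclosed MAI-1's number of experts, routing scheme, or layer sizes, so everything below is an assumption chosen for readability: 8 tiny experts, 2 active per token, random weights, and plain Python lists instead of GPU tensors. Real MoE layers use trained weights and much larger feed-forward experts, but the control flow (score every expert, run only the top k, blend their outputs) is the same in spirit.

```python
import math
import random


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def make_expert(dim, rng):
    """A toy expert: one random linear layer plus ReLU (real experts are larger MLPs)."""
    w = [[rng.gauss(0.0, 0.1) for _ in range(dim)] for _ in range(dim)]

    def expert(x):
        return [max(0.0, sum(wij * xj for wij, xj in zip(row, x))) for row in w]

    return expert


class TopKMoE:
    """Mixture-of-experts layer that runs only the k highest-scoring experts per token."""

    def __init__(self, dim, num_experts, k, seed=0):
        rng = random.Random(seed)
        self.k = k
        self.experts = [make_expert(dim, rng) for _ in range(num_experts)]
        # Router (gating network): produces one score per expert for a token vector.
        self.gate = [[rng.gauss(0.0, 0.1) for _ in range(dim)] for _ in range(num_experts)]
        self.expert_calls = [0] * num_experts  # counts how often each expert actually ran

    def __call__(self, token):
        scores = [sum(g * t for g, t in zip(row, token)) for row in self.gate]
        top = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[: self.k]
        weights = softmax([scores[i] for i in top])  # renormalize over the chosen experts
        out = [0.0] * len(token)
        for weight, i in zip(weights, top):
            self.expert_calls[i] += 1
            y = self.experts[i](token)
            out = [o + weight * yi for o, yi in zip(out, y)]
        return out


# Hypothetical sizes: 8 experts, 2 active per token.
layer = TopKMoE(dim=4, num_experts=8, k=2)
tokens = [[0.5, -1.0, 0.25, 2.0], [1.0, 0.0, -0.5, 0.3], [-0.2, 0.7, 0.9, -1.1]]
outputs = [layer(t) for t in tokens]
print(layer.expert_calls)  # only 2 of the 8 experts ran for each token
```

The efficiency argument follows from the loop: compute per token scales with k, not with the total number of experts, so a model can hold many parameters while paying for only a few of them on each step.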

On the voice side, OpenAI’s Whisper model handles speech recognition (transcribing audio to text), whereas Microsoft’s MAI-Voice-1 is all about speech generation (producing spoken audio from text). This gives Microsoft an in-house generative voice model for its AI companions, alongside the neural text-to-speech it already offers developers through Azure AI Speech. MAI-Voice-1’s standout feature is its speed and efficiency (generating audio far faster than real time), which is crucial for interactive voice assistants or reading long content aloud. The quality is described as high fidelity and expressive, aiming to surpass the often monotone or robotic outputs of older-generation TTS systems. In essence, Microsoft is assembling its own full-stack AI toolkit: MAI-1 for text intelligence, and MAI-Voice-1 for spoken interaction. These will inevitably be compared to OpenAI’s GPT-4 (text) and the various voice AI offerings in the market – but Microsoft now has the advantage of deeply integrating these models into its products and tuning them as it sees fit.

Implications for Control, Data, and Compliance

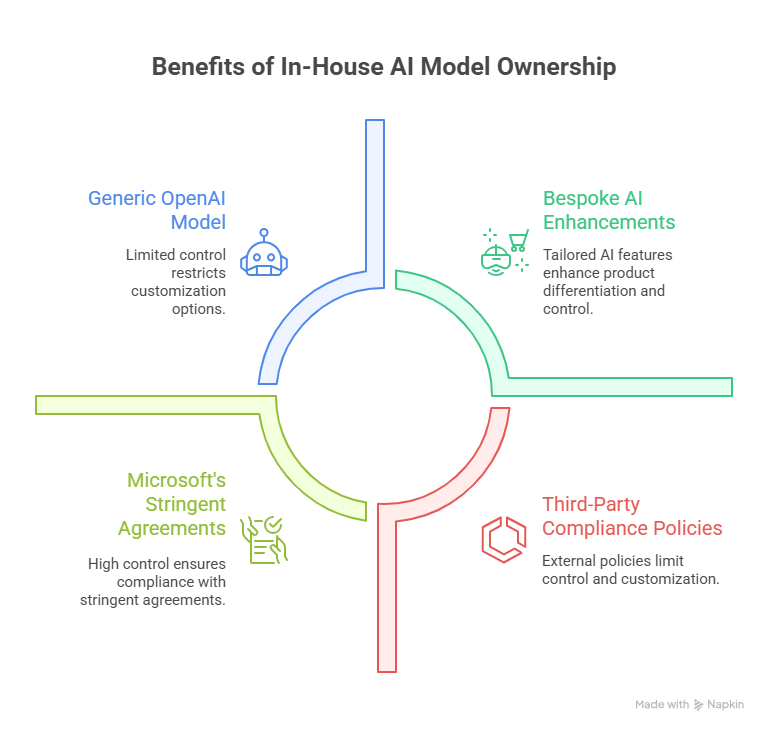

Beyond technical specs, Microsoft’s in-house AI push is about control – over the technology’s evolution, data, and alignment with company goals. By developing its own models, Microsoft gains a level of ownership that was impossible when it solely depended on OpenAI’s API. As one industry briefing noted, “Owning the model means owning the data pipeline, compliance approach, and product roadmap.” In other words, Microsoft can now decide how and where data flows in the AI system, set its own rules for governance and regulatory compliance, and evolve the AI functionality according to its own product timeline, not someone else’s. This has several tangible implications:

- Data governance and privacy: With an in-house model, sensitive user data can be processed within Microsoft’s own cloud boundaries, rather than being sent to an external provider. Enterprises using Microsoft’s AI services may take comfort that their data is handled under Microsoft’s stringent enterprise agreements, without third-party exposure. Microsoft can also more easily audit and document how data is used to train or prompt the model, aiding compliance with data protection regulations. This is especially relevant as new AI laws (like the EU’s AI Act) demand transparency and risk controls – having the AI “in-house” could simplify compliance reporting since Microsoft has end-to-end visibility into the model’s operation.

- Product customization and differentiation: Microsoft’s products can now get bespoke AI enhancements that a generic OpenAI model might not offer. Because Microsoft controls MAI-1’s training and tuning, it can infuse the model with proprietary knowledge (for example, training on Windows user support data to make a better helpdesk assistant) or optimize it for specific scenarios that matter to its customers. The Copilot suite can evolve with features that leverage unique model capabilities Microsoft builds (for instance, deeper integration with Microsoft 365 data or fine-tuned industry versions of the model for enterprise customers). This flexibility in shaping the roadmap is a competitive differentiator – Microsoft isn’t limited by OpenAI’s release schedule or feature set. As Launch Consulting emphasized to enterprise leaders, relying on off-the-shelf AI means your capabilities are roughly the same as your competitors’; owning the model opens the door to unique features and faster iterations.

- Compliance and risk management: By controlling the AI models, Microsoft can more directly enforce compliance with ethical AI guidelines and industry regulations. It can build in whatever content filters or guardrails it deems necessary (and adjust them promptly as laws change or issues arise), rather than being subject to a third party’s policies. For enterprises in regulated sectors (finance, healthcare, government), this control is vital – they need to ensure AI systems comply with sector-specific rules. Microsoft’s move could eventually allow it to offer versions of its AI that are certified for compliance, since it has full oversight. Moreover, any concerns about how AI decisions are made (transparency, bias mitigation, etc.) can be addressed by Microsoft’s own AI safety teams, potentially in a more customized way than OpenAI’s one-size-fits-all approach. In short, Microsoft owning the AI stack could translate to greater trust and reliability for enterprise customers who must answer to regulators and risk officers.
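One practical consequence of owning the serving stack is that output rules can live in versioned configuration rather than in the model itself, so they can be tightened as regulations change without retraining. The Python sketch below is a hypothetical illustration of that pattern, not a description of Microsoft's safety systems: the `GuardrailPolicy` fields, the keyword-based topic check, and the simplified email regex are all assumptions, and production guardrails typically rely on trained classifiers rather than substring matching.

```python
import re
from dataclasses import dataclass

# Simplified email pattern for illustration; real PII detection is far more thorough.
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


@dataclass(frozen=True)
class GuardrailPolicy:
    """Hypothetical output policy kept as versioned configuration, not model weights."""
    version: str
    blocked_topics: tuple = ()
    redact_emails: bool = True


def apply_guardrails(model_output: str, policy: GuardrailPolicy) -> str:
    """Withhold outputs that touch blocked topics; otherwise optionally redact emails."""
    lowered = model_output.lower()
    for topic in policy.blocked_topics:
        if topic.lower() in lowered:
            return f"[response withheld under policy {policy.version}]"
    if policy.redact_emails:
        return EMAIL_PATTERN.sub("[email redacted]", model_output)
    return model_output


# Tightening the rules means shipping a new policy version, not retraining the model.
policy_v1 = GuardrailPolicy(version="v1")
policy_v2 = GuardrailPolicy(version="v2", blocked_topics=("account password",))

reply = "Contact jane.doe@example.com to reset your account password."
print(apply_guardrails(reply, policy_v1))
print(apply_guardrails(reply, policy_v2))
```

Recording the policy version alongside each response also gives auditors a simple trail of which rules were in force when a given answer was produced.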

It’s worth noting that Microsoft is initially applying MAI-1 and MAI-Voice-1 in consumer-facing contexts (Windows, Office 365 Copilot for end-users) and not immediately replacing the AI inside enterprise products. Suleyman himself commented that the first goal was to make something that works extremely well for consumers – leveraging Microsoft’s rich consumer telemetry and data – essentially using the broad consumer usage to train and refine the models. However, the implications for enterprise clients are on the horizon. We can expect that as these models mature, Microsoft will integrate them into its Azure AI offerings and enterprise Copilot products, offering clients the option of Microsoft’s “first-party” models in addition to OpenAI’s. For enterprise decision-makers, Microsoft’s pivot sends a clear message: AI is becoming core intellectual property, and owning or selectively controlling that IP can confer advantages in data governance, customization, and compliance that might be hard to achieve with third-party AI alone.

Build Your Own or Buy? Lessons for Businesses

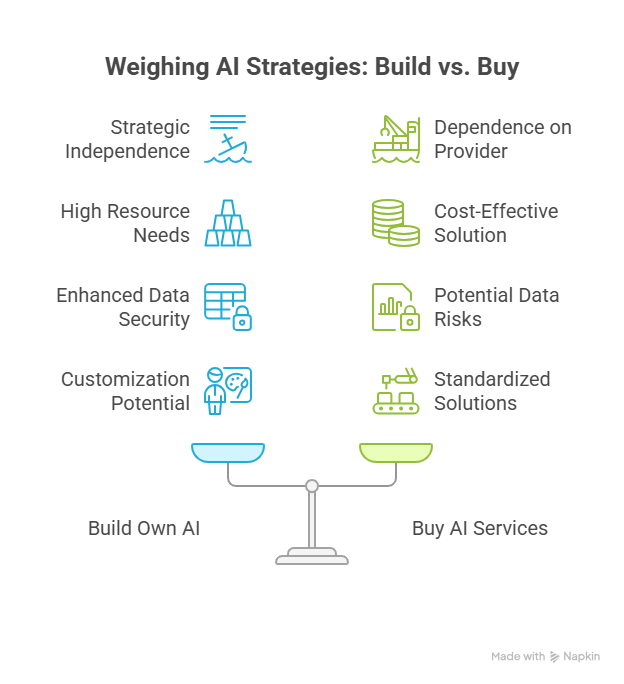

Microsoft’s bold move raises a key question for other companies: Should you develop your own AI models, or continue relying on foundation models from providers like OpenAI or Anthropic? The answer will differ for each organization, but Microsoft’s experience offers some valuable considerations for any business crafting its AI strategy:

- Strategic control vs. dependence: Microsoft’s case illustrates the risk of over-dependence on an external AI provider. Despite a close partnership, Microsoft and OpenAI had diverging interests (even reportedly clashing over what Microsoft gets out of its big investment). If an AI capability is mission-critical to your business or product, relying solely on an outside vendor means your fate is tied to their decisions, pricing, and roadmap changes. Building your own model (or acquiring the talent to do so) gives you strategic independence. You can prioritize the features and values important to you without negotiating with a third party. However, it also means shouldering all the responsibility for keeping that model state-of-the-art.

- Resources and expertise required: On the flip side, few companies have the deep pockets and AI research muscle that Microsoft does. Training cutting-edge models is extremely expensive – Microsoft’s MAI-1 used 15,000 high-end GPUs just for its preview model, and the leading frontier models use even larger compute budgets. Beyond hardware, you need scarce AI research talent and large-scale data to train a competitive model. For most enterprises, it’s simply not feasible to replicate what OpenAI, Google, or Microsoft are doing at the very high end. If you don’t have the scale to invest tens of millions (or more likely, hundreds of millions) of dollars in AI R&D, leveraging a pre-built foundation model might yield a far better ROI. Essentially, build if AI is a core differentiator you can substantially improve – but buy if AI is a means to an end and others can provide it more cheaply.

- Privacy, security, and compliance needs: A major driver for some companies to consider “rolling their own” AI is data sensitivity and compliance. If you operate in a field with strict data governance (say, patient health data, or confidential financial info), sending data to a third-party AI API – even with promises of privacy – might be a non-starter. An in-house model that you can deploy in a secure environment (or at least a model from a vendor willing to isolate your data) could be worth the investment. Microsoft’s move is an example of prioritizing data control: by handling AI internally, they keep the whole data pipeline under their policies. Other firms, too, may decide that owning the model (or using an open-source model locally) is the safer path for compliance. That said, many AI providers are addressing this by offering on-premises or dedicated instances – so explore those options as well.

- Need for customization and differentiation: If the available off-the-shelf AI models don’t meet your specific needs or if using the same model as everyone else diminishes your competitive edge, building your own can be attractive. Microsoft clearly wanted AI tuned for its Copilot use cases and product ecosystem – something it can do more freely with in-house models. Likewise, other companies might have domain-specific data or use cases (e.g. a legal AI assistant, or an industrial AI for engineering data) where a general model underperforms. In such cases, investing in a proprietary model or at least a fine-tuned version of an open-source model could yield superior results for your niche. BloombergGPT is one example: Bloomberg trained this finance-domain LLM on a mix of its own financial data and general-purpose text to get better finance-specific performance than generic models offered. Such efforts hint that if your data or use case is unique enough, a custom model can provide real differentiation.

- Hybrid approaches – combine the best of both: Importantly, choosing “build” versus “buy” isn’t all-or-nothing. Microsoft itself is not abandoning OpenAI entirely; the company says it will “continue to use the very best models from [its] team, [its] partners, and the latest innovations from the open-source community” to power different features. In practice, Microsoft is adopting a hybrid model – using its own AI where it adds value, but also orchestrating third-party models where they excel, thereby delivering the best outcomes across millions of interactions. Other enterprises can adopt a similar strategy. For example, you might use a general model like OpenAI’s for most tasks, but switch to a privately fine-tuned model when handling proprietary data or domain-specific queries. There are even emerging tools to help route requests to different models dynamically (the way Microsoft’s “orchestrator” does). This approach allows you to leverage the immense investment big AI providers have made, while still maintaining options to plug in your own specialty models for particular needs.
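The hybrid pattern described in this list can be sketched as a small, policy-based router. Microsoft has not published how its own orchestrator makes decisions; the model names, prices, and rules below are hypothetical placeholders, and a production router would likely also weigh measured quality, latency, and rate limits.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelOption:
    name: str
    cost_per_1k_tokens: float         # hypothetical price, used only for ranking
    handles_sensitive_data: bool      # e.g. deployed inside your own environment
    domains: frozenset = frozenset()  # specialty domains; empty means general-purpose


@dataclass(frozen=True)
class Request:
    text: str
    sensitive: bool
    domain: str = "general"


def route(request: Request, options: list) -> ModelOption:
    """Pick the cheapest model that satisfies data-handling and domain requirements."""
    # 1. Compliance first: sensitive data may only go to models cleared for it.
    eligible = [m for m in options if m.handles_sensitive_data or not request.sensitive]
    if not eligible:
        raise LookupError("no model is cleared for this request")
    # 2. Prefer a specialist for the domain, then a generalist, then anything eligible.
    specialists = [m for m in eligible if request.domain in m.domains]
    generalists = [m for m in eligible if not m.domains]
    pool = specialists or generalists or eligible
    # 3. Among acceptable models, minimize cost.
    return min(pool, key=lambda m: m.cost_per_1k_tokens)


vendor = ModelOption("vendor-general-model", 0.5, handles_sensitive_data=False)
private = ModelOption("private-finetuned-model", 1.2, True, frozenset({"legal", "finance"}))
catalog = [vendor, private]

print(route(Request("Draft a product announcement", sensitive=False), catalog).name)
print(route(Request("Review this contract clause", sensitive=False, domain="legal"), catalog).name)
print(route(Request("Summarize this patient record", sensitive=True), catalog).name)
```

Ordering the checks this way means compliance constraints filter the candidates before cost is considered, so a cheaper vendor model can never receive data it is not cleared to handle.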

Bottom line: Microsoft’s foray into building MAI-1 and MAI-Voice-1 underscores that AI has become a strategic asset worth investing in – but it also demonstrates the importance of balancing innovation with practical business needs. Companies should re-evaluate their build-vs-buy AI strategy, especially if control, privacy, or differentiation are key drivers. Not every organization will choose to build a giant AI model from scratch (and most shouldn’t). Yet every organization should consider how dependent it wants to be on external AI providers and whether owning certain AI capabilities could unlock more value or mitigate risks. Microsoft’s example shows that with sufficient scale and strategic need, developing one’s own AI is not only possible but potentially transformative. For others, the lesson may be to negotiate harder on data and compliance terms with AI vendors, or to invest in smaller-scale bespoke models that complement the big players.

In the end, Microsoft’s announcement is a landmark in the AI landscape: a reminder that the AI ecosystem is evolving from a few foundation-model providers toward a more heterogeneous field. For business leaders, it’s a prompt to think of AI not just as a service you consume, but as a capability you cultivate. Whether that means training your own models, fine-tuning open-source ones, or smartly leveraging vendor models, the goal is the same – align your AI strategy with your business’s unique needs for agility, trust, and competitive advantage in the AI era.

Supporting Your AI Journey: Full-Spectrum AI Solutions from TTMS

As the AI ecosystem evolves, TTMS offers AI Solutions for Business – a comprehensive service line that guides organizations through every stage of their AI strategy, from deploying pre-built models to developing proprietary ones. Whether you’re integrating AI into existing workflows, automating document-heavy processes, or building large-scale language or voice models, TTMS has capabilities to support you. For law firms, our AI4Legal specialization helps automate repetitive tasks like contract drafting, court transcript analysis, and document summarization, all while maintaining data security and compliance. For customer-facing and sales-driven sectors, our Salesforce AI Integration service embeds generative AI, predictive insights, and automation directly into your CRM, helping improve user experience, reduce manual workload, and maintain control over data. If Microsoft’s move to build its own models signals one thing, it’s this: the future belongs to organizations that can both buy and build intelligently – and TTMS is ready to partner with you on that path.

Why is Microsoft creating its own AI models when it already partners with OpenAI?

Microsoft values the access it has to OpenAI’s cutting-edge models, but building MAI-1 and MAI-Voice-1 internally gives it more control over costs, product integration, and regulatory compliance. By owning the technology, Microsoft can optimize for speed and efficiency, protect sensitive data within its own infrastructure, and develop features tailored specifically to its ecosystem. This reduces dependence on a single provider and strengthens Microsoft’s long-term strategic position.

How do Microsoft’s MAI-1 and MAI-Voice-1 compare with OpenAI’s models?

MAI-1 is a large language model designed to rival GPT-4 in text-based tasks, but Microsoft emphasizes efficiency and integration rather than pushing absolute frontier performance. MAI-Voice-1 focuses on ultra-fast, natural-sounding speech generation, which complements OpenAI’s Whisper (speech-to-text) rather than duplicating it. While OpenAI still leads in some benchmarks, Microsoft’s models give it flexibility to innovate and align development closely with its own products.

What are the risks for businesses in relying solely on third-party AI providers?

Total dependence on external AI vendors creates exposure to pricing changes, roadmap shifts, or availability issues outside a company’s control. It can also complicate compliance when sensitive data must flow through a third party’s systems. Businesses risk losing differentiation if they rely on the same model that competitors use. Microsoft’s decision highlights these risks and shows why strategic independence in AI can be valuable.

What lessons can other enterprises take from Microsoft’s pivot?

Not every company can afford to train a model on thousands of GPUs, but the principle is scalable. Organizations should assess which AI capabilities are core to their competitive advantage and consider building or fine-tuning models in those areas. For most, a hybrid approach – combining foundation models from providers with domain-specific custom models – strikes the right balance between speed, cost, and control. Microsoft demonstrates that owning at least part of the AI stack can pay dividends in trust, compliance, and differentiation.

Will Microsoft continue to use OpenAI’s technology after launching its own models?

Yes. Microsoft has been clear that it will use the best model for the task, whether from OpenAI, the open-source community, or its internal MAI family. The launch of MAI-1 and MAI-Voice-1 doesn’t replace OpenAI overnight; it creates options. This “multi-model” strategy allows Microsoft to route workloads dynamically, ensuring it can balance performance, cost, and compliance. For business leaders, it’s a reminder that AI strategies don’t need to be all-or-nothing – flexibility is a strength.