Deepfake Detection Breakthrough: Universal Detector Achieves 98% Accuracy

Imagine waking up to a viral video of your company’s CEO making outrageous claims – except it never happened. This nightmare scenario is becoming all too real as deepfakes (AI-generated fake videos or audio) grow more convincing. In response, researchers have unveiled a new universal deepfake detector that can spot synthetic videos with an unprecedented 98% accuracy. The development couldn’t be more timely, as businesses seek ways to protect their brand reputation and trust in an era when seeing is no longer believing.

A powerful new AI tool can analyze videos and detect subtle signs of manipulation, helping companies distinguish real footage from deepfakes. The latest “universal” detector works across content types, flagging both manipulated video and AI-generated audio with high reported accuracy. It marks a significant advance in the fight against AI-driven disinformation.

What is the 98% Accurate Universal Deepfake Detector and How Does It Work?

The newly announced deepfake detector is an AI-driven system designed to identify fake video and audio content across virtually any platform. Developed by a team of researchers (reportedly at UC San Diego) and announced in August 2025, it represents a major leap forward in deepfake detection technology. Unlike earlier tools that were limited to specific deepfake formats, this “universal” detector works on both AI-generated speech and manipulated video footage. In other words, the same solution can catch both a lip-synced synthetic video of an executive and an impersonated voice recording.

Under the hood, the detector uses advanced machine learning techniques to sniff out the subtle “fingerprints” that generative AI leaves on fake content. When an image or video is created by AI rather than a real camera, there are tiny irregularities at the pixel level and in motion patterns that human eyes can’t easily see. The detector’s neural network has been trained to recognize these anomalies at the sub-pixel scale. For example, real videos have natural color correlations and noise characteristics from camera sensors, whereas AI-generated frames might have telltale inconsistencies in texture or lighting. By focusing on these hidden markers, the system can discern AI fakery without relying on obvious errors.
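To make the idea of a sensor “fingerprint” more concrete, here is a toy Python sketch. It is an illustration we chose, not the researchers’ code. It subtracts each pixel’s local 3x3 average to leave a high-frequency noise residual, which is the kind of signal forensic models learn from. The function names `noise_residual` and `residual_energy` are hypothetical, and a real detector learns far richer features than a single variance.

```python
from statistics import pvariance

def noise_residual(img):
    """Subtract each interior pixel's 3x3 local mean, keeping only high-frequency detail."""
    h, w = len(img), len(img[0])
    residual = []
    for y in range(1, h - 1):
        row = []
        for x in range(1, w - 1):
            window = [img[y + dy][x + dx] for dy in (-1, 0, 1) for dx in (-1, 0, 1)]
            row.append(img[y][x] - sum(window) / 9.0)
        residual.append(row)
    return residual

def residual_energy(img):
    """Variance of the residual: zero for perfectly smooth (linear) content."""
    values = [v for row in noise_residual(img) for v in row]
    return pvariance(values)

# Toy 8x8 frames: a smooth gradient vs. the same gradient plus fine-grained noise.
smooth = [[float(x + y) for x in range(8)] for y in range(8)]
noisy = [[float(x + y) + (1.0 if (x + y) % 2 else -1.0) for x in range(8)] for y in range(8)]
print(residual_energy(smooth), residual_energy(noisy))  # the noisy frame scores much higher
```

A generator that renders unnaturally clean or unnaturally patterned texture would shift statistics like this one, which is why learned detectors look below the level of visible content.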

Critically, this new detector doesn’t just focus on faces or one part of the frame – it scans the entire scene (backgrounds, movements, audio waveform, etc.) for anything that “doesn’t fit.” Earlier deepfake detectors often zeroed in on facial glitches (like unnatural eye blinking or odd skin textures) and could fail if no face was visible. In contrast, the universal model analyzes multiple regions per frame and across consecutive frames, catching subtle spatial and temporal inconsistencies that older methods missed. It’s a transformer-based AI model that essentially learns what real vs. fake looks like in a broad sense, instead of using one narrow trick. This breadth is what makes it universal – as one researcher put it, “It’s one model that handles all these scenarios… that’s what makes it universal”.
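Transformer-based video models typically cut a clip into small space-time blocks (“tubelets”) and treat each block as a token. This is one way a model can attend to backgrounds and motion rather than only to faces. The sketch below assumes that tokenization scheme, as used in video transformers such as ViViT. The article does not say how this detector tokenizes its input, and `tubelet_tokens` is a hypothetical helper.

```python
def tubelet_tokens(video, t=2, p=2):
    """Split video[T][H][W] into flattened t x p x p tubelets, one token each."""
    T, H, W = len(video), len(video[0]), len(video[0][0])
    if T % t or H % p or W % p:
        raise ValueError("video dimensions must be divisible by the tubelet size")
    tokens = []
    for f0 in range(0, T, t):          # blocks of consecutive frames
        for y0 in range(0, H, p):      # rows of patches
            for x0 in range(0, W, p):  # columns of patches
                tokens.append([video[f][y][x]
                               for f in range(f0, f0 + t)
                               for y in range(y0, y0 + p)
                               for x in range(x0, x0 + p)])
    return tokens

# A 4-frame, 4x4 toy clip with a distinct value at every position.
clip = [[[100 * f + 10 * y + x for x in range(4)] for y in range(4)] for f in range(4)]
tokens = tubelet_tokens(clip)
print(len(tokens), len(tokens[0]))  # 8 tokens of 8 values each
```

Every region of every frame, face or not, becomes a token, so attention can compare any part of the scene with any other part across time.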

Training Data and Testing: Building a Better Fake-Spotter

Achieving 98% accuracy required feeding the detector a large and varied diet of both real and fake media. The researchers trained the system on AI-generated videos produced by many different generators, from deepfake face-swaps to fully AI-created clips. For instance, they used samples from tools such as Stable Video Diffusion, Video-Crafter, and CogVideo to teach the AI what various fake “fingerprints” look like. Because it learns from many techniques, the model is not fooled by just one type of deepfake. Impressively, the team reported that the detector can even adapt to new deepfake methods after seeing only a few examples. This means that if a brand-new AI video generator comes out next month, the detector could learn its telltale signs without complete retraining.
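The researchers’ few-shot mechanism isn’t described here, so the following is only an analogy. It uses a prototype (nearest-centroid) classifier, a common few-shot technique, to register a new generator from a handful of embedding vectors without retraining anything. The embeddings, labels, and function names are all hypothetical. In practice the vectors would come from a trained feature extractor.

```python
from math import dist

def centroid(vectors):
    """Element-wise mean of a list of equal-length vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]

def add_class(prototypes, label, few_shot_embeddings):
    """Register a new class (e.g. an unseen generator) from a handful of examples."""
    prototypes[label] = centroid(few_shot_embeddings)

def classify(prototypes, embedding):
    """Return the label whose prototype is nearest in Euclidean distance."""
    return min(prototypes, key=lambda label: dist(prototypes[label], embedding))

# Hypothetical 2-D embeddings from a frozen feature extractor.
prototypes = {"real": [0.0, 0.0], "known_fake": [5.0, 0.0]}
add_class(prototypes, "new_generator", [[0.0, 5.0], [0.2, 4.8], [-0.1, 5.1]])
print(classify(prototypes, [0.1, 4.9]))  # new_generator
```

Only the new prototype is computed, so the existing classes and the feature extractor stay untouched. This is the property that makes few-shot adaptation cheap.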

The results of testing this system have been record-breaking. In evaluations, the detector correctly flagged AI-generated videos about 98.3% of the time. This is a significant jump compared to prior detection tools, which often struggled to get above the low 90s. The researchers benchmarked their model against eight existing deepfake detection systems, and the new model outperformed all of them (the others scored around 93% or lower). Such a high detection rate is a major milestone in the arms race against deepfakes: it suggests the AI can spot almost all of the fake content thrown at it, across a wide variety of sources.
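“Accuracy” blends two different kinds of error, so a single headline number can hide important trade-offs. The sketch below uses made-up counts, not the study’s data, to show two hypothetical detectors with identical 98.3% accuracy but different catch rates and false-alarm rates.

```python
def detection_metrics(tp, fn, tn, fp):
    """tp/fn: fake clips caught/missed; tn/fp: real clips passed/wrongly flagged."""
    return {
        "accuracy": (tp + tn) / (tp + fn + tn + fp),
        "true_positive_rate": tp / (tp + fn),   # share of fakes caught
        "false_positive_rate": fp / (fp + tn),  # share of real clips wrongly flagged
    }

# Hypothetical test set: 1,000 fake and 1,000 real clips.
catches_more = detection_metrics(tp=990, fn=10, tn=976, fp=24)
never_false_alarms = detection_metrics(tp=966, fn=34, tn=1000, fp=0)
print(catches_more)
print(never_false_alarms)
```

Both detectors report 98.3% accuracy. However, the first misses 10 fakes and wrongly flags 24 real clips, while the second misses 34 fakes and flags none. Which trade-off is preferable depends on the use case.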

Of course, “98% accuracy” isn’t 100%, and the remaining 2% error rate does matter. With millions of videos uploaded online daily, even a small false-negative rate means some deepfakes will slip through, and even a small false-positive rate means some genuine videos will be flagged incorrectly. Nonetheless, this detector’s performance is currently best-in-class. It gives organizations a fighting chance to catch malicious fakes that would have passed undetected just a year or two ago. As deepfake generation gets more advanced, detection has had to keep pace, and this tool shows it is possible to significantly close the gap.
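The effect of scale is easiest to see with a little arithmetic. The figures below are assumptions chosen for illustration, not measurements of this detector: one million uploads, 1% of them fake, a 98% catch rate, and a 2% false-positive rate.

```python
def expected_outcomes(n_videos, fake_share, tpr, fpr):
    """Expected detector outcomes when screening n_videos, of which fake_share are fakes."""
    fakes = n_videos * fake_share
    reals = n_videos - fakes
    caught = fakes * tpr
    false_alarms = reals * fpr
    return {
        "missed_fakes": fakes - caught,
        "false_alarms": false_alarms,
        # Of everything flagged, the fraction that is actually fake.
        "precision": caught / (caught + false_alarms),
    }

# Assumed: 1,000,000 uploads, 1% fake, 98% of fakes caught, 2% of real clips flagged.
result = expected_outcomes(1_000_000, 0.01, tpr=0.98, fpr=0.02)
print(result)
```

Under these assumptions, about 200 fakes slip through while roughly 19,800 genuine clips are flagged, so only about a third of alerts would be actual fakes. When fakes are rare, even a strong detector needs a human review step behind it.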

How Is This Detector Different from Past Deepfake Detection Methods?

Previous deepfake detection methods were often specialized and easier to evade. One key difference is the new detector’s broad scope. Earlier detectors typically focused on specific artifacts – for example, one system might look for unnatural facial movements, while another analyzed lighting mismatches on a person’s face. These worked for certain deepfakes but failed for others. Many classic detectors also treated video simply as a series of individual images, trying to spot signs of Photoshop-style edits frame by frame. That approach falls apart when dealing with fully AI-generated video, which doesn’t have obvious cut-and-paste traces between frames. By contrast, the 98% accurate detector looks at the bigger picture (pun intended): it examines patterns over time and across the whole frame, not just isolated stills.
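A frame-by-frame classifier scores each still in isolation, so it cannot notice that something changes implausibly between frames. The toy heuristic below is our illustration, not the detector’s method. It measures how much each frame differs from the previous one and flags sudden jumps relative to the clip’s typical motion. The names `frame_changes` and `flicker_transitions`, and the 3x ratio, are hypothetical choices.

```python
from statistics import median

def frame_changes(frames):
    """Mean absolute pixel change between each pair of consecutive frames."""
    changes = []
    for a, b in zip(frames, frames[1:]):
        n_pixels = sum(len(row) for row in a)
        total = sum(abs(pa - pb) for ra, rb in zip(a, b) for pa, pb in zip(ra, rb))
        changes.append(total / n_pixels)
    return changes

def flicker_transitions(frames, ratio=3.0):
    """Indices of transitions whose change exceeds `ratio` times the clip's median change."""
    changes = frame_changes(frames)
    threshold = ratio * max(median(changes), 1e-9)
    return [i for i, c in enumerate(changes) if c > threshold]

# Toy clip: uniform brightness rising steadily, except that frame 3 "pops".
levels = [0, 1, 2, 13, 4, 5]
clip = [[[float(v)] * 4 for _ in range(4)] for v in levels]
print(flicker_transitions(clip))  # [2, 3]
```

A learned temporal model replaces this hand-set rule with patterns it discovers from data, but the principle is the same: the evidence lives between frames, not inside any single one.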

Another major advancement is the detector’s ability to handle various formats and even modalities. Past solutions usually targeted one type of media at a time – for instance, a tool might detect face-swap video deepfakes but do nothing about an AI-cloned voice in an audio clip. The new universal detector can tackle both video and audio in one system, which is a game-changer. So if a deepfake involves a fake voice over a real video, or vice versa, older detectors might miss it, whereas this one catches the deception in either stream.
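One simple way to combine modalities is to score each stream separately and let the more suspicious one decide. The sketch assumes that some detector produces a probability for each stream and that a max-score fusion rule is used. The article does not describe how the universal detector actually fuses audio and video, and `StreamScores` and `clip_verdict` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class StreamScores:
    """Per-stream probabilities that the content is synthetic (None = stream absent)."""
    video: Optional[float] = None
    audio: Optional[float] = None

def clip_verdict(scores: StreamScores, threshold: float = 0.5) -> dict:
    """Flag the clip if the most suspicious available stream crosses the threshold."""
    present = {name: s for name, s in (("video", scores.video), ("audio", scores.audio))
               if s is not None}
    if not present:
        raise ValueError("no stream scores supplied")
    driver = max(present, key=present.get)
    return {"fake": present[driver] >= threshold, "driver": driver, "score": present[driver]}

# Genuine footage with a cloned voice dubbed over it.
print(clip_verdict(StreamScores(video=0.10, audio=0.93)))
```

Taking the maximum means that a cloned voice over genuine footage is still caught. The cost is more false alarms than an averaging rule would produce.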

Additionally, the architecture of this detector is more sophisticated. It employs a constrained neural network that homes in on anomalies in data distributions rather than searching for a predefined list of errors. Think of older methods as a checklist (“Are the eyes blinking normally? Is the heartbeat visible on the neck?”), effective only until deepfake creators fix those specific issues. The new method is more like an all-purpose lie detector for media; it learns the underlying differences between real and fake content, which are harder for forgers to eliminate. Also, unlike many legacy detectors that relied heavily on seeing a human face, this model doesn’t care whether the content shows people, objects, or scenery. For example, if someone fabricated a video of an empty office with fake background details, previous detectors might not notice anything since no face is present. The universal detector would still scrutinize the textures, shadows, and motion in the scene for unnatural signs. This makes it resilient against a broader array of deepfake styles.
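The article doesn’t detail the constraint. One well-known design in media forensics is the constrained convolutional layer of Bayar and Stamm, which forces a filter’s center weight to -1 and its remaining weights to sum to 1. Such a filter predicts each pixel from its neighbors and outputs the prediction error, which suppresses scene content so the network sees manipulation traces instead. The sketch below shows only that projection step, as an assumption about the kind of constraint meant. It is not this detector’s code, and the function names are hypothetical.

```python
def constrain_kernel(kernel):
    """Project a square, odd-sized kernel so its center is -1 and other weights sum to 1."""
    c = len(kernel) // 2
    off_center = sum(v for y, row in enumerate(kernel)
                     for x, v in enumerate(row) if (y, x) != (c, c))
    if off_center == 0:
        raise ValueError("off-center weights sum to zero; cannot normalize")
    constrained = [[v / off_center for v in row] for row in kernel]
    constrained[c][c] = -1.0
    return constrained

def apply_kernel(img, kernel):
    """'Valid' 2-D correlation of a grayscale image with a square kernel."""
    k = len(kernel)
    return [[sum(kernel[dy][dx] * img[y + dy][x + dx] for dy in range(k) for dx in range(k))
             for x in range(len(img[0]) - k + 1)]
            for y in range(len(img) - k + 1)]

# During training, this projection would be re-applied after every weight update.
kernel = constrain_kernel([[1.0, 2.0, 1.0], [2.0, 5.0, 2.0], [1.0, 2.0, 1.0]])
flat = [[7.0] * 5 for _ in range(5)]
print(apply_kernel(flat, kernel))  # all values are zero up to rounding error
```

Because all the weights sum to zero, flat regions of the image produce no response. Only local deviations from what the neighbors predict survive.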

In summary, what sets this new detector apart is its universality and robustness. It’s essentially a single system that covers many bases: face swaps, entirely synthetic videos, fake voices, and more. Earlier generations of detectors were more narrow – they solved part of the problem. This one combines lessons from all those earlier efforts into a comprehensive tool. That breadth is vital because deepfake threats are evolving too. By solving the cross-platform compatibility issues that plagued older systems, the detector can maintain high accuracy even as deepfake techniques diversify. It’s the difference between a patchwork of local smoke detectors and a building-wide fire alarm system.

Why This Matters for Brand Safety and Reputational Risk

For businesses, deepfakes aren’t just an IT problem; they’re a serious brand safety and reputation risk. We live in a time when a single doctored video can go viral and wreak havoc on a company’s credibility. Imagine a fake video showing your CEO making unethical remarks, or a bogus announcement of a product recall: such a hoax could send stock prices tumbling and customers fleeing before the truth gets out. Unfortunately, these scenarios have moved from hypothetical to real, and corporate targets are already in the crosshairs of deepfake fraudsters. In 2019, for example, criminals used an AI voice clone to impersonate the chief executive of a German parent company and convinced the CEO of its UK subsidiary to wire $243,000 to a fraudulent account. In 2024, an employee at a multinational firm in Hong Kong was duped by an even more elaborate deepfake (a video call with a fake “CFO” and colleagues), resulting in a $25 million loss. Deepfake attacks against companies have surged, with AI-generated voices and videos duping financial firms out of millions and putting corporate security teams on high alert.

Beyond direct financial theft, deepfakes pose a huge reputational threat. Brands spend years building trust, which a single viral deepfake can undermine in minutes. There have been cases of fake videos of political leaders and CEOs circulating online – even if debunked eventually, the damage in the interim can be significant. Consumers might question, “Was that real?” about any shocking video involving your brand. This uncertainty erodes the baseline of trust that businesses rely on. That’s why a detection tool with very high accuracy matters: it gives companies a fighting chance to identify and respond to fraudulent media quickly, before rumors and misinformation take on a life of their own.

From a brand safety perspective, a highly accurate deepfake detector works like an early-warning radar for your reputation. It can help verify the authenticity of any suspicious video or audio featuring your executives, products, or partners. For example, if a doctored video of your CEO appears on social media, the detector could flag it quickly, allowing your team to alert the platform and your audience that it’s fake. That speed could be the difference between a contained incident and a full-blown PR crisis, especially in industries like finance, news media, and consumer goods, where public confidence is paramount. As one industry report noted, this kind of tool is a “lifeline for companies concerned about brand reputation, misinformation, and digital trust”. It’s becoming essential for any organization that could be a victim of synthetic content abuse.

Deepfakes have also introduced new vectors for fraud and misinformation that traditional security measures weren’t prepared for. Fake audio messages of a CEO asking an employee to transfer money, or a deepfake video of a company spokesperson giving false information about a merger, can bypass many people’s intuitions because we are wired to trust what we see and hear. Brand impersonation through deepfakes can mislead customers – for instance, a fake video “announcement” could trick people into a scam investment or phishing scheme using the company’s good name. The 98% accuracy detector, deployed properly, acts as a safeguard against these malicious uses. It won’t stop deepfakes from being made (just as security cameras don’t stop crimes by themselves), but it significantly boosts the chance of catching a fake in time to mitigate the harm.

Incorporating Deepfake Detection into Business AI and Cybersecurity Strategies

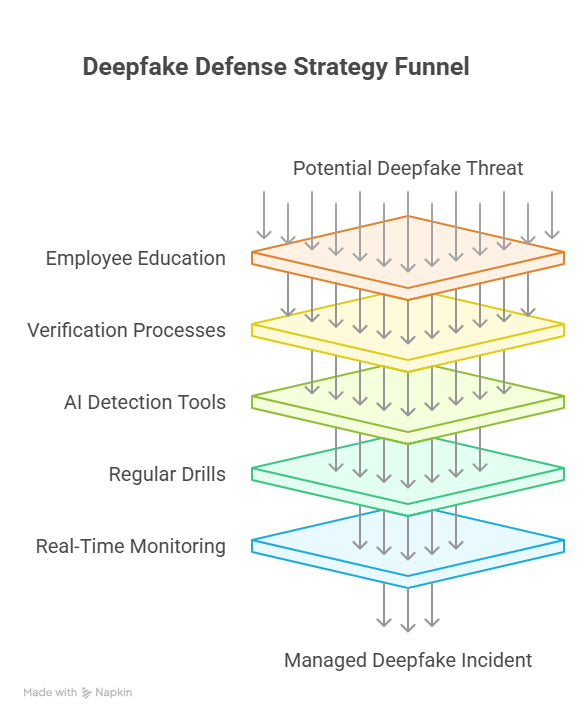

Given the stakes, businesses should proactively integrate deepfake detection tools into their overall security and risk management framework. A detector is not just a novelty for the IT department; it’s quickly becoming as vital as spam filters or antivirus software in the corporate world. Here are some strategic steps and considerations for companies looking to defend against deepfake threats:

- Employee Education and Policies: Train staff at all levels to be aware of deepfake scams and to verify sensitive communications. For example, employees should be skeptical of any urgent voice message or video that seems even slightly off. They must double-check unusual requests (especially involving money or confidential data) through secondary channels (like calling back a known number). Make it company policy that no major action is taken based on electronic communications alone without verification.

- Strengthen Verification Processes: Build robust verification protocols for financial transactions and executive communications. This might include multi-factor authentication for approvals, code words for confirming identity, or mandatory pause-and-verify steps for any request that seems odd. The 2019 voice-clone fraud already showed that recognizing a voice is no longer enough to confirm someone’s identity, so treat video and audio with the same caution you would apply to a suspicious email.

- Deploy AI-Powered Detection Tools: Incorporate deepfake detection technology into your cybersecurity arsenal. Specialized software or services can analyze incoming content (emails with video attachments, voicemail recordings, social media videos about your brand) and flag possible fakes. Advanced AI detection systems can catch subtle inconsistencies in audio and video that humans would miss. Many tech and security firms are now offering detection as a service, and some social media platforms are building it into their moderation processes. Use these tools to automatically screen content – like an “anti-virus” for deepfakes – so you get alerts in real time.

- Regular Drills and Preparedness: Update your incident response plan to include deepfake scenarios. Conduct simulations (like a fake “CEO video” emergency drill) to test how your team would react. Just as companies run phishing simulations, run a deepfake drill to ensure your communications, PR, and security teams know the protocol if a fake video surfaces. This might involve quickly assembling a crisis team, notifying platform providers to take down the content, and issuing public statements. Practicing these steps can greatly reduce reaction time under real pressure.

- Monitor and Respond in Real Time: Assign personnel or use services to continuously monitor for mentions of your brand and key executives online. If a deepfake targeting your company does appear, swift action is crucial. The faster you identify it’s fake (with the help of detection AI) and respond publicly, the better you can contain false narratives. Have a clear response playbook: who assesses the content, who contacts legal and law enforcement if needed, and who communicates to the public. Being prepared can turn a potential nightmare into a managed incident.
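A screening policy like the one described in these steps can be written down as simple routing rules. In this sketch, the thresholds, source labels, and action names are hypothetical placeholders, and `fake_score` stands in for whatever detection service you use. The out-of-band verification rule fires regardless of the score, because no detector is perfect.

```python
from dataclasses import dataclass

@dataclass
class IncomingItem:
    source: str               # e.g. "voicemail", "social_video" (hypothetical labels)
    fake_score: float         # detector output in [0, 1], assumed
    requests_payment: bool = False

def triage(item: IncomingItem, alert_at: float = 0.8, review_at: float = 0.4) -> list:
    """Map a detector score plus business context to response actions."""
    actions = []
    if item.fake_score >= alert_at:
        actions.append("alert_security_team")
    elif item.fake_score >= review_at:
        actions.append("human_review")
    if item.requests_payment:
        # Policy rule: payment requests are always confirmed via a known, separate channel.
        actions.append("verify_out_of_band")
    return actions or ["pass"]

print(triage(IncomingItem("voicemail", fake_score=0.2, requests_payment=True)))
```

Keeping the policy rule separate from the score means a convincing fake that the detector misses still cannot trigger a payment on its own.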

Integrating these measures ensures that your deepfake defense is both technical and human. No single tool is a silver bullet – even a 98% accurate detector works best in concert with good practices. Companies that have embraced these strategies treat deepfake risk as a when-not-if issue. They are actively “baking deepfake detection into their security and compliance practices,” as analysts advise. By doing so, businesses not only protect themselves from fraud and reputational damage but also bolster stakeholder confidence. In a world where AI can imitate anyone, a robust verification and detection strategy becomes a cornerstone of digital trust.

Looking ahead, we can expect deepfake detectors to be increasingly common in enterprise security stacks. Just as spam filters and anti-malware became standard, content authentication and deepfake scanning will likely become routine. Forward-thinking companies are already exploring partnerships with AI firms to integrate detection APIs into their video conferencing and email systems. The investment in these tools is far cheaper than the cost of a major deepfake debacle. With threats evolving, businesses must stay one step ahead – and this 98% accuracy detector is a promising tool to help them do exactly that.

Protect Your Business with TTMS AI Solutions

At Transition Technologies MS (TTMS), we help organizations strengthen their defenses against digital threats by integrating cutting-edge AI tools into cybersecurity strategies. From advanced document analysis to knowledge management and e-learning systems, our AI-driven solutions are designed to ensure trust, compliance, and resilience in the digital age. Partner with TTMS to safeguard your brand reputation and prepare for the next generation of challenges in deepfake detection and beyond.

FAQ

How can you tell if a video is a deepfake without specialized tools?

Even without an AI detector, there are some red flags that a video might be a deepfake. Look closely at the person’s face and movements – often, early deepfakes had unnatural eye blinking or facial expressions that seem “off.” Check for inconsistencies in lighting and shadows; sometimes the subject’s face lighting won’t perfectly match the scene. Audio can be a giveaway too: mismatched lip-sync or robotic-sounding voices might indicate manipulation. Pause on individual frames if possible – distorted or blurry details around the edges of faces (especially between transitions) can signal something is amiss. While these clues can help, sophisticated deepfakes today are much harder to spot with the naked eye, which is why tools and detectors are increasingly important.

Are there laws or regulations addressing deepfakes that companies should know about?

Regulation of deepfakes is starting to catch up as the technology’s impact grows. Different jurisdictions have begun introducing laws to deter malicious use of deepfakes. For example, China implemented regulations requiring that AI-generated media (deepfakes) be clearly labeled, and it bans the creation of deepfakes that could mislead the public or harm someone’s reputation. In the European Union, the AI Act (in force since August 2024) imposes transparency obligations on deepfakes: deployers of AI systems that generate or manipulate such content will generally have to disclose that it is artificial, with these obligations applying from August 2026, and non-compliance can lead to fines. In the United States, there isn’t a blanket federal deepfake law yet, but some states have acted: Virginia was one of the first, criminalizing certain deepfake pornography and impersonations, and California and Texas have laws against deepfakes in elections. Additionally, existing laws on fraud, defamation, and identity theft can apply to deepfake scenarios (for instance, using a deepfake to commit fraud is still fraud). For businesses, this regulatory landscape means two things. First, you should refrain from unethical uses of deepfakes in your operations and marketing to avoid legal trouble and backlash. Second, you should stay informed about emerging laws that protect victims of deepfakes, because such laws might aid your company if you ever need to take legal action against parties making malicious fakes. It’s wise to consult legal experts on how deepfake-related regulations in your region could affect your compliance and response strategies.

Can deepfake creators still fool a 98% accurate detector?

It’s difficult but not impossible. A 98% accurate detector is extremely good, but determined adversaries are always looking for ways to evade detection. Researchers have shown that adding specially crafted “noise” or artifacts to a deepfake, producing so-called adversarial examples, can sometimes trick detection models. It’s an AI cat-and-mouse game: as detectors improve, deepfake techniques adapt to become stealthier. That said, fooling a top-tier detector requires considerable expertise and effort, and the average deepfake circulating online right now is unlikely to be that expertly concealed. The new universal detector raises the bar significantly, meaning most fakes out there should be caught. But we can expect deepfake creators to develop countermeasures, so ongoing research and updated models will be needed. In short, 98% accuracy doesn’t mean invincible, but it makes successful deepfake attacks much rarer.
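The classic illustration of an adversarial attack is the fast gradient sign method (FGSM), which nudges every input feature a small step in the direction that most reduces the detector’s “fake” score. The toy below applies one FGSM step to a hypothetical logistic-regression detector with made-up weights. Real deepfake detectors are deep networks, and attacking them this way generally requires access to the model’s gradients or a close substitute.

```python
from math import exp

def fake_probability(x, w, b):
    """Toy logistic-regression detector: probability that feature vector x is fake."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + exp(-z))

def sign(v):
    return (v > 0) - (v < 0)

def fgsm_evade(x, w, eps):
    """One FGSM step against the 'fake' label.

    For loss -log p(fake), the input gradient is -(1 - p) * w, whose sign is -sign(w),
    so stepping along the loss gradient gives x_i - eps * sign(w_i)."""
    return [xi - eps * sign(wi) for xi, wi in zip(x, w)]

weights, bias = [2.0, -1.0, 0.5], 0.0   # made-up detector parameters
features = [1.0, -1.0, 1.0]             # made-up features of a deepfake clip
adversarial = fgsm_evade(features, weights, eps=1.2)
print(fake_probability(features, weights, bias), fake_probability(adversarial, weights, bias))
```

With three features the step has to be large. On a video with millions of pixels, many imperceptibly small nudges can add up to the same effect, which is why defenses such as adversarial training remain an active research area.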

What should a company do if a deepfake of its CEO or brand goes public?

Facing a deepfake attack on your company requires swift and careful action. First, internally verify the content – use detection tools (like the 98% accuracy detector) to confirm it’s fake, and gather any evidence of how it was created if possible. Activate your crisis response team immediately; this typically involves corporate communications, IT security, legal counsel, and executive leadership. Contact the platform where the video or audio is circulating and report it as fraudulent content – many social networks and websites have policies against deepfakes, especially those causing harm, and will remove them when alerted. Simultaneously, prepare a public statement or press release for your stakeholders. Be transparent and assertive: inform everyone that the video/audio is a fake and that malicious actors are attempting to mislead the public. If the deepfake could have legal ramifications (for example, stock manipulation or defamation), involve law enforcement or regulators as needed. Going forward, conduct a post-incident analysis to improve your response plan. By reacting quickly and communicating clearly, a company can often turn the tide and prevent lasting damage from a deepfake incident.

Are deepfake detection tools available for businesses to use?

Yes. While some cutting-edge detectors are still in the research phase, there are already tools on the market that businesses can leverage. A number of cybersecurity companies and AI startups offer deepfake detection services, often integrated into broader threat intelligence platforms. For instance, some provide APIs or software that can scan videos and audio for signs of manipulation. Big tech firms are also investing in this area: platforms like Facebook and YouTube have developed internal deepfake detection to police their content, and Microsoft released a deepfake detection tool (Video Authenticator) in 2020. Moreover, open-source projects and academic labs have published deepfake detection models that savvy companies can experiment with. The new 98% accuracy “universal” detector itself may become commercially or publicly available after further development; if so, it could be deployed by businesses much like antivirus software. It’s worth noting that effective use of these tools also requires human oversight. Businesses should assign trained staff or partner with vendors to implement the detectors correctly and interpret the alerts. In summary, while no off-the-shelf solution is perfect, a variety of deepfake detection options do exist and are maturing rapidly.