Companies around the world are increasingly focusing on protecting their data – and it’s easy to see why. The number of cyberattacks is growing year by year, and their scale and technological sophistication mean that even well-secured organizations can become potential targets. Phishing, ransomware, and so-called zero-day exploits that take advantage of unknown system vulnerabilities have become part of everyday reality. In the era of digital transformation, remote work, and widespread use of cloud computing, every new access point increases the risk of a data breach.

In AI-powered e-learning, data privacy and security take on a particularly critical role. Educational platforms process personal data, test results, and often training materials that hold significant value for a company. Any breach of confidentiality can lead to serious financial and reputational consequences. An additional challenge comes from regulations such as GDPR, which require organizations to be transparent about how personal data is processed and to report personal data breaches to the supervisory authority without undue delay, where feasible within 72 hours of becoming aware of them. In this dynamic environment, it’s not just about technology – it’s about trust, the very foundation of effective and secure AI-powered e-learning.

1. Why security in AI4E-learning matters so much

Artificial intelligence in corporate learning has sparked strong emotions from the very beginning – it fascinates with its possibilities but also raises questions and concerns.

Modern AI-based solutions can create a complete e-learning course in just a few minutes. They address the growing needs of companies that must quickly train employees and adapt their competencies to new roles. Such applications are becoming a natural choice for large organizations – not only because they significantly reduce costs and shorten the time required to prepare training materials, but also due to their scalability (the ability to easily create multilingual versions) and flexibility (instant content updates).

It’s no surprise that data privacy in AI-powered e-learning has become a key topic for companies worldwide. However, a crucial question arises: is the data entered into AI systems truly secure? Could the files and information sent to such applications be used to train large language models (LLMs)? This is precisely where cybersecurity in AI-powered e-learning takes center stage – it plays a key role in protecting privacy and maintaining user trust.

In this article, we’ll take a closer look at a concrete example – AI4E-learning, TTMS’s proprietary solution. Based on this platform, we’ll explain what happens to files after they are uploaded to the application and how we ensure data security in e-learning with AI and the confidentiality of all entrusted information.

2. How AI4E-learning protects user data and training materials

What kind of training can AI4E-learning create? Practically any kind. The tool proves especially effective for courses covering changing procedures, certifications, occupational health and safety (OHS), technical documentation, or software onboarding for employees.

These areas were often overlooked by organizations in the past – mainly due to the high cost of traditional e-learning. With every new certification or procedural update, companies had to assemble quality and compliance teams, involve subject-matter experts, and collaborate with external providers to create training. Now, the entire process can be significantly simplified – even an assistant can create a course using materials provided by experts.

AI4E-learning supports popular file formats – from text documents and Excel spreadsheets to videos and audio files (MP3). This means that existing training assets, such as webinar recordings or filmed classroom sessions, can be easily transformed into modern, interactive e-learning courses that continue to support employee skill development.

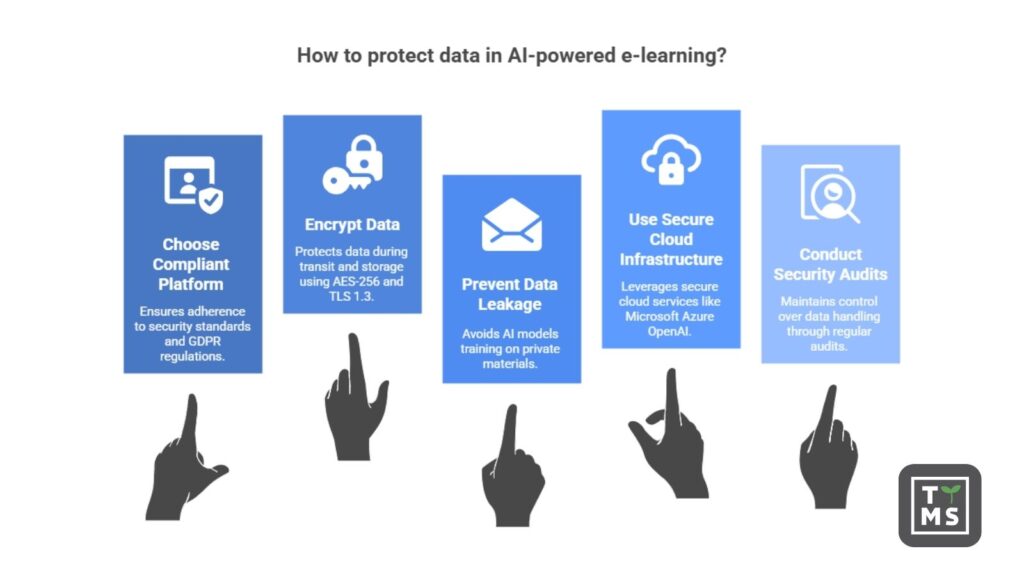

In AI-powered e-learning, information security is the foundation of the entire solution – from the moment a file is uploaded to the final publication of the course.

At the technological level, the platform applies advanced security practices that ensure both data integrity and confidentiality. All files are encrypted at rest (on servers) with AES-256 and in transit (during transfer) with TLS 1.3. This means that even if someone gains unauthorized access to stored files or intercepts traffic, the data is unreadable without the encryption keys.
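To make the "in transit" part concrete, here is a minimal sketch of how a client could refuse any connection older than TLS 1.3, using only Python's standard `ssl` module. It is an illustration under stated assumptions, not a description of how AI4E-learning is implemented; the function name `make_tls13_client_context` is hypothetical. Encryption at rest with AES-256 is not shown, because it depends on the storage service or a third-party cryptography library.

```python
import ssl


def make_tls13_client_context() -> ssl.SSLContext:
    """Build a client-side TLS context that rejects anything older than TLS 1.3.

    Hypothetical helper for illustration only.
    """
    # create_default_context() enables certificate and hostname verification.
    context = ssl.create_default_context()
    # Refuse to negotiate TLS 1.2 or earlier.
    context.minimum_version = ssl.TLSVersion.TLSv1_3
    return context


context = make_tls13_client_context()
```

A context like this can be passed to standard-library clients, for example `urllib.request.urlopen(url, context=context)`, so that uploads fail outright if the server cannot negotiate TLS 1.3.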

In addition, the AI models used within the system are protected against data leakage – they do not learn from private user materials. When needed, they rely on synthetic or limited data, minimizing the risk of uncontrolled information flow.

Cloud data security is a crucial component of modern AI-powered e-learning solutions. AI4E-learning is supported by the Azure OpenAI infrastructure operating within the Microsoft 365 environment, ensuring compliance with top corporate security standards. Most importantly, training data is never used to train public AI models – it remains fully owned by the company.

This allows training departments and instructors to maintain complete control over the process – from scenario creation and approval to final publication.

AI4E-learning is also scalable and flexible, designed to meet the needs of growing organizations. It can rapidly transform large collections of source materials into ready-to-use courses, regardless of the number of participants or topics. The system supports multilingual content, enabling fast translation and adaptation for different markets. Thanks to SCORM compliance, courses can be easily integrated into any SCORM-compatible LMS, whether it is used by a small business or a large international enterprise.

Through this approach, AI4E-learning combines technological innovation with complete data oversight and security, making it a trusted platform even for the most demanding industries.

3. Security standards and GDPR compliance

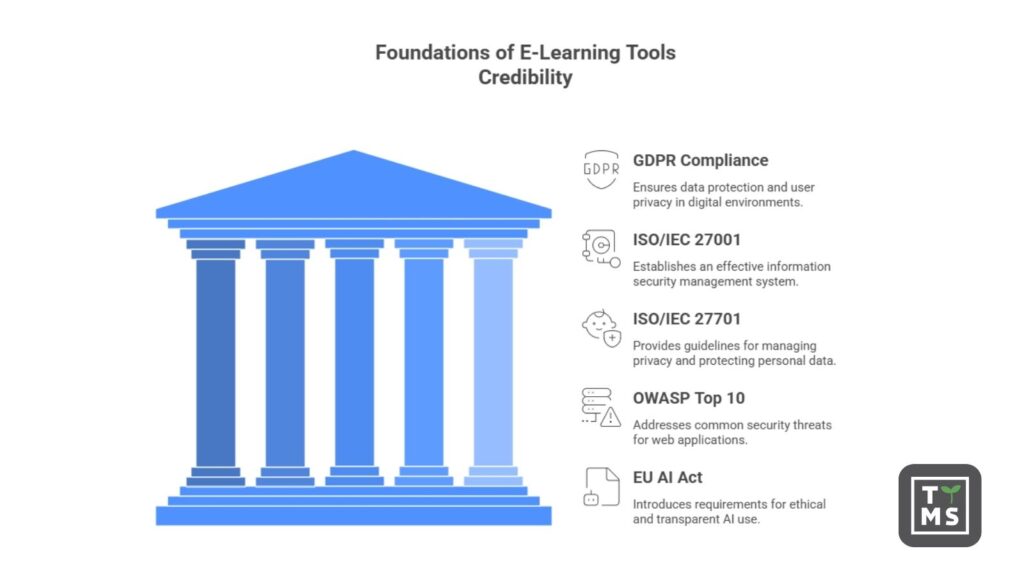

Every AI-powered e-learning application should be designed and maintained in compliance with the security standards applicable in the countries where it operates. This is not only a matter of legal compliance but, above all, of trust – users and institutions must be confident that their data and training materials are processed securely, transparently, and under full control.

Therefore, it is crucial for software providers to confirm that their solutions comply with international and local data security standards. Among the most important regulations and norms forming the foundation of credibility for AI-powered e-learning platforms are:

- GDPR (General Data Protection Regulation) – Data protection in line with GDPR is the cornerstone of privacy in the digital environment.

- ISO/IEC 27001 – The international standard for information security management.

- ISO/IEC 27701 – An extension of ISO/IEC 27001 focused on privacy protection.

- ISO/IEC 42001 – The global standard for Artificial Intelligence Management Systems (AIMS), ensuring responsible development, delivery, and use of AI technologies.

- OWASP Top 10 – A globally recognized list of the most critical security risks to web applications, relevant to any web-based AI-powered e-learning platform.

It’s also worth mentioning the new EU AI Act, which introduces requirements for algorithmic transparency, auditability, and ethical data use in machine learning processes. For data privacy in AI-powered e-learning, this means ensuring that AI systems operate effectively, responsibly, and ethically.

4. What this means for companies implementing AI4E-learning

Data protection in AI-powered e-learning is no longer just a regulatory requirement – it has become a strategic pillar of trust between companies, their clients, partners, and course participants. In a B2B environment, where information often relates to operational processes, employee competencies, or contractor data, even a single breach can have serious reputational and financial consequences.

That’s why organizations adopting solutions like AI4E-learning increasingly look beyond platform functionality – they prioritize transparency and compliance with international security standards such as ISO/IEC 27001, ISO/IEC 27701 and ISO/IEC 42001.

Providers who can demonstrate adherence to these standards gain a clear competitive edge, proving that they understand the importance of data security in e-learning with AI and can ensure data protection at every stage of the learning process.

In practice, companies choosing AI4E-learning are investing not only in advanced technology but also in peace of mind and credibility – both for their employees and their clients. AI and data security have become central elements of digital transformation, directly shaping organizational reputation and stability.

5. Why partner with TTMS to implement AI‑powered e‑learning solutions

AI‑driven e‑learning rollouts require a partner that combines technological maturity with a rigorous approach to security and compliance. For years, TTMS has delivered end‑to‑end corporate learning projects—from needs analysis and instructional design, through AI‑assisted content automation, to LMS integrations and post‑launch support. This means we take responsibility for the entire lifecycle of your learning solutions: strategy, production, technology, and security.

Our experience is reinforced by auditable security and privacy management standards. We hold the following certifications:

- ISO/IEC 27001 – systematic information security management,

- ISO/IEC 27701 – privacy information management (PIMS) extension,

- ISO/IEC 42001 – global standard for AI Management Systems (AIMS),

- ISO 9001 – quality management system,

- ISO/IEC 20000 – IT service management system,

- ISO 14001 – environmental management system,

- MSWiA License (Poland) – work standards for software development projects for police and military.

By partnering with TTMS, you gain:

- secure, regulation‑compliant AI‑powered e‑learning implementations based on proven standards,

- speed and scalability in content production (multilingual delivery, “on‑demand” updates),

- an architecture resilient to data leakage (encryption, no training of models on client data, access controls),

- integrations with your ecosystem (SCORM, LMS, M365/Azure),

- measurable outcomes and dedicated support for HR, L&D, and Compliance teams.

Ready to accelerate your learning transformation with AI—securely and at scale? Get in touch to see how we can help: TTMS e‑learning.

Who is responsible for data security in AI-powered e-learning?

The responsibility for data security in AI-powered e-learning lies with both the technology provider and the organization using the platform. The provider must ensure compliance with international standards such as ISO/IEC 27001, 27701 and 42001, while the company manages user access and permissions. Shared responsibility builds a strong foundation of trust.
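As a rough illustration of the customer's side of this split, the sketch below shows a simple role-based permission check for the course workflow (scenario creation, approval, publication). The role names, the `Action` values, and the `is_allowed` helper are all hypothetical and do not describe the actual AI4E-learning permission model.

```python
from enum import Enum


class Action(Enum):
    CREATE_SCENARIO = "create_scenario"
    APPROVE_SCENARIO = "approve_scenario"
    PUBLISH_COURSE = "publish_course"


# Hypothetical mapping of roles to the actions they may perform.
ROLE_PERMISSIONS = {
    "author": {Action.CREATE_SCENARIO},
    "reviewer": {Action.CREATE_SCENARIO, Action.APPROVE_SCENARIO},
    "training_admin": set(Action),  # every action
}


def is_allowed(role: str, action: Action) -> bool:
    """Return True only if the role is known and explicitly grants the action."""
    return action in ROLE_PERMISSIONS.get(role, set())
```

Unknown roles get an empty permission set, so access is denied by default rather than granted by omission.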

How can data be protected when using AI-powered e-learning?

Protection begins with platforms that meet recognized data security standards, including AES-256 encryption and GDPR compliance. Ensuring that models do not learn from user data greatly reduces the risk of privacy breaches.

Is using artificial intelligence in e-learning safe for data?

Yes – as long as the platform follows sound cybersecurity principles. In corporate-grade solutions like AI4E-learning, data remains encrypted, isolated, and never used to train public models.

Can data sent to an AI system be used to train models?

No, not in secure corporate environments. In platforms such as AI4E-learning, user data stays within a closed infrastructure and is not used to train public models, ensuring full control and transparency.

Does implementing AI-based e-learning require additional security procedures?

Yes. Companies should update their internal rules to reflect the data privacy requirements of AI-powered e-learning, defining verification, access control, and incident response processes.
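One piece of an incident response process that is easy to automate is deadline tracking. Under GDPR Article 33, a personal data breach must be reported to the supervisory authority without undue delay and, where feasible, within 72 hours of the organization becoming aware of it. The sketch below computes that deadline; the function names are hypothetical, and a real process would also cover assessment, documentation, and notifying affected individuals where required.

```python
from datetime import datetime, timedelta, timezone

# 72-hour reporting window from GDPR Article 33(1).
GDPR_NOTIFICATION_WINDOW = timedelta(hours=72)


def notification_deadline(aware_at: datetime) -> datetime:
    """Return the latest time to notify the supervisory authority."""
    if aware_at.tzinfo is None:
        raise ValueError("aware_at must be a timezone-aware timestamp")
    return aware_at + GDPR_NOTIFICATION_WINDOW


def hours_remaining(aware_at: datetime, now: datetime) -> float:
    """Hours left until the deadline (negative once it has passed)."""
    return (notification_deadline(aware_at) - now).total_seconds() / 3600


# Example: the breach was discovered on 6 January 2025 at 09:00 UTC.
aware = datetime(2025, 1, 6, 9, 0, tzinfo=timezone.utc)
deadline = notification_deadline(aware)
```

Requiring timezone-aware timestamps avoids silent off-by-hours errors when incidents are logged across regions.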