Introduction: An astonishing number of employees are pasting company secrets into public AI tools – one 2025 report found 77% of workers have shared sensitive data via ChatGPT or similar AI. Generative AI has rapidly become the No. 1 channel for corporate data leaks, putting CIOs and CISOs on high alert. Yet the allure of GPT’s productivity and insights is undeniable. For large enterprises, the question is no longer “Should we use AI?” but “How can we use GPT on our own terms, without risking our data?”

The answer emerging in boardrooms is to build a private GPT layer – essentially, your company’s own ChatGPT-style AI, run within your security perimeter. This approach lets you harness cutting-edge GPT models as a powerful reasoning engine, while keeping proprietary information safely under your control. In this article, we’ll explore how big companies can stand up a private GPT-powered AI assistant, covering the architecture (GPT APIs, vector databases, access controls, encryption), best practices to keep it accurate (and non-hallucinatory), realistic cost estimates from ~$50K to millions, and the strategic benefits of owning your AI brain. Let’s dive in.

1. Why Enterprises Are Embracing Private GPT Layers

Public AI services like ChatGPT, Google Bard, or Claude showed what’s possible with generative AI – but they raise red flags for enterprise use. Data privacy, compliance, and control are the chief concerns. Executives worry about where their data is going and whether it might leak or be used to train someone else’s model. In fact, regulators have started clamping down (the EU’s AI Act, GDPR, etc.), even temporarily restricting tools like ChatGPT over privacy issues. Security incidents have proven these fears valid: employees inadvertently creating “shadow AI” risks by pasting confidential info into chatbots, and prompt injection attacks or data breaches exposing chat logs. Moreover, relying on a third-party AI API means unpredictable changes or downtime – not acceptable for mission-critical systems.

All these factors are fueling a shift. 2026 is shaping up to be the year of “Private AI” – enterprises deploying AI stacks inside their own environment, tuned to their data and governed by their rules. In a private GPT setup, the models are fully controlled by the company, data stays in a trusted environment, and usage is governed by internal policy. Essentially, AI stops being a public utility and becomes part of your core infrastructure. The payoff? Companies get the productivity and intelligence boost of GPT, without compromising on security or compliance. It’s the best of both worlds: AI innovation and enterprise-grade oversight.

2. Private GPT Layer Architecture: Key Components and Security

Standing up a private GPT-powered assistant requires integrating several components. At a high level, you’ll be combining a large language model’s intelligence with your enterprise data and wrapping it in strict security. Here’s an overview of the architecture and its key pieces:

- GPT Model (Reasoning Engine via API or On-Prem): At the core is the large language model itself – for example, GPT-4/5 accessed through an API (OpenAI, Azure OpenAI, etc.) or a self-hosted LLM like LLaMA on your own servers. This is the brain that can understand queries and generate answers. Many enterprises start by calling a vendor’s GPT API for convenience, then may graduate to hosting fine-tuned models internally for more control. Either way, the GPT model provides the natural language reasoning and generative capability.

- Vector Database (Enterprise Knowledge Base): A private GPT is only as helpful as the knowledge you give it. Instead of trying to stuff your entire company wiki into the model’s prompt, you use a vector database (like Pinecone, Chroma, Weaviate, etc.) to store embeddings of your internal documents. Think of this as the AI’s “long-term memory.” When a user asks something, the system converts the query into a vector and finds semantically relevant documents from this database. Those facts are then fed into GPT to ground its response. This Retrieval-Augmented Generation (RAG) approach means GPT can draw on your proprietary knowledge base in real time, rather than just its training data. (For example, you might embed PDFs, SharePoint files, knowledge base articles, etc. so that GPT can pull in the latest policy or report when answering a question.)

- Orchestration Layer (Query Processing & Tools): To make the magic happen, you’ll need some middleware (often a custom application or use of frameworks like LangChain). This layer handles the workflow: accepting user queries, performing the vector search, constructing the prompt with retrieved data (“context”), calling the GPT model API, and formatting the answer. It can also include tool integrations or function calling – for instance, GPT might decide to call a calculator or database lookup function mid-conversation. The orchestration logic ensures the GPT model gets the right context and that the user gets a useful, formatted answer (with source citations, for example).

- Access Control & Authorization: Unlike public ChatGPT, a private GPT must respect internal permissions. Strong access control mechanisms are built in so users only retrieve data they’re allowed to see. This can be done by tagging vectors with permissions and filtering results based on the query initiator’s role/credentials. Advanced setups use context-based access control (CBAC), which dynamically decides if a piece of content should be served to a user based on factors like role, content sensitivity, and even anomaly detection (e.g. blocking a finance employee’s query if it tries to pull HR data). In short, the system enforces your existing data security policies – the AI only answers with data that user is cleared to access.

- Encryption & Data Security: All data flowing through the private GPT layer should be encrypted at rest and in transit. This means encrypting the vector database contents, any cached conversation logs, etc., preferably with keys that your company controls (e.g. using a cloud Key Vault or on-prem HSM). If using cloud services, enterprise plans often allow bringing your own encryption keys for data stores. This way, even if an attacker or cloud insider accessed the raw database, the contents are gibberish without your key. Additionally, communication between components (the app, vector DB, GPT API) is done over secure channels (HTTPS/TLS), and sensitive fields can be masked or hashed. Some organizations even encrypt the embeddings in the vector store to prevent reverse-engineering the original text. In practice, encryption at rest + in transit, with strict key management, provides a strong defense such that even a breach won’t easily expose plaintext data.

- Secure Deployment (VPC or On-Prem Environment): Equally important is where all these components run. Best practice is to deploy the entire AI stack in a contained, private network – for example, within a Virtual Private Cloud (VPC) on AWS/Azure/GCP, or on-premises data center – with no public internet access to the core components. This network isolation ensures that your vector DB, application server, and even the GPT model endpoint (if using a cloud API) are not reachable from the open internet. Access is only via your internal apps/VPN. Even if an API key leaked, an attacker couldn’t use it unless they’re on your network. This closed architecture greatly reduces the attack surface.

2.1 GPT as the Brain, Data as the Memory

In this architecture, GPT serves as the reasoning layer, and your enterprise data repository serves as the memory layer. The model provides the “brainpower” – understanding user inputs and generating fluent answers – while the vector database supplies the factual knowledge it needs to draw upon. GPT itself isn’t omniscient about your proprietary data (you wouldn’t want all that baked irretrievably into the model); instead, it retrieves facts as needed. For example, GPT might know how to formulate a step-by-step explanation, but when asked “What is our warranty policy for product X?”, it will pull the exact policy text from the vector store and incorporate that into its answer. This division of labor lets the AI give accurate, up-to-date, and context-specific responses. It’s very much like a human: GPT is the articulate expert problem-solver, and your databases and documents are the reference library it uses to ensure answers are grounded in truth.

3. Keeping the AI Up-to-Date and Minimizing “Hallucinations”

One major advantage of a private GPT layer is that you can keep its knowledge current without constantly retraining the underlying model. In a RAG (retrieval-augmented) design, the model’s memory is essentially your vector database. Updating the AI’s knowledge is as simple as updating your data source: when new or changed information comes in (a new policy, a fresh batch of reports, updated procedures), you feed it into the pipeline (chunk and embed the text, add to the vector DB). The next user query will then find this new content. There’s no need to fine-tune the base GPT on every data update – you’re injecting up-to-date context at query time, which is far more agile. Good practice is to set up an automated ingestion process or schedule (e.g. re-index the latest documents nightly or whenever changes are published) to keep the vector store fresh. This ensures the AI isn’t giving answers based on last quarter’s data when this quarter’s data is available.

Even with current data, GPT models can sometimes hallucinate – that is, confidently generate an answer that sounds plausible but is false or not grounded in the provided context. Minimizing these hallucinations is critical in enterprise settings. Here are some best practices to ensure your private GPT stays accurate and on-track:

- Ground the Model in Context: Always provide relevant context from your knowledge base for the model to use, and instruct it to stick to that information. By prefacing the prompt with, “Use the information below to answer and don’t add anything else,” the AI is less likely to go off-script. If the user query can’t be answered with known data, the system can respond with a fallback (e.g. “I’m sorry, I don’t have that information.”) rather than guessing. The more your answers are based on real internal documents, the less room for the model’s imagination to introduce errors.

- Regularly Curate and Validate Data: Ensure the content in your vector database is accurate and authoritative. Archive or tag outdated documents so they aren’t used. It’s also worth reviewing what sources the AI is drawing from – for important topics, have subject matter experts vet the reference materials that feed the AI. Essentially, garbage in, garbage out: if the knowledge base is clean and correct, the AI’s outputs will be too.

- Tune Prompt and Parameters: You can reduce creative “flights of fancy” by configuring the model’s generation settings. For instance, using a lower temperature (a parameter that controls randomness) will make GPT’s output more deterministic and fact-focused. Prompt engineering helps as well – e.g., instruct the AI to include source citations for every fact (which forces it to stick to the provided sources), or to explicitly say when it’s unsure. A well-crafted system prompt and consistent style guidelines will guide the model to behave reliably.

- Hallucination Monitoring and Human Oversight: In high-stakes use cases, implement a review process. You might build automatic checks for certain red-flag answers (to catch obvious errors or policy violations) and route those to a human reviewer before they reach the end-user. Also consider a feedback loop: if users spot an incorrect answer, there should be a mechanism to correct it (update the data source or adjust the AI’s instructions). Many enterprises set up automated checks and human-in-the-loop review for critical outputs, with clear policies on when the AI should abstain or escalate to a person. Tracking the AI’s performance over time – measuring accuracy, looking at cases of mistakes – will let you continuously harden the system against hallucinations.

In practice, companies find that an internal GPT agent, when constrained to talk only about what it knows (your data), is far less prone to making things up. And if it does err, you have full visibility into how and why, which helps in refining the system. Over time, your private GPT becomes smarter and more trusted, because you’re continuously feeding it validated information and catching any stray hallucinations before they cause harm.

4. What Does It Cost to Build a Private GPT Layer?

When proposing a private GPT initiative, one of the first questions leadership will ask is: What’s this going to cost? The answer can vary widely based on scale and choices, but we can outline some realistic ranges. Broadly, a small-scale deployment might cost on the order of $50,000 per year, whereas a large enterprise-grade deployment can run in the millions of dollars annually. Let’s break that down.

For a pilot or small departmental project, costs are relatively modest. You might integrate a GPT-4 API with a few hundred documents and a handful of users. In this scenario, the expenses come from API usage fees (OpenAI charges per 1,000 tokens, which might be a few hundred dollars to a couple thousand per month for light usage), plus the development of the integration and any cloud services (vector DB, application hosting). Initial setup and integration could be done with a small team in weeks – think in the tens of thousands for labor. In fact, one small business implementation reported an initial integration cost around $50,000, with ongoing operational costs of ~$2,000/month. That puts the first-year cost in the ballpark of $70–80K, which is feasible for many mid-sized companies to experiment with private GPT.

Now, for a full-scale enterprise rollout, the costs scale up significantly. You’re now supporting possibly thousands of users and queries, strict uptime requirements, advanced security, and continuous improvements. A recent industry analysis found that CIOs often underestimate AI project costs by up to 10×, and that the real 3-year total cost of ownership for enterprise-grade GPT deployments ranges from $1 million up to $5 million. That averages out to perhaps $300K–$1.5M per year for a large deployment. Why so high? Because transforming a raw GPT API into a robust enterprise service has many hidden cost factors beyond just model fees:

- Development & Integration: Building the custom application layers, doing security reviews, connecting to your data sources, and UI/UX work. This includes things like authentication, user interface (chat front-end or integrations into existing tools), and any custom training. Estimates for a full production build can range from a few $100K in development costs upward depending on complexity.

- Infrastructure & Cloud Services: Running a private GPT layer means you’ll likely incur cloud infrastructure costs for hosting the vector database, databases for logs/metadata, perhaps GPU servers if you host the model or use a dedicated instance, and networking. Additionally, premium API plans or higher-rate limits may be needed as usage grows. Don’t forget storage and backup costs for all those embeddings and chat history. These can amount to tens of thousands per month for a large org.

- Ongoing Operations & Support: Just like any critical application, there are recurring costs for maintaining and improving the system. This includes monitoring tools, debugging and optimizing prompts, updating the knowledge base, handling model upgrades, and user support/training. Many organizations also budget for compliance and security assessments continuously. A rule of thumb is annual maintenance might be 15–20% of the initial build cost. On top of that, training programs for employees, or change management to drive AI adoption, can incur costs as well.

In concrete terms, a large enterprise (think a global bank or Fortune 500 company) deploying a private GPT across the organization could easily spend $1M+ in the first year, and similar or more in subsequent years factoring in cloud usage growth and dedicated support. A mid-sized enterprise might spend a few hundred thousand per year for a more limited rollout. The range is wide, but the key is that it’s not just the $0.02 per API call – it’s the surrounding ecosystem that costs money: software development, data engineering, security hardening, compliance, and scaling infrastructure.

The good news is that these costs are coming down over time with new tools and platforms. Cloud providers are launching managed services (e.g. Azure’s OpenAI with enterprise security, AWS Bedrock, etc.) that handle some heavy lifting. There are also out-of-the-box solutions and startups focusing on “ChatGPT for your data” that can jump-start development. These can reduce time-to-value, though you’ll still pay in subscriptions or service fees. Realistically, an enterprise should plan for at least a mid six-figure annual budget for a serious private GPT deployment, with the understanding that a top-tier, global deployment might run into the low millions. It’s an investment – but as we discuss next, one that can yield significant strategic returns if done right.

5. Benefits and Strategic Value of a Private GPT Layer

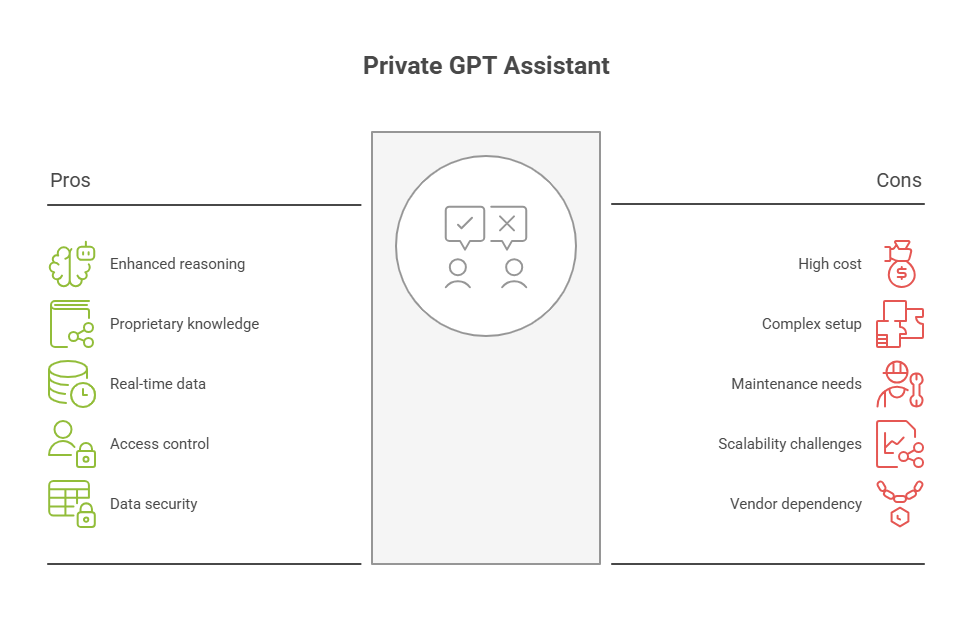

Why go through all this effort and expense to build your own AI layer? Simply put, a private GPT offers a strategic trifecta for large organizations: security, knowledge leverage, and control. Here are some of the major benefits and value drivers:

- Complete Data Privacy & Compliance: Your GPT operates behind your firewall, using your encrypted databases – so sensitive data never leaves your control. This dramatically lowers the risk of leaks and makes it much easier to comply with regulations (GDPR, HIPAA, financial data laws, etc.), since you aren’t sending customer data to an external service. You can prove to auditors that all AI data stays in-house, with full logging and oversight. This benefit alone is the reason many firms (especially in finance, healthcare, government) choose a private AI route. As one industry expert noted about customer interactions, you get the AI’s speed and scale “while keeping full ownership and control of customer data.”

- Leverage of Proprietary Knowledge: A public GPT like ChatGPT has general knowledge up to a point in time, but it doesn’t know your company’s unique data – your product specs, internal process docs, client reports, etc. By building a private layer, you unlock the value of that treasure trove of information. Employees can get instant answers from your documents, clients can interact with an AI that knows your latest offerings, and decisions can be made with insights drawn from internal data that competitors’ AI can’t access. In essence, you’re turning your siloed corporate knowledge base into an interactive, intelligent assistant available 24/7. This can shorten research cycles, improve customer service (with faster, context-rich responses), and generally make your organization’s collective knowledge far more accessible and actionable.

- Customization and Tailored Intelligence: With a private AI, you can customize the model’s behavior and training to your domain and brand. You might fine-tune the base model on your industry jargon or special tasks, or simply enforce a style guide and specific answer formats through prompting. The AI can be aligned to your company’s voice, whether that’s a formal tone or a fun one, and it can handle domain-specific questions that a generic model might fumble. This tailored intelligence means better relevance and usefulness of responses. For example, a bank’s private GPT can deeply understand banking terminology and regulations, or a tech company’s AI can provide code examples using its internal APIs. Such fine-tuning and context leads to a solution that feels like it truly “gets” your business.

- Reliability, Control and Integration: Running your own GPT layer gives you far more control over performance and integration. You’re not subject to the whims of a third-party API that might change or rate-limit you unexpectedly. You can set your own SLA (service levels) and scale the infrastructure as needed. If the model needs an update or improvement, you decide when and how to deploy it (after proper testing). Moreover, a private GPT can be deeply integrated into your systems – it can perform actions (with proper safeguards) like retrieving data from your CRM, generating reports, or triggering workflows. Because you govern it, you can connect it to internal tools that a public chatbot could never access. This tight integration can streamline operations (imagine an AI assistant that not only answers a policy question but also pulls up the relevant record from your database). In short, you gain a dependable AI “colleague” that you can continuously improve, monitor, and trust, much like any other critical internal application.

- Strategic Differentiator: In the bigger picture, having a robust private AI capability can be a competitive advantage. It enables new use cases – from hyper-personalized customer service to intelligent automation of routine tasks – that set your company apart. And you achieve this without sacrificing confidentiality. Companies that figure out how to deploy AI widely and safely will outpace those that are still hesitating due to security worries. There’s also a talent angle: employees, especially younger ones, expect modern AI tools at work. Providing a private GPT assistant boosts productivity and can improve employee satisfaction by eliminating tedious search and analysis work. It signals that your organization is forward-thinking but also responsible about technology. All of these benefits ultimately drive business value: faster decision cycles, better customer experiences, lower operational costs, and a stronger positioning in the market.

In summary, building your own private GPT layer is an investment in innovation with guardrails. It allows your enterprise to tap into the incredible power of GPT-style AI – boosting efficiency, unlocking knowledge, delighting users – while keeping the keys firmly in your own hands. In a world where data is everything, a private GPT ensures your crown jewels (your data and insights) stay protected even as you put them to work in new ways. Companies that successfully implement this will have an AI infrastructure that is safe, scalable, and tailored to their needs, giving them a distinct edge in the AI-powered economy.

Ready to Build Your Private GPT Solution?

If you’re exploring how to implement a secure, scalable AI assistant tailored to your enterprise needs, see how TTMS can help. Our experts design and deploy private GPT layers that combine innovation with full data control.

FAQ

How is a private GPT layer different from using ChatGPT directly?

Using ChatGPT (the public service) means sending your queries and data to an external, third-party system that you don’t control. A private GPT layer, by contrast, is an AI chatbot or assistant that your company hosts or manages. The key differences are data control and customization. With ChatGPT, any information you input leaves your secured environment; with a private GPT, the data stays within your company’s servers or cloud instance, often encrypted and access-controlled. Additionally, a private GPT layer is connected to your internal data – it can look up answers from your proprietary documents and systems – whereas public ChatGPT only knows what it was trained on (general internet text up to a certain date) and anything the user explicitly provides in the prompt. Private GPTs can also be tweaked in behavior (tone, compliance with company policy, etc.) in ways that a public, one-size-fits-all service cannot. In short: ChatGPT is like a powerful but generic off-the-shelf AI, while a private GPT layer is your organization’s own AI assistant, trained and governed to work with your data under your rules.

Do we need to train our own model to build a private GPT layer?

Not necessarily. In many cases you don’t have to train a brand new language model from scratch. Most enterprise implementations use a pre-existing foundation model (like GPT-4 or an open-source LLM) and access it via an API or by hosting a copy, without changing the core model weights. You can achieve a lot by using retrieval (feeding the model your data as context) rather than training. That said, there are scenarios where you might fine-tune a model on your company’s data for improved performance. Fine-tuning means taking a base model and training it further on domain-specific examples (e.g., Q&A pairs from your industry). It can make the model more accurate on specialized tasks, but it requires expertise, and careful handling to avoid overfitting or exposing sensitive info from training data. Many companies start without any custom model training – they use the base GPT model and focus on prompt engineering and retrieval augmentation. Over time, if you find the model consistently struggling with certain proprietary jargon or tasks, you could pursue fine-tuning or choose a model that better fits your needs. In summary: training your own model is optional – it’s a possible enhancement, not a prerequisite for a private GPT layer.

What data can we use in a private GPT layer’s knowledge base?

You can use a wide range of internal data – essentially any text-based information that you want the AI to be able to reference. Common sources include company manuals, policy documents, wikis, knowledge bases, SharePoint sites, PDFs, Word documents, transcripts of meetings or support calls, software documentation, spreadsheets (which can be converted to text or Q&A format), and even database records converted into readable text. The process typically involves ingesting these documents into a vector database: splitting text into chunks, generating embeddings for each chunk, and storing them. There’s flexibility in format – unstructured text works (the AI can handle natural language), and you can also include metadata (like tags for document type, creation date, sensitivity level, etc.). It’s wise to focus on high-quality, relevant data: the AI will only be as helpful as the information it has. So you might start with your top 1,000 Q&A pairs or your product documentation, rather than every single email ever written. Sensitive data can be included since this is a private system, but you should still enforce access controls (so, for example, HR documents only surface for HR staff queries). In short, any information that is in text form and that your employees or clients might ask about is a candidate for the knowledge base. Just ensure you have the rights and governance to use that data (e.g., don’t inadvertently feed in personal data without proper safeguards if regulations apply).

How do we ensure our private GPT layer doesn’t leak sensitive information?

Preventing leaks is a top priority in design. First, because the system is private, it’s not training on your data and then sharing those weights publicly – so one company’s info won’t suddenly pop out in another’s AI responses (a risk you might worry about with public models). Within your organization, you ensure safety by implementing several layers of control. Access control is vital: the AI only retrieves and shows information that the requesting user is allowed to see. So if a regular employee asks something that involves executive-only data, the system should say it cannot find an answer, rather than exposing it. This is done via permissions on the vector database entries and context-based access checks. Next, monitoring and logging: every query and response can be logged (and even audited) so that you have a trail of who asked what and what was provided. This helps in spotting any unusual activity or potential data misuse. Another aspect is prompt design – you can instruct the model, via its system prompt, not to reveal certain categories of data (like personal identifiers, or to redact certain fields). And as mentioned earlier, encryption is used so that if someone somehow gains access to the stored data or the conversation logs, they can’t read it in plain form. Some organizations also employ data loss prevention (DLP) tools in tandem, which watch for things like a user trying to paste out large chunks of sensitive output. Finally, keeping the model up-to-date with content reductions (so it doesn’t hallucinate and accidentally fabricate something that looks real) plays a role in not inadvertently “leaking” falsified info. When all these measures are in place – encryption, strict access rights, careful prompt constraints, and oversight – a private GPT layer can be locked down such that it behaves like a well-trained, discreet employee, only sharing information appropriately and securely.

Can smaller companies also build a private GPT layer, or is it only for large enterprises?

While our discussion has focused on big enterprises, smaller organizations can absolutely build a private GPT solution, just often on a more limited scale. The concept is scalable – you could even set up a mini private GPT on a single server for a small business. In fact, there are open-source projects (like PrivateGPT and others) that allow you to run a GPT-powered Q&A on your own data locally, without any external API. These can be very cost-effective – essentially the cost of a decent computer and some developer time. Small and mid-sized companies often use cloud services like Azure OpenAI or AWS with a vector database service, which let you stand up a private, secure GPT setup relatively quickly and pay-as-you-go. The difference is usually in volume and complexity: a small company might spend $10k–$50k getting a basic private assistant running for a few use cases, whereas a large enterprise will invest much more for broader integration. One consideration is expertise – large companies have teams to manage this, but a small company might not have in-house AI engineers. That’s where third-party solutions or consultants can help package a private GPT layer for you. Also, if a company is very small or doesn’t have extremely sensitive data, they might opt for a middle ground like ChatGPT Enterprise (the managed service OpenAI offers), which promises data privacy and is easier to use (but not self-hosted). In summary, it’s not only for the Fortune 500. Smaller firms can do it too – the barriers to entry are coming down – but they should start with a pilot, weigh the costs/benefits, and perhaps leverage managed solutions to keep things simpler. As they grow, they can expand the private GPT’s capabilities over time.