EU AI Act Latest Developments: Code of Practice, Enforcement, Timeline & Industry Reactions

The European Union’s Artificial Intelligence Act (EU AI Act) is entering a critical new phase of implementation in 2025. As a follow-up to our February 2025 introduction to this landmark regulation, this article examines the latest developments shaping its rollout. We cover the newly finalized Code of Practice for general-purpose AI (GPAI), the enforcement powers of the European AI Office, a timeline of implementation from August 2025 through 2027, early reactions from AI industry leaders like xAI, Meta, and Google, and strategic guidance to help business leaders ensure compliance and protect their reputations.

General-Purpose AI Code of Practice: A Voluntary Compliance Framework

One of the most significant recent milestones is the release of the General-Purpose AI (GPAI) Code of Practice, a comprehensive set of voluntary guidelines intended to help AI providers meet the EU AI Act’s requirements for foundation models. Published on July 10, 2025, the Code was drafted by independent experts through a multi-stakeholder process facilitated by the European Commission’s new AI Office. It serves as a non-binding framework covering three key areas: transparency and copyright compliance, which concern all GPAI providers, and safety and security, which applies only to providers of the most advanced models that pose systemic risk. In practice, this means GPAI providers (think developers of large language models, generative AI systems, etc.) are given concrete measures and documentation templates to ensure they disclose necessary information, respect intellectual property laws, and mitigate any systemic risks from their most powerful models.

Although adhering to the Code is optional, it offers a crucial benefit. Until harmonized standards are published, providers can rely on the Code to demonstrate compliance with the AI Act’s GPAI obligations, an effect often described as a “presumption of conformity”. Signatories therefore enjoy greater legal certainty and a lighter administrative burden in audits and assessments. This carrot-and-stick approach strongly incentivizes major AI providers to participate. Indeed, within weeks of the Code’s publication, dozens of tech firms, including Amazon, Google, Microsoft, OpenAI, Anthropic and others, had signed on as early signatories, signalling their intent to follow these best practices. The Code’s endorsement by the European Commission and the EU’s AI Board (a body of member state representatives) in August 2025 further cemented its status as an authoritative compliance tool. Providers that choose not to adhere to the Code will face stricter scrutiny: they must independently prove to regulators how their alternative measures fulfill each requirement of the AI Act.

The European AI Office: Central Enforcer and AI Oversight Hub

To oversee and enforce the EU AI Act, the European Commission established a dedicated regulator known as the European AI Office in early 2024. Housed within the Commission’s DG CONNECT, this office serves as the EU-wide center of AI expertise and enforcement coordination. Its primary role is to monitor, supervise, and ensure compliance with the AI Act’s rules – especially for general-purpose AI models – across all 27 Member States. The AI Office has been empowered with significant enforcement tools: it can conduct evaluations of AI models, demand technical documentation and information from AI providers, require corrective measures for non-compliance, and even recommend sanctions or fines in serious cases. Importantly, the AI Office is responsible for drawing up and updating codes of practice (like the GPAI Code) under Article 56 of the Act, and it acts as the Secretariat for the new European AI Board, which coordinates national regulators.

In practical terms, the European AI Office will work hand-in-hand with Member States’ authorities to achieve consistent enforcement. For example, if a general-purpose AI model is suspected of non-compliance or poses unforeseen systemic risks, the AI Office can launch an investigation in collaboration with national market surveillance agencies. It will help organize joint investigations across borders when the same AI system is deployed in multiple countries, ensuring that issues like biased algorithms or unsafe AI deployments are addressed uniformly. By facilitating information-sharing and guiding national regulators (similar to how the European Data Protection Board works under GDPR), the AI Office aims to prevent regulatory fragmentation. As a central hub, it also represents the EU in international AI governance discussions and oversees innovation-friendly measures like AI sandboxes (controlled environments for testing AI) and SME support programs. For business leaders, this means there is now a one-stop European authority focusing on AI compliance – companies can expect the AI Office to issue guidance, handle certain approvals or registrations, and lead major enforcement actions for AI systems that transcend individual countries’ jurisdictions.

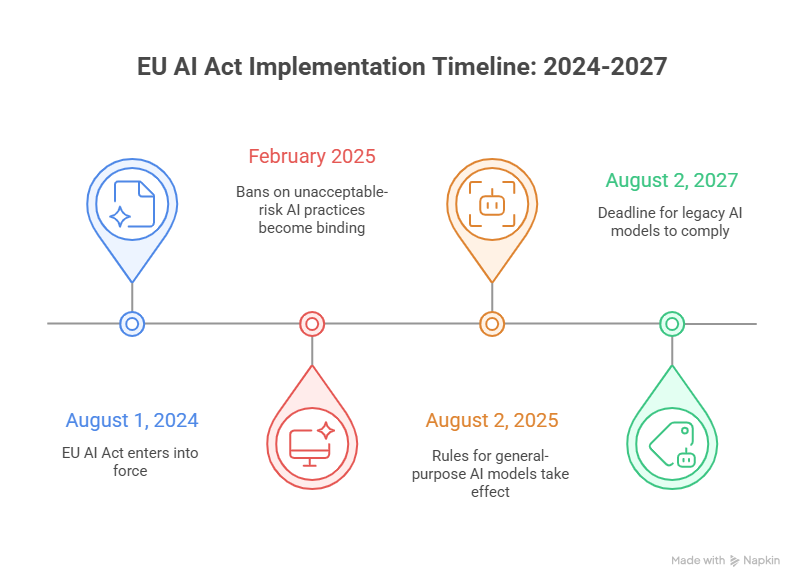

Timeline for AI Act Implementation: August 2025 to 2027

The EU AI Act is being rolled out in phases, with key obligations kicking in between 2025 and 2027. The regulation formally entered into force on August 1, 2024, but its provisions were not all active immediately. Instead, a staggered timeline gives organizations time to adapt. The first milestone came just six months in: on February 2, 2025, the Act’s bans on certain “unacceptable-risk” AI practices (e.g. social scoring, exploitative manipulation of vulnerable groups, and, subject to narrow exceptions, real-time remote biometric identification in publicly accessible spaces for law enforcement) became legally binding. Any AI system falling into these prohibited categories had to be withdrawn from the EU market or taken out of use by that date, marking an early test of compliance.

Next, on August 2, 2025, the rules for general-purpose AI models take effect. From this date forward, any general-purpose AI model (such as a large foundation model) newly placed on the EU market is required to comply with the AI Act’s transparency and copyright obligations. This includes keeping technical documentation available to the AI Office and national authorities, sharing information with downstream providers that build on the model, publishing a summary of the content used for training, and putting in place a policy to respect EU copyright law. Providers of the most advanced models, those classified as posing systemic risk, must additionally evaluate their models, mitigate those risks, report serious incidents and ensure adequate cybersecurity. Notably, there is an important grace period for existing AI models that were already on the market before August 2025: those providers have until August 2, 2027 to bring legacy models and their documentation into full compliance. This two-year transitional window acknowledges that updating already-deployed AI systems (and retrofitting documentation or risk controls) takes time. During this period, voluntary tools like the GPAI Code of Practice serve as an interim compliance bridge, helping companies align with requirements before formal standards are finalized, which is expected around 2027.
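Whether a GPAI model also falls under the additional safety and security obligations depends largely on training compute: the Act presumes a model has high-impact capabilities, and therefore systemic risk, when the cumulative compute used to train it is greater than 10^25 floating-point operations (Article 51(2)), and the Commission can also designate models on other grounds. The Python sketch below is a rough screening aid, not a legal test. It assumes training compute is estimated with the widely used 6 × parameters × tokens rule of thumb for dense transformer models, and the two example models are hypothetical.

```python
# Rough screening sketch for the AI Act's systemic-risk compute presumption.
# ASSUMPTION: training compute is estimated with the 6 * N * D rule of thumb
# (N = parameter count, D = training tokens), a common approximation for
# dense transformers; it is not a method prescribed by the Act.

SYSTEMIC_RISK_FLOP_THRESHOLD = 1e25  # Article 51(2): "greater than 10^25" FLOPs


def estimate_training_flops(parameters: float, training_tokens: float) -> float:
    """Approximate total training compute in floating-point operations."""
    if parameters <= 0 or training_tokens <= 0:
        raise ValueError("parameters and training_tokens must be positive")
    return 6.0 * parameters * training_tokens


def presumed_systemic_risk(training_flops: float) -> bool:
    """True if compute exceeds the presumption threshold (strictly greater)."""
    return training_flops > SYSTEMIC_RISK_FLOP_THRESHOLD


if __name__ == "__main__":
    # Hypothetical models for illustration only.
    hypothetical_models = {
        "model-a (7B params, 2T tokens)": (7e9, 2e12),
        "model-b (400B params, 15T tokens)": (4e11, 1.5e13),
    }
    for name, (n_params, n_tokens) in hypothetical_models.items():
        flops = estimate_training_flops(n_params, n_tokens)
        print(f"{name}: ~{flops:.1e} FLOPs, presumed systemic risk: "
              f"{presumed_systemic_risk(flops)}")
```

With these assumed figures, model-a comes out at roughly 8.4 × 10^22 FLOPs, well under the threshold, while model-b comes out at roughly 3.6 × 10^25 FLOPs and would be presumed to pose systemic risk. A provider would normally rely on its own logged compute rather than this approximation.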

The AI Act’s remaining obligations phase in over 2026 and 2027. On August 2, 2026 (two years after entry into force), the majority of provisions become applicable, including the requirements for the high-risk AI systems listed in Annex III of the Act, which covers uses such as employment, credit scoring, education and critical infrastructure. High-risk AI that serves as a safety component of products already covered by EU product-safety legislation (Annex I, for example medical devices or machinery) gets a longer lead time, with a compliance deadline at the three-year mark, August 2, 2027. In both cases, high-risk systems must undergo conformity assessments and meet requirements for logging, human oversight and more. In effect, the period from mid-2025 through 2027 is when companies will feel the AI Act’s bite: first in the generative and general-purpose AI domain, and subsequently across regulated industry-specific AI applications. Businesses should mark August 2025 and August 2026 on their calendars for incremental responsibilities, with August 2027 as the horizon by which most AI systems in scope need to meet the new EU standards. Regulators have also indicated that formal “harmonized standards” for AI (technical standards developed via European standards organizations) are expected by 2027 to further streamline compliance.
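The staggered dates above can be encoded as a small lookup, for example to tag items in an internal compliance tracker. This Python sketch covers only the headline milestones and simplifies the legal detail: Annex III covers standalone high-risk uses such as employment or credit scoring, while Annex I covers high-risk AI built into products already regulated by EU product-safety law, such as medical devices. The milestone labels and function names are our own hypothetical shorthand.

```python
from datetime import date

# Headline application dates of the EU AI Act (Regulation (EU) 2024/1689).
# The labels are shorthand; several milestones have exceptions not modelled here.
MILESTONES = [
    (date(2024, 8, 1), "Act enters into force"),
    (date(2025, 2, 2), "Bans on prohibited AI practices apply"),
    (date(2025, 8, 2), "Obligations for newly placed GPAI models apply"),
    (date(2026, 8, 2), "Most provisions apply, incl. Annex III high-risk systems"),
    (date(2027, 8, 2), "Annex I high-risk systems; pre-2025 GPAI models must comply"),
]

GPAI_RULES_START = date(2025, 8, 2)
LEGACY_GPAI_DEADLINE = date(2027, 8, 2)


def milestones_in_effect(on: date) -> list[str]:
    """Return the labels of all milestones that apply on the given date."""
    return [label for start, label in MILESTONES if start <= on]


def gpai_compliance_deadline(placed_on_market: date) -> date:
    """Date by which a GPAI model must comply, given when it was placed on the market.

    Models placed before 2 August 2025 benefit from the transition period to
    2 August 2027; later models must comply as soon as they are placed.
    """
    if placed_on_market < GPAI_RULES_START:
        return LEGACY_GPAI_DEADLINE
    return placed_on_market


if __name__ == "__main__":
    for label in milestones_in_effect(date(2026, 1, 15)):
        print(label)  # the first three milestones
    print(gpai_compliance_deadline(date(2024, 11, 1)))  # 2027-08-02
```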

Industry Reactions: What xAI, Google, and Meta Reveal

How have AI companies responded so far to this evolving regulatory landscape? Early signals from industry leaders provide a telling snapshot of both support and concern. On one hand, many big players have publicly embraced the EU’s approach. For example, Google affirmed it would sign the new Code of Practice, and Microsoft’s President Brad Smith indicated Microsoft was likely to do the same. Numerous AI developers see value in the coherence and stability that the AI Act promises – by harmonizing rules across Europe, it can reduce legal uncertainty and potentially raise user trust in AI products. This supportive camp is evidenced by the long list of initial Code of Practice signatories, which includes not just enterprise tech giants but also a range of startups and research-focused firms from Europe and abroad.

On the other hand, some prominent companies have voiced reservations or chosen a more cautious engagement. Notably, Elon Musk’s AI venture xAI made headlines in July 2025 by agreeing to sign only the “Safety and Security” chapter of the GPAI Code, and pointedly not the transparency or copyright chapters. In a public statement, xAI said that while it “supports AI safety” and will adhere to the safety chapter, it finds other parts of the Act and the Code “profoundly detrimental to innovation” and believes the copyright rules represent an overreach. Because it signed only one chapter, xAI will have to demonstrate compliance with the transparency and copyright obligations by other means. This selective approach suggests a concern that strict transparency or data disclosure mandates could expose proprietary information or erode competitive advantage. Meta (Facebook’s parent company) went further and declined to sign the Code of Practice at all, arguing that the voluntary Code introduces “legal uncertainties for model developers” and imposes measures that go “far beyond the scope of the AI Act”. In other words, Meta felt the Code’s commitments might be too onerous or premature, given that they extend into areas not explicitly dictated by the law itself (Meta has been particularly vocal about issues like open-source model obligations and copyright filters, which the company sees as problematic).

These divergent reactions reveal an industry both cognizant of AI’s societal risks and wary of regulatory constraints. Companies like Google and OpenAI, by quickly endorsing the Code of Practice, signal that they are willing to meet higher transparency and safety bars – possibly to pre-empt stricter enforcement and to position themselves as responsible leaders. In contrast, pushback from players like Meta and the nuanced participation of xAI highlight a fear that EU rules might undercut competitiveness or force unwanted disclosures of AI training data and methods. It’s also telling that some governments and experts share these concerns; for instance, during the Code’s approval, one EU member state (Belgium) reportedly raised objections about gaps in the copyright chapter, reflecting ongoing debates about how best to balance innovation with regulation. As the AI Act moves from paper to practice, expect continued dialogue between regulators and industry. The European Commission has indicated it will update the Code of Practice as technology evolves, and companies – even skeptics – will likely engage in that process to make their voices heard.

Strategic Guidance for Business Leaders

With the EU AI Act’s requirements steadily coming into force, business leaders should take proactive steps now to ensure compliance and manage both legal and reputational risks. Here are key strategic considerations for organizations deploying or developing AI:

- Audit Your AI Portfolio and Risk-Classify Systems: Begin by mapping out all AI systems, tools, or models your company uses or provides, and note for each whether you act as its provider (developing it or placing it on the market under your own name) or its deployer (using it), since the Act assigns different obligations to each role. Determine which ones might fall under the AI Act’s definitions of high-risk AI systems (e.g. AI in regulated fields like health, finance, HR, etc.) or general-purpose AI models (broad AI models that could be adapted to many tasks). This risk classification is essential: high-risk systems will need to meet stricter requirements (e.g. conformity assessments, documentation, human oversight), while GPAI providers have specific transparency and copyright obligations, plus safety obligations for models with systemic risk. By understanding where each AI system stands, you can prioritize compliance efforts on the most critical areas.

- Establish AI Governance and Compliance Processes: Treat AI compliance as a cross-functional responsibility involving your legal, IT, data science, and risk management teams. Develop internal guidelines or an AI governance framework aligned with the AI Act. For high-risk AI applications, this means creating processes for thorough risk assessments, data quality checks, record-keeping, and human-in-the-loop oversight before deployment. For general-purpose AI development, implement procedures to document training data sources, methodologies to mitigate biases or errors, and security testing for model outputs. Many companies are appointing “AI compliance leads” or committees to oversee these tasks and to stay updated on regulatory guidance.

- Leverage the GPAI Code of Practice and Standards: If your organization develops large AI models or foundation models, consider signing onto the EU’s GPAI Code of Practice or at least using it as a blueprint. Adhering to this voluntary Code can serve as evidence of good-faith compliance efforts and will likely satisfy regulators that you meet the AI Act’s requirements during this interim period before formal standards arrive. Even if you choose not to formally sign, the Code’s recommendations on transparency (like providing model documentation forms), on copyright compliance (such as policies for respecting copyrighted training data), and on safety (like conducting adversarial testing and red-teaming of models) are valuable best practices that can improve your risk posture.

- Monitor Regulatory Updates and Engage: The AI regulatory environment will continue evolving through 2026 and beyond. Keep an eye on communications from the European AI Office and the AI Board – they will issue guidelines, Q&As, and possibly clarification on ambiguous points in the Act. It’s wise to budget for legal review of these updates and to participate in industry forums or consultations if possible. Engaging with regulators (directly or through industry associations) can give your company a voice in how rules are interpreted, such as shaping upcoming harmonized standards or future revisions of the Code of Practice. Proactive engagement can also demonstrate your commitment to responsible AI, which can be a reputational asset.

- Prepare for Transparency and Customer Communications: An often overlooked aspect of the AI Act is the emphasis on transparency not just to regulators but also to users. Regardless of risk class, AI systems that interact directly with people, such as chatbots, must inform users that they are dealing with an AI unless this is obvious from the context, and AI-generated or manipulated content such as deepfakes must be disclosed or labelled. Start preparing plain-language disclosures about your AI’s capabilities and limits. Additionally, consider how you’ll handle inquiries or audits: if an EU regulator or the AI Office asks for your algorithmic documentation or evidence of risk controls, having those materials ready will expedite the process and avoid last-minute scrambles. Being transparent and forthcoming can also boost public trust, turning compliance into a competitive advantage rather than just a checkbox.
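The audit step at the top of this list can start as a simple inventory with a first-pass triage. The Python sketch below is a hypothetical helper: the use-area labels, the flag wording and the `AISystem` fields are illustrative assumptions rather than an official taxonomy, and the example sets hold only a few entries. Real classification still requires legal review against Article 5 (prohibited practices), Article 6 with Annexes I and III (high risk), and Article 50 (transparency).

```python
from dataclasses import dataclass

# HYPOTHETICAL first-pass triage for an internal AI inventory.
# The area labels are illustrative examples, not the Act's legal categories.
PROHIBITED_EXAMPLES = {"social_scoring"}
HIGH_RISK_EXAMPLES = {"employment", "credit_scoring", "education", "critical_infrastructure"}


@dataclass
class AISystem:
    name: str
    use_area: str                        # illustrative label, e.g. "employment"
    provides_gpai_model: bool = False    # do we develop and place a GPAI model on the market?
    interacts_with_people: bool = False  # e.g. a customer-facing chatbot


def triage(system: AISystem) -> list[str]:
    """Return review flags for one system; an empty result still needs sign-off."""
    flags = []
    if system.use_area in PROHIBITED_EXAMPLES:
        flags.append("possible prohibited practice: escalate to legal now")
    if system.use_area in HIGH_RISK_EXAMPLES:
        flags.append("possible high-risk system: review high-risk obligations")
    if system.provides_gpai_model:
        flags.append("GPAI provider: documentation, training summary, copyright policy")
    if system.interacts_with_people:
        flags.append("transparency: disclose that users are interacting with AI")
    return flags


if __name__ == "__main__":
    inventory = [
        AISystem("cv-screener", "employment"),
        AISystem("support-bot", "customer_service", interacts_with_people=True),
        AISystem("sales-forecast", "internal_analytics"),
    ]
    for system in inventory:
        print(system.name, triage(system) or ["no flags: record the rationale"])
```

Keeping the triage logic this coarse is deliberate: its job is to route each system to the right reviewer, not to decide its legal status.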

Finally, business leaders should view compliance not as a static checkbox but as part of building a broader culture of trustworthy AI. The EU AI Act has put ethics and human rights at the center of AI governance. Companies that align with these values, prioritizing user safety, fairness, and accountability in AI, stand to strengthen their brand reputation. Conversely, a failure to comply or a high-profile AI incident (such as a biased outcome or safety failure) could invite not only regulatory penalties (up to €35 million or 7% of global annual turnover, whichever is higher, for the worst violations) but also public backlash. In the coming years, investors, customers, and partners are likely to favor businesses that can demonstrate their AI is well-governed and compliant. By taking the steps above, organizations can mitigate legal risk, avoid last-minute fire drills as deadlines loom, and position themselves as leaders in the emerging era of AI regulation.
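The penalty ceiling mentioned above is a simple calculation. Under Article 99, each tier pairs a fixed amount with a percentage of total worldwide annual turnover for the preceding financial year; the cap is whichever is higher, except for SMEs and start-ups, where it is whichever is lower. This Python sketch computes only the statutory maximum for three tiers (prohibited practices, most other obligations, and supplying incorrect or misleading information to authorities). The tier names are our own labels, fines on GPAI providers fall under a separate article and are not modelled, and actual fines are set case by case.

```python
# Statutory maximum fines under Article 99 of the AI Act (sketch).
# Tier names are our own labels; each tier is (fixed cap in EUR, % of turnover).
TIERS = {
    "prohibited_practice": (35_000_000, 7),     # Article 99(3)
    "other_obligation": (15_000_000, 3),        # Article 99(4)
    "incorrect_information": (7_500_000, 1),    # Article 99(5)
}


def max_fine_eur(tier: str, worldwide_turnover_eur: float, is_sme: bool = False) -> float:
    """Upper bound of the fine: higher of the two caps, or lower for SMEs/start-ups."""
    fixed_cap, percent = TIERS[tier]
    turnover_cap = worldwide_turnover_eur * percent / 100
    return min(fixed_cap, turnover_cap) if is_sme else max(fixed_cap, turnover_cap)


if __name__ == "__main__":
    # Hypothetical companies: a large group and a small start-up.
    print(max_fine_eur("prohibited_practice", 2_000_000_000))            # 140000000.0
    print(max_fine_eur("prohibited_practice", 10_000_000, is_sme=True))  # 700000.0
```

For a company with €2 billion in worldwide turnover, 7% (€140 million) exceeds the €35 million fixed amount, so the turnover-based figure sets the ceiling; for a start-up with €10 million turnover, the lower figure (€700,000) applies.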

TTMS AI Solutions – Automate With Confidence

As the EU AI Act moves from paper to practice, organizations need practical tools that balance compliance, performance, and speed. Transition Technologies MS (TTMS) delivers enterprise-grade AI solutions that are secure, scalable, and tailored to real business workflows.

- AI4Legal – Automation for legal teams: accelerate document review, drafting, and case summarization while maintaining traceability and control.

- AI4Content – Document analysis at scale: process and synthesize reports, forms, and transcripts into structured, decision-ready outputs.

- AI4E-Learning – Training content, faster: transform internal materials into modular courses with quizzes, instructors’ notes, and easy editing.

- AI4Knowledge – Find answers, not files: a central knowledge hub with natural-language search to cut time spent hunting for procedures and know-how.

- AI4Localisation – Multilingual at enterprise pace: context-aware translations tuned for tone, terminology, and brand consistency across markets.

- AML Track – Automated AML compliance: streamline KYC, PEP and sanctions screening, ongoing monitoring, and audit-ready reporting in one platform.

Our experts partner with your teams end-to-end – from scoping and governance to integration and change management – so you get measurable impact, not just another tool.

Frequently Asked Questions (FAQs)

When will the EU AI Act be fully enforced, and what are the key dates?

The EU AI Act is being phased in over several years. It entered into force on August 1, 2024, but its requirements activate at different milestones. The ban on certain unacceptable AI practices (like social scoring and manipulative AI) applied from February 2, 2025. From August 2, 2025, rules for general-purpose AI models (foundation models) apply, and any such model placed on the market from that date must comply. Most other provisions, including obligations for many high-risk AI systems, apply from August 2, 2026 (two years after entry into force). A further deadline falls on August 2, 2027: providers of general-purpose AI models that were already on the market before August 2, 2025 must bring them into compliance, and high-risk AI embedded in products covered by EU product-safety legislation (such as medical devices) must meet the Act’s requirements. In short, the period from mid-2025 through 2027 is when the AI Act’s requirements gradually turn from theory into practice.

What is the Code of Practice for General-Purpose AI, and do companies have to sign it?

The Code of Practice for GPAI is a voluntary set of guidelines designed to help AI model providers comply with the EU AI Act’s rules on general-purpose AI (like large language models or generative AI systems). It covers best practices for transparency (documenting how the AI was developed and its limitations), copyright (ensuring respect for intellectual property in training data), and safety/security (testing and mitigating risks from the most powerful models, those posing systemic risk). Companies do not have to sign the Code; it’s optional, but there’s a big incentive to do so. Until harmonized standards are published, adhering to the Code lets you demonstrate that you meet the AI Act’s GPAI requirements (often described as a “presumption of conformity”), which gives you legal reassurance. Many major AI firms have signed on already. However, if a company chooses not to follow the Code, it must independently demonstrate compliance through other means. In summary, the Code isn’t mandatory, but it’s a highly recommended shortcut to compliance for those who develop general-purpose AI.

How will the European AI Office enforce the AI Act, and what powers does it have?

The European AI Office is a new EU-level regulator set up to ensure the AI Act is applied consistently across all member states. Think of it as Europe’s central AI “watchdog.” The AI Office has several important enforcement powers: it can request detailed information and technical documentation from companies about their AI systems, conduct evaluations and tests on AI models (especially the big general-purpose models) to check for compliance, and coordinate investigations if an AI system is suspected to violate the rules. While day-to-day enforcement (like market checks or handling complaints) will still involve national authorities in each EU country, the AI Office guides and unifies these efforts, much like the European Data Protection Board does for privacy law. The AI Office can also help initiate penalties, and fines under the AI Act can be steep: up to €35 million or 7% of global annual turnover, whichever is higher, for the most serious breaches such as the use of prohibited AI practices. In essence, the AI Office will be the go-to authority at the EU level: drafting guidance, managing the Code of Practice, and making sure companies don’t fall through the cracks of different national regulators.

Does the EU AI Act affect non-EU companies, such as American or Asian firms?

Yes. The AI Act has an extraterritorial scope very similar to the EU’s GDPR. If a company outside Europe is providing an AI system or service that is used in the EU or affects people in the EU, that company is expected to comply with the AI Act for those activities. It doesn’t matter where the company is headquartered or where the AI model was developed – what matters is the impact on the European market or users. For instance, if a U.S. tech company offers a generative AI tool to EU customers, or an Asian manufacturer sells a robot with AI capabilities into Europe, they fall under the Act’s provisions. Non-EU firms might need to appoint an EU representative (a local point of contact) for regulatory purposes, and they will face the same obligations (and potential fines) as European companies for non-compliance. In short, if your AI touches Europe, assume the EU AI Act applies.

How should businesses start preparing for EU AI Act compliance now?

To prepare, businesses should take a multi-pronged approach: First, educate your leadership and product teams about the AI Act’s requirements and identify which of your AI systems are impacted. Next, conduct a gap analysis or audit of those systems: do you have the necessary documentation, risk controls, and transparency measures in place? If not, start implementing them. It’s wise to establish an internal AI governance program, bringing together legal, technical, and operational stakeholders to oversee compliance. For companies building AI models, consider following the EU’s Code of Practice for GPAI as a framework. Also, update contracts and supply chain checks to ensure that any AI tech you procure from vendors meets EU standards (you may need assurances or compliance clauses from your providers). Finally, stay agile: keep track of new guidelines from the European AI Office or any standardization efforts, as these will further clarify what regulators expect. By acting early, well ahead of the remaining August 2026 and August 2027 deadlines, businesses can avoid last-minute scrambles and use compliance as an opportunity to bolster trust in their AI offerings.