Unlocking ChatGPT 5 Modes: How Auto, Fast, Thinking, and Pro Really Work

Most of us use ChatGPT on autopilot – we type a question and wait for the AI to answer, without ever wondering if there are different modes to choose from. Yet these modes do exist, though they’re a bit tucked away in the interface and less visible than they once were. You can find them in the model picker, usually under options like Auto, Fast, Thinking, or Pro, and they each change how the AI works. But is it really worth exploring them? And how do they impact speed, accuracy, and even cost? That’s exactly what we’ll uncover in this article.

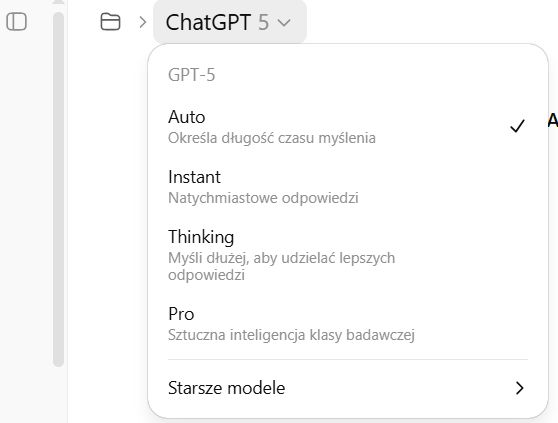

ChatGPT 5 introduces several modes of operation – Auto, Fast (sometimes called Instant), Thinking, and Pro – as well as access to older model versions. If you’re wondering what each of these modes does, when to switch between them (if at all), and how they differ in speed, quality, and cost, this comprehensive guide will clarify everything. We’ll also discuss which modes are best suited for everyday users versus business or professional users.

Each mode in GPT-5 is designed for a different balance of speed and reasoning depth. Below, we answer the key questions about these modes in a Q&A format, so you can quickly find the information you need.

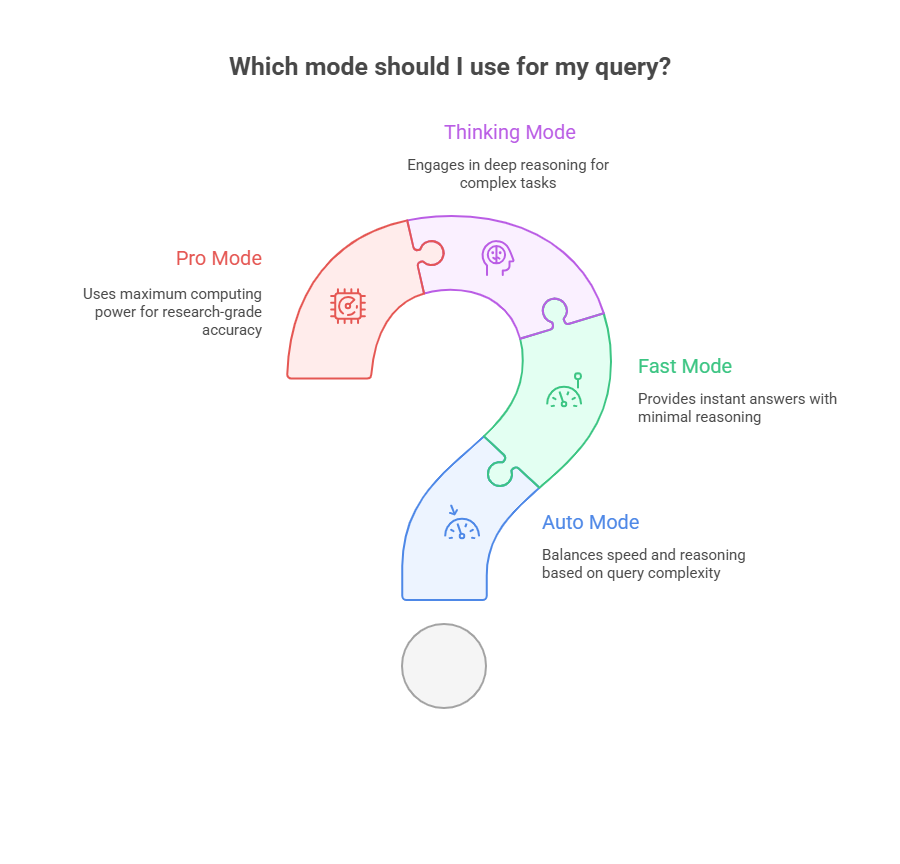

1. What are the new modes in ChatGPT 5 and why do they exist?

ChatGPT 5 (GPT-5) has transformed the old model selection into a unified system with four mode options: Auto, Fast, Thinking, and Pro. These modes exist to let the AI adjust how much “thinking” (computational effort and reasoning time) it should use for a given query:

- Auto Mode: This is the default unified mode. GPT-5 automatically decides whether to respond quickly or engage deeper reasoning based on your question’s complexity.

- Fast Mode: A mode for instant answers – GPT-5 responds very quickly with minimal extra reasoning. (This is essentially GPT-5’s standard mode for everyday queries.)

- Thinking Mode: A deep reasoning mode – GPT-5 will take longer to formulate an answer, performing more analysis and step-by-step reasoning for complex tasks.

- Pro Mode: A “research-grade” mode – the most advanced and thorough option. GPT-5 will use maximum computing power (even running parts of the task in parallel) to produce the most accurate and detailed answer possible.

These modes were introduced because GPT-5 is capable of dynamically adjusting its reasoning. In previous versions like GPT-4, users had to manually pick between different models (e.g. standard vs. advanced reasoning models). Now GPT-5 consolidates that into one system with modes, making it easier to get the right balance of speed vs. depth without constantly switching models. The Auto mode in particular means most users can just ask questions normally and let ChatGPT decide if a quick answer will do or if it should “think longer” for a better result.

2. How does ChatGPT 5’s Auto mode work?

The Auto mode is the intelligent default that makes GPT-5 decide on the fly how much reasoning is needed. When you have GPT-5 set to Auto, it will typically answer straightforward questions using the Fast approach for speed. If you ask a more complex or multi-step question, the system can automatically invoke the Thinking mode behind the scenes to give a more carefully reasoned answer.

In practice, Auto mode means you don’t have to manually select a model for most situations. GPT-5’s internal “router” analyzes your prompt and chooses the appropriate strategy:

- For a simple prompt (like “Summarize this paragraph” or “What’s the capital of France?”), GPT-5 will likely respond almost immediately (using the Fast response mode).

- For a complex prompt (like “Analyze this financial report and give insights” or a tricky coding/debugging question), GPT-5 may “think” for a bit longer before answering. You might notice a brief indication that it’s reasoning more deeply. This is GPT-5 automatically switching into its Thinking mode to ensure it works through the problem.
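OpenAI hasn't published how GPT-5's router scores prompts, so the following is only a toy illustration of the routing idea described above. A few keyword and length signals decide between a fast reply and deeper reasoning. The hint list, the 150-word threshold, and the `route` function are all hypothetical; the real router is far more sophisticated than a keyword check.

```python
from dataclasses import dataclass

# Hypothetical complexity signals; OpenAI has not published how its router works.
COMPLEX_HINTS = ("analyze", "debug", "prove", "step by step",
                 "implications", "think carefully", "traceback")

@dataclass
class RouteDecision:
    mode: str   # "fast" or "thinking"
    score: int  # number of complexity signals that fired

def route(prompt: str, long_prompt_words: int = 150) -> RouteDecision:
    """Toy router: escalate to 'thinking' when any complexity signal fires."""
    text = prompt.lower()
    score = sum(hint in text for hint in COMPLEX_HINTS)
    if len(text.split()) > long_prompt_words:
        score += 1  # very long prompts often bundle multi-step tasks
    return RouteDecision(mode="thinking" if score > 0 else "fast", score=score)

if __name__ == "__main__":
    for p in ("What's the capital of France?",
              "Analyze this financial report and give insights"):
        print(route(p))
```

Note how "think carefully" is one of the signals: it mirrors the tip below about asking GPT-5 to take its time.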

Auto mode is ideal for most users because it delivers the best of both worlds: quick answers when possible, and more thorough answers when necessary. You can always override it by manually picking Fast or Thinking, but Auto means less guesswork – the AI itself decides how long to think. If you ever explicitly want it to take its time, you can even tell GPT-5 in your prompt to “think carefully about this,” which encourages the system to engage deeper reasoning.

Tip: When GPT-5 Auto decides to think longer, the interface will indicate it. You usually have an option to “Get a quick answer” if you don’t want to wait for the full reasoning. This allows you to interrupt the deep thinking and force a faster (but potentially less detailed) reply, giving you control even in Auto mode.

3. What is the Fast (Instant) mode in GPT-5 used for?

The Fast mode (labeled “Fast – instant answers” in the ChatGPT model picker) is designed for speedy responses. In Fast mode, GPT-5 will generate an answer as quickly as possible without dedicating extra time to extensive reasoning. Essentially, this is GPT-5’s standard mode for everyday tasks that don’t require heavy analysis.

When to use Fast mode:

- Simple or routine queries: If you’re asking something straightforward (factual questions, brief explanations, casual conversation), Fast mode will give you an answer within a few seconds.

- Brainstorming and creative prompts: Need a quick list of ideas or a first draft of a tweet/blog? Fast mode is usually sufficient and time-efficient.

- General coding help: For small coding questions or debugging minor errors, Fast mode can provide answers quickly. GPT-5’s base capability is already high, so for many coding tasks you might not need the extra reasoning.

- Everyday business tasks: Writing an email, summarizing a document, or responding to a common customer query: Fast mode handles these with speed and improved accuracy (OpenAI reports that GPT-5 makes fewer factual errors than GPT-4o, even in its fast responses).

In Fast mode, GPT-5 is still quite powerful and more reliable than older GPT-4 models for common tasks. It’s also cost-efficient (lower compute usage means fewer tokens consumed, which matters if you have usage limits or are paying per token via the API). The trade-off is that it might not catch extremely subtle details or perform multi-step reasoning as well as the Thinking mode would. However, for the vast majority of prompts that are not highly complex, Fast mode’s answers are both quick and accurate. This is why Fast (or “Standard”) mode serves as the backbone for day-to-day interactions with ChatGPT 5.

4. When should you use the GPT-5 Thinking mode?

GPT-5’s Thinking mode is meant for situations where you need extra accuracy, depth, or complex problem-solving. When you manually switch to Thinking mode, ChatGPT will deliberately take more time (and tokens) to work through your query step by step, almost like an expert “thinking out loud” internally before giving you a result. You should use Thinking mode for tasks where a quick off-the-cuff answer might not be good enough.

Use GPT-5 Thinking mode when:

- The problem is complex or multi-step: If you ask a tough math word problem, a complex programming challenge, or an analytical question (e.g. “What are the implications of this scientific study’s results?”), Thinking mode will yield a more structured and correct solution. It’s designed to handle advanced reasoning tasks like these with higher accuracy.

- Precision matters: For example, drafting a legal clause, analyzing financial data for trends, or writing a medical report summary. In such cases, mistakes can be costly, so you want the AI to be as careful as possible. Thinking mode reduces the chance of errors and hallucinations even further by allocating more computation to verify facts and logic.

- Technical or detailed writing: If you need longer, well-thought-out content – such as an in-depth explanation of a concept, thorough documentation, or a step-by-step guide – the Thinking mode can produce a more comprehensive answer. It’s like giving the model extra time to gather its thoughts and double-check itself before responding.

- Coding complex projects: For debugging a large codebase, solving a tricky algorithm, or generating non-trivial code (like a full module or a complex function), Thinking mode performs significantly better. It’s been observed to greatly improve coding accuracy and can handle more elaborate tasks like multi-language code coordination or intricate logic that Fast mode might get wrong.

Trade-offs: In Thinking mode, responses are slower. You might wait somewhere on the order of 10-30 seconds (depending on the complexity of your request) for an answer, instead of the usual 2-5 seconds in Fast mode. It also uses more tokens and computing resources, meaning it’s more expensive to run. If you’re on ChatGPT Plus, there are even usage limits for how many Thinking-mode messages you can send per week (because each such response is heavy on the system). However, those downsides are often justified when the question is important enough. The mode can deliver dramatically improved accuracy – for example, internal OpenAI benchmarks showed huge jumps in performance (several-fold improvements on certain expert tasks) when GPT-5 is allowed to think longer.

In summary, switch to Thinking mode for high-stakes or highly complex prompts where you want the best possible answer and you’re willing to wait a bit longer for it. For everyday quick queries, it’s not necessary – the default fast responses will do. Many Plus users might use Thinking mode sparingly for those tough questions, while relying on Auto/Fast for everything else.

5. What does GPT-5 Pro mode offer, and who really needs it?

GPT-5 Pro mode is the most advanced and resource-intensive mode available in ChatGPT 5. It’s often described as “research-grade intelligence.” This mode is only available to users on the highest-tier plans (ChatGPT Pro or ChatGPT Business plans) and is intended for enterprise-level or critical tasks that demand maximum accuracy and thoroughness. Here’s what Pro mode offers and who benefits from it:

- Maximum accuracy through parallel reasoning: GPT-5 Pro doesn’t just think longer; it also can think more broadly. Under the hood, Pro mode can run multiple reasoning threads in parallel (imagine consulting an entire panel of AI experts simultaneously) and then synthesize the best answer. This leads to even more refined responses with fewer mistakes. In testing, GPT-5 Pro set new records on difficult academic and professional benchmarks, outperforming the standard Thinking mode in many cases.

- Use cases for Pro: This mode shines in high-stakes, mission-critical scenarios:

- Scientific research and healthcare: e.g. analyzing complex biomedical data, discovering drug candidates, or interpreting medical imaging results (where absolute precision is vital).

- Finance and legal: e.g. risk modeling, auditing complex financial portfolios, generating or reviewing legal contracts with extreme accuracy – tasks where an error could cost a lot of money or have legal implications.

- Large-scale enterprise analytics: e.g. processing lengthy confidential reports, performing deep market analysis, or powering a virtual assistant that needs to reliably handle very complex queries from users.

- AI development: If you’re a developer building AI-driven applications (like agents that plan and act autonomously), GPT-5 Pro provides the most consistent reasoning depth and reliability for those advanced applications.

- Who needs Pro: Generally, businesses and professionals with intensive needs. For a casual user or even most power-users, the standard GPT-5 (and occasional Thinking mode) is usually enough. Pro mode is targeted at enterprise users, research institutions, or AI enthusiasts who require that extra edge in performance – and are willing to pay a premium for it.
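To make the "panel of experts" idea concrete, here is a minimal sketch of one generic technique with that shape: majority voting over independent attempts run in parallel (often called self-consistency). OpenAI has not disclosed how Pro mode actually combines its parallel reasoning, so treat this as an analogy only; `noisy_solver` is a hypothetical stand-in for a single reasoning attempt.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

def parallel_vote(solve: Callable[[int], str], n_threads: int = 5) -> tuple[str, float]:
    """Run n independent attempts in parallel and return the majority answer.

    `solve` is a hypothetical stand-in for one reasoning attempt; the int it
    receives is just an attempt index (for example, a random seed).
    """
    with ThreadPoolExecutor(max_workers=n_threads) as pool:
        answers = list(pool.map(solve, range(n_threads)))
    winner, votes = Counter(answers).most_common(1)[0]
    return winner, votes / n_threads  # answer plus the share of attempts agreeing

if __name__ == "__main__":
    # Stub solver: attempt 3 "makes a mistake"; the other four agree.
    def noisy_solver(attempt: int) -> str:
        return "41" if attempt == 3 else "42"

    print(parallel_vote(noisy_solver))  # ('42', 0.8)
```

Voting only helps when attempts fail in different ways; if every attempt makes the same mistake, the majority is still wrong, and each extra attempt adds compute cost.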

Drawbacks of Pro mode: The word "Pro" implies it's not for everyone. First, it's expensive, both in subscription cost and in computational cost. As of 2025, a ChatGPT Pro subscription costs around $200 per month, far more than the standard Plus plan, and that buys you access to this powerful mode without the normal usage caps. Each Pro mode response also consumes a lot of compute (and tokens), which makes it the priciest option per request. Second, speed: Pro mode is the slowest to respond. Because it's doing so much work under the hood, you might wait 20-40 seconds or more for a single answer, which can feel lengthy in interactive chat. Lastly, Pro mode currently has some feature limitations (for instance, certain ChatGPT tools like image generation or the canvas feature may not be enabled with GPT-5 Pro, due to its specialized nature).

Bottom line: GPT-5 Pro is a potent tool if you truly need the highest level of AI reasoning and are in an environment where accuracy outweighs all other concerns (and cost is justified by the value of the results). It's likely overkill for everyday needs. Most users, even many developers, won't need Pro mode regularly. It's more for organizations or individuals tackling problems where even a modest improvement in quality is worth the extra expense and time.

6. How do the modes differ in speed and answer quality?

Each mode in ChatGPT 5 strikes a different balance between speed and the depth/quality of the answer:

- Fast mode is the quickest: It typically responds within a couple of seconds for a prompt. The answers are high-quality for normal questions (much better than older GPT-3.5 or even GPT-4 in many cases), but Fast mode will not always catch very subtle nuances or deeply reason through complicated instructions. Think of Fast mode answers as “good enough and very fast” for general purposes.

- Thinking mode is slower but more thorough: When GPT-5 Thinking is engaged, response times slow down (often 10-30 seconds depending on complexity). The quality of the answers, however, is more robust and detailed. GPT-5 Thinking will handle multi-step reasoning tasks significantly better. For example, if a Fast mode answer might occasionally miscalculate or simplify a complex answer, the Thinking mode is far more likely to get it correct and provide justification or step-by-step details in its response. In terms of quality, you can expect far fewer factual errors or “hallucinations” in Thinking mode responses, since the AI took extra time to verify and cross-check its answer internally.

- Pro mode is the most meticulous (and slowest): GPT-5 Pro will take even more time than Thinking mode for a response, as it uses maximum compute. It might explore several potential solutions internally before finalizing an answer, which maximizes the quality and correctness. The answers from Pro mode are usually the most detailed, well-structured, and accurate. You might notice they contain deeper insights or handle edge cases that the other modes might miss. The trade-off is that Pro mode responses can easily take half a minute or more, and you wouldn’t use it unless you truly need that level of depth.

In summary:

- Speed: Fast > Thinking > Pro (Fast is fastest, Pro is slowest).

- Answer depth/quality: Pro > Thinking > Fast (Pro gives the most advanced answers, Fast gives concise answers).

- Everyday effectiveness: For most simple queries, all modes will do fine; you won’t necessarily notice a quality difference on an easy question. The differences become apparent on challenging tasks. Fast mode might give a decent but not perfect answer, Thinking mode will give a correct and well-explained answer, and Pro mode will give an exceptionally detailed answer with minimal chance of error.

It’s also worth noting that GPT-5’s base quality (even in Fast mode) is a leap over previous generations. Many users find that even quick answers from GPT-5 are more accurate and nuanced than what GPT-4 produced. So speed doesn’t degrade quality as much as you might think for typical questions – it mainly matters when the question is particularly difficult.

7. Do different GPT-5 modes use more tokens or cost more to use?

Yes, the modes do differ in terms of token usage and cost, though it might not be obvious at first glance. The general rule is: the more thinking a mode does, the more tokens and cost it will incur. Here’s how it breaks down:

- Fast mode (Standard GPT-5): This mode is the most token-efficient. It answers quickly without extended internal reasoning, so it uses little more than the tokens needed for the answer itself. On a ChatGPT subscription there's no direct per-message cost beyond your plan, and Fast-mode messages count against the general GPT-5 allowance rather than the separate, tighter Thinking quota. In the API, you pay per token, with input and output tokens priced separately (at launch, the gpt-5 model was listed at $1.25 per million input tokens and $10 per million output tokens), so a response that skips extended reasoning generates the fewest billable tokens.

- Thinking mode: This mode is resource-intensive, meaning it uses more tokens internally to reason through the problem. When GPT-5 "thinks," it generates extra reasoning tokens behind the scenes; these don't all show up in the answer, but they still count toward computation. In the API, reasoning tokens are billed as output tokens, so a deep answer costs more mainly because it produces many more tokens, not because each token is priced higher. In ChatGPT Plus, Thinking mode is rate-limited: Plus users can only send a certain number of manually selected Thinking-mode messages per week, because each one is expensive to run on the server. In practical terms, a deep Thinking answer "costs" much more of your usage allowance than a quick answer does.

- Pro mode: Pro mode is the most expensive per use. It does far more work internally than the other modes and often produces longer answers. This is why Pro mode is reserved for the highest-paying tier: it would be infeasible to offer unlimited Pro responses at a low price point. With a Pro subscription or enterprise access, you effectively have no hard limit on GPT-5 usage, but you pay for it through the hefty monthly fee instead. If you were using an API equivalent, Pro would be quite costly per 1,000 tokens. The upside is that because Pro is so accurate, you might in theory save money by not having to repeat queries or fix mistakes, but that only matters if you're using GPT-5 for high-value tasks.
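The arithmetic behind "more thinking costs more" fits in a few lines. This sketch assumes the API rule that reasoning tokens are billed at the output-token rate, and uses $1.25 per million input tokens and $10 per million output tokens (the gpt-5 launch list prices; check OpenAI's pricing page for current rates). The token counts in the example are made-up illustrative values, not measurements, and `request_cost` is a hypothetical helper.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Pricing:
    input_per_million: float   # USD per 1M input tokens
    output_per_million: float  # USD per 1M output tokens

def request_cost(p: Pricing, input_tokens: int, visible_output_tokens: int,
                 reasoning_tokens: int = 0) -> float:
    """Estimate one request's cost; reasoning tokens are billed as output tokens."""
    billed_output = visible_output_tokens + reasoning_tokens
    return (input_tokens * p.input_per_million
            + billed_output * p.output_per_million) / 1_000_000

# Launch list prices for the API model "gpt-5" (verify before relying on them).
GPT5 = Pricing(input_per_million=1.25, output_per_million=10.0)

if __name__ == "__main__":
    # Illustrative token counts only.
    quick = request_cost(GPT5, input_tokens=1_000, visible_output_tokens=500)
    deep = request_cost(GPT5, input_tokens=1_000, visible_output_tokens=800,
                        reasoning_tokens=6_000)
    print(f"quick: ${quick:.4f}  deep: ${deep:.4f}  ratio: {deep / quick:.1f}x")
```

With these illustrative numbers the deep answer costs roughly 11 times as much as the quick one even though the per-token price is identical; the difference comes entirely from the extra reasoning and output tokens.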

In terms of token usage in answers, deeper modes often yield longer, more detailed replies (especially if the task warrants it). That means more output tokens. Also, they reduce the chance you’ll need to ask follow-up questions or clarifications (which themselves would consume more tokens), which is another way they can be “cost-effective” despite higher per-message cost. But if you’re on the free plan or Plus, the main thing to know is that the heavy modes will hit your usage limits faster:

- Free users get only a limited number of GPT-5 messages and just one Thinking-mode message per day, because Thinking uses a lot of resources.

- Plus users get more (currently around 160 messages per 3 hours for GPT-5, and up to 3,000 Thinking messages per week maximum). If a Plus user sticks to Fast/Auto primarily, they can get a lot of answers within those caps; if they use Thinking for every query, they’ll hit weekly limits much sooner.

- Pro/Business users have “unlimited” use, but that comes at the high subscription cost.
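Caps like "160 messages per 3 hours" behave like a rolling window. Below is a minimal sketch of such a counter, assuming a sliding window (OpenAI doesn't document the exact mechanism); `RollingQuota` is a hypothetical helper you might use to track your own usage in a script, not part of any OpenAI tool.

```python
from collections import deque

class RollingQuota:
    """Count messages in a rolling time window (e.g. 160 per 3 hours).

    Assumption: the cap is enforced as a sliding window; OpenAI does not
    document the exact mechanism.
    """

    def __init__(self, limit: int, window_seconds: float):
        self.limit = limit
        self.window = window_seconds
        self.sent: deque[float] = deque()  # timestamps of accepted messages

    def try_send(self, now: float) -> bool:
        # Drop timestamps that have aged out of the window.
        while self.sent and now - self.sent[0] >= self.window:
            self.sent.popleft()
        if len(self.sent) < self.limit:
            self.sent.append(now)
            return True
        return False  # over the cap: wait, or fall back to a smaller model

if __name__ == "__main__":
    quota = RollingQuota(limit=160, window_seconds=3 * 3600)
    accepted = sum(quota.try_send(now=float(i)) for i in range(200))
    print(accepted)  # 160
```

When `try_send` returns `False`, the ChatGPT equivalent is either waiting for older messages to age out or being moved to a lighter model until the window frees up.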

So, in conclusion, each mode does “cost” differently: Fast mode is cheapest and most token-efficient, Thinking mode costs several times more per question, and Pro is premium priced. If you’re concerned about token usage (say, for API billing or hitting message caps), use the heavier modes only when needed. Otherwise, the Auto mode will handle it for you, using extra tokens only when it determines the value of a better answer is worth the cost.

8. Should you manually switch modes or let ChatGPT decide automatically?

For most users, letting GPT-5 Auto mode handle it is the simplest and often the best approach. The auto-switching system was built to spare you from micromanaging the model’s behavior. By default, GPT-5 will not waste time “overthinking” an easy question, and similarly it won’t give you a shallow answer to a really complex prompt – it will adjust as needed. That said, there are scenarios where manually choosing a mode makes sense:

- When you know you need a deep analysis: If you’re about to ask something very complex and you want to ensure the highest accuracy (and you have access to Thinking mode), you might manually switch to Thinking mode before asking. This guarantees GPT-5 spends maximum effort, rather than waiting to see if it might decide to do so. For example, a data scientist preparing a detailed report might directly use Thinking mode for each query to get thorough answers.

- When you’re in a hurry for a simple answer: If GPT-5 (Auto) starts “Thinking…” but you actually just want a quick answer or a brainstorm, you can click “Get a quick answer” or simply switch to Fast mode for that question. Sometimes the AI might be overly cautious and begin deep reasoning when you didn’t need it – in those cases, forcing Fast mode will save you time.

- When conserving usage: If you’re on a limited plan and near your cap, you might stick to Fast mode to maximize the number of questions you can ask, since Thinking mode would burn through your quota faster. Conversely, if you have plenty of headroom and need a top-notch answer, you can use Thinking mode more liberally.

- Using Pro mode deliberately: If you’re one of the users with Pro access, you’ll likely switch to Pro mode only for the most critical queries. It doesn’t make sense to use Pro for every single chat message due to the slower speed – better to reserve it for when you have a genuinely high-value question that justifies it.

In short, Auto mode is usually sufficient and is the recommended default for both casual and many professional interactions. You only need to manually switch modes in special cases: either to force extra rigor or to force extra speed. Think of manual mode switching as an override for the AI’s decisions. The system’s pretty good at picking the right mode on its own, but you remain in control if you disagree with its choice.
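For developers, the same "override" idea has an API counterpart: GPT-5 in the API exposes a reasoning effort setting instead of a mode picker. The sketch below only builds a Responses API request body and makes no network call. It assumes the effort values `minimal` and `high` roughly correspond to Fast and Thinking, which is our own mapping rather than an official one, and `build_request` is a hypothetical helper. The API has no ChatGPT-style router, so "auto" here simply leaves the default effort in place.

```python
import json

# Assumed mapping from ChatGPT's picker labels to the API's reasoning effort
# setting; OpenAI does not publish an official one-to-one mapping.
MODE_TO_EFFORT = {
    "fast": "minimal",
    "thinking": "high",
}

def build_request(prompt: str, mode: str = "auto") -> dict:
    """Build a Responses API-style request body for the model "gpt-5".

    "auto" omits the reasoning setting, leaving the API's default effort.
    """
    body = {"model": "gpt-5", "input": prompt}
    if mode != "auto":
        if mode not in MODE_TO_EFFORT:
            raise ValueError(f"unknown mode: {mode!r}")
        body["reasoning"] = {"effort": MODE_TO_EFFORT[mode]}
    return body

if __name__ == "__main__":
    print(json.dumps(build_request("Summarize this paragraph.", mode="fast")))
```

You would pass these fields to the official SDK or an HTTP client. Keeping the mapping in one dictionary makes it easy to adjust if your own tests suggest a different effort level suits your workload.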

9. Are older models like GPT-4 still available in ChatGPT 5?

Yes, older models are still accessible in the ChatGPT interface under a “Legacy models” section – but you may not need to use them often. With the rollout of GPT-5:

- GPT-4 (often labeled GPT-4o or other variants) is available to paid users as a legacy option. If you have a Plus, Business, or Pro account, you can find GPT-4 in the model picker under legacy models. This is mainly provided for compatibility or specific use cases where someone might want to compare answers or use an older model on prior conversations.

- Additionally, OpenAI has kept access to some other models (such as GPT-4.1, GPT-4.5, and the reasoning models o3 and o4-mini) for certain subscription tiers, but these are hidden unless you enable "Show additional models" in your settings. Plus users, for example, can see a few of those, while Pro users can see slightly more (like GPT-4.5).

- By default, if you don't specifically switch to an older model, all your chats will use GPT-5 (Auto mode). And if you open an old chat that was originally with GPT-4, the system may automatically load it with the GPT-5 equivalent to continue the conversation. So OpenAI has tried to transition seamlessly such that GPT-5 handles most things going forward.

Do you need the older models? For the majority of cases, no. GPT-5’s Standard/Fast mode is intended to replace GPT-4 for everyday use, and it’s better at almost everything. There might be a rare instance where an older model had a particular style or a specific capability you want to replicate – then you could switch to it. But generally, GPT-5’s intelligence and the Auto mode’s adaptability mean you won’t often have to manually use GPT-4 or others. In fact, some of the older GPT-4 variants might be slower or have lower context length compared to GPT-5, so unless you have a compatibility reason, it’s best to let GPT-5 take over.

One thing to note: if you exceed your GPT-5 usage limit (especially on the free tier), ChatGPT automatically falls back to a smaller "GPT-5 mini" model until your limit resets. This is done behind the scenes so that free users always get some service. The interface may not make the switch obvious, but you may notice a difference in answer quality. Paid users, with much higher limits, are far less likely to run into this fallback.

In summary, older models are there if you need them, but GPT-5’s modes are now the main focus and cover almost all use cases that older models did – typically with better results.

10. Which GPT-5 mode is best for business users versus general users?

The choice of mode can depend on who you are and what you’re trying to accomplish. Let’s break it down for individual (general) users and business users or professionals:

- General Users / Individuals: If you’re an everyday user (for personal projects, learning, or casual use), you’ll likely be perfectly satisfied with the default GPT-5 Auto mode, using Fast responses most of the time and occasionally letting it dip into Thinking mode when you ask a harder question. A ChatGPT Plus subscription might be worthwhile if you use it very frequently, since it gives you more GPT-5 usage and access to manual Thinking mode when you need it. However, you probably do not need GPT-5 Pro mode. The Pro tier is expensive and geared toward unlimited heavy use, which average users don’t usually require. In short, general users should stick with the standard GPT-5 (Auto/Fast) for speed and ease, and use Thinking mode for those few cases where you want a deep dive answer. This will keep your costs low (or your Plus subscription fully sufficient) while still giving you excellent results.

- Business Users / Professionals: For business purposes, the stakes and scale often increase. If you run a business integrating ChatGPT, or you’re using it in a professional setting (for instance, to assist with your work in finance, law, engineering, customer service, etc.), you need to consider accuracy and reliability carefully:

- Small Business or Plus for Professionals: Many professional users will find that a Plus account with GPT-5’s Thinking mode available is enough. You can manually invoke Thinking mode for those complex tasks like data analysis or report generation, ensuring high quality when needed, while keeping most interactions quick and efficient in standard mode. This approach is cost-effective and likely sufficient unless your domain is extremely sensitive.

- Enterprises or High-Stakes Use: If you're an enterprise user or your work involves critical decision-making (say, a medical AI tool, or a financial firm doing big analyses), GPT-5 Pro might be worth the investment. Businesses benefit from Pro mode's extra accuracy and from the unlimited usage it offers. There's no worry about hitting message caps, which is important if you have many employees or customers interacting with the system. Moreover, the Pro plan comes with a larger context window (according to OpenAI, up to 128K tokens in Fast mode and roughly 196K tokens in Thinking mode), which allows analysis of very large documents or datasets in one go, a huge plus for enterprise use cases.

- Cost-Benefit: Businesses should weigh the cost of the Pro subscription (or Business plan) against the value of the improved outputs. If a single mistake avoided by Pro mode could save your company thousands of dollars, then using Pro mode is justified. On the other hand, if your use of AI is more routine (like answering common customer questions or writing marketing content), the standard GPT-5 might already be more than capable, and a Plus plan at a fraction of the cost will do the job.

In summary, for general users: stick with Auto/Fast, use Thinking sparingly, and you likely don’t need Pro. For business users: start with GPT-5’s standard and Thinking modes; if you find their limits (in accuracy or usage caps) hindering your mission-critical tasks, then consider upgrading to Pro mode. GPT-5 Pro is predominantly aimed at businesses, research labs, and power users who truly need that unparalleled performance and can justify the expense. Everyone else will find GPT-5’s default modes already a significant upgrade that addresses both casual and moderately complex needs effectively.

11. Final Thoughts: Getting the Most Out of ChatGPT 5’s Modes

ChatGPT 5’s new modes – Auto, Fast, Thinking, and Pro – give you a flexible toolkit to get the exact type of answer you need, when you need it. For most people, letting Auto mode handle things is easiest, ensuring you get fast responses for simple questions and deeper analysis for tough ones without manual effort. The system is designed to optimize speed and intelligence automatically.

However, it’s great that you have the freedom to choose: if you ever feel a response needs to be more immediate or more thorough, you can toggle to the corresponding mode. Keep an eye on how each mode performs for your use case:

- Use Fast mode for quick, on-the-fly Q&A and save precious time.

- Invoke Thinking mode for those problems where you’d rather wait a few extra seconds and be confident in the answer’s accuracy and detail.

- Reserve Pro mode for the rare instances where only the best will do (and if your resources allow for it).

Remember, all GPT-5 modes leverage the same underlying advancements that make this model more capable than its predecessors: improved factual accuracy, better following of instructions, and more context capacity. Whether you’re a curious individual user or a business deploying AI at scale, understanding these modes will help you harness GPT-5 effectively while managing speed, quality, and cost according to your needs. Happy chatting with GPT-5!

12. Want More Than Chat Modes? Discover Bespoke AI Services from TTMS

ChatGPT is powerful, but sometimes you need more than a mode toggle – you need custom AI solutions built for your business. That’s where TTMS comes in. We offer tailored services that go beyond what any off-the-shelf mode can do:

- AI Solutions for Business – end-to-end AI integration to automate workflows and unlock operational efficiency. (See https://ttms.com/ai-solutions-for-business/)

- Anti-Money Laundering Software Solutions – AI-powered AML systems that help meet regulatory compliance with precision and speed. (See https://ttms.com/anti-money-laundry-software-solutions/)

- AI4Legal – legal-tech tools using AI to support contract drafting, review, and risk analysis. (See https://ttms.com/ai4legal/)

- AI Document Analysis Tool – extract, validate, and summarize information from documents automatically and reliably. (See https://ttms.com/ai-document-analysis-tool/)

- AI-E-Learning Authoring Tool – build intelligent training and learning modules that adapt and scale. (See https://ttms.com/ai-e-learning-authoring-tool/)

- AI-Based Knowledge Management System – structure and retrieve organizational knowledge in smarter, faster ways. (See https://ttms.com/ai-based-knowledge-management-system/)

- AI Content Localization Services – localize content across languages and cultures, using AI to maintain nuance and consistency. (See https://ttms.com/ai-content-localization-services/)

If your goals include saving time, reducing costs, and having AI work for you rather than just alongside you, let’s talk. TTMS crafts AI tools not just for “general mode” but for your exact use case – so you get speed when you need speed, and depth when you need rigor.

Does switching between ChatGPT modes change the creativity of answers?

Yes, the choice of mode can influence how creative or structured the output feels. In Fast mode, responses are more direct and efficient, which is useful for brainstorming short lists of ideas or generating quick drafts. Thinking mode, on the other hand, allows ChatGPT to explore more options and refine its reasoning, which often leads to more original or nuanced results in storytelling, marketing, or creative writing. Pro mode takes this even further, producing well-polished, highly detailed content, but it comes with longer wait times and higher costs.

Which ChatGPT mode is most reliable for coding?

For simple coding tasks such as generating small functions, fixing syntax errors, or writing snippets, Fast mode usually performs well and delivers answers quickly. However, when working on complex projects that involve debugging large codebases, designing algorithms, or ensuring higher reliability, Thinking mode is a better choice. Pro mode is best reserved for scenarios where absolute precision matters, such as enterprise-level software or mission-critical applications. In short: use Fast for convenience, Thinking for accuracy, and Pro only when failure isn’t an option.

Do ChatGPT modes affect memory or context length?

The modes themselves don’t directly change the memory of your conversation or the context size. All GPT-5 modes share the same underlying architecture, but the subscription tier determines the maximum context length available. For example, Pro plans unlock significantly larger context windows, which makes it possible to analyze or generate content across hundreds of pages of text. So while Fast, Thinking, and Pro modes behave differently in terms of reasoning depth, the real impact on memory and context length comes from the plan you are using rather than the mode itself.

Can free users access all ChatGPT modes?

No, free users have very limited access. Typically, the free tier offers only the default Auto/Fast experience, with an occasional option to try Thinking mode under strict daily limits. Access to Pro mode is reserved for subscribers on the top paid tiers. Plus subscribers can use Auto and Thinking regularly, but only Business or Pro users have unrestricted access to the full range of modes. These limits reflect the high computational costs of Thinking and Pro modes.

Is there a risk in always using Pro mode?

The main “risk” of using Pro mode is not about accuracy, but about practicality. Pro mode delivers the most thorough and precise results, but it is also the slowest and the most expensive option. If you rely on it for every single question, you may find that you’re spending more time and resources than necessary for simple tasks that Fast or Thinking could easily handle. For most users, Pro should be reserved for the toughest or most critical challenges. Otherwise, it’s more efficient to let Auto mode decide or to use Fast for everyday queries.

Does ChatGPT switch modes automatically, or do I need to do it manually?

ChatGPT 5 offers both options. In Auto mode, the system decides automatically whether a quick response is enough or if it should engage in deeper reasoning. That means you don’t need to worry about switching manually – the AI adjusts to the complexity of your query on its own. However, if you prefer full control, you can always manually select Fast, Thinking, or Pro in the model picker. In practice, Auto is recommended for everyday use, while manual switching makes sense if you explicitly want either maximum speed or maximum accuracy.