Probably everyone today is aware of the need to analyze the data in their organization. Beyond that, we can now also analyze data from outside our own ecosystem: publicly available or purchased demographic data, data about our position relative to the competition, content from broadly understood social media, or various data streams analyzed in real time (or, more often, near real time). The question today, therefore, is not “do we have to do this?” but “how do we do it efficiently?”

A lot of time has passed since solutions of this type were reserved for the largest organizations and were usually based on a dedicated data warehouse. Today, advanced analyses can be performed with countless tools of various types, from on-premises (in your own server room) to cloud-based, from open-source to fully commercial. It would seem that with such opportunities organizations would make better use of their data. Looking globally, however, this has not happened: although there are definitely leaders who derive amazing benefits from their analytical solutions, a significant share of companies still struggle in this area.

What are the problems with current analytical solutions?

Below I will focus on the three problems that are, in my opinion, the most important. Of course there are more, but the following ones can cause the most grief:

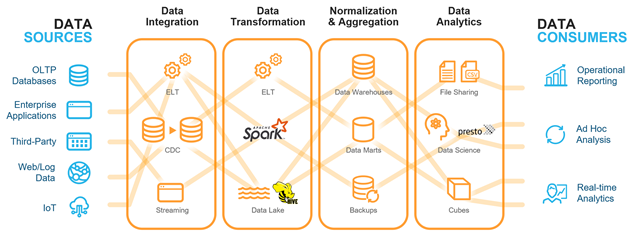

Using many technologies in a large solution makes sense when we have specific needs or care about specific, unique features of a given technology. Behind this narrow specialization of individual elements, however, lies a very serious administrative problem: the more technologies are used, the more difficult it is to harmonize the operation of the entire system. Doing it right also takes more and more specialized knowledge (several operating philosophies plus several programming languages). Ultimately, this means a larger team is needed to operate and look after such a solution. An additional problem is that we create clearly separated areas and cannot easily move developers between them, because each area uses different technologies.

- Data processing in silos

If we allow it, each department or even each team will start building its own solutions as best it can, but in isolation from a holistic view of the business. I would not like to be misunderstood here: I truly admire people who, often with great commitment, try to solve the organization’s problem of poor access to reports (or their complete absence) by creating solutions for their own needs. Along the way, however, they often implement complex business rules specific to their own point of view. Looking from the level of the entire organization, such an approach very quickly ends with a question such as “What is our sales result?” getting many different answers depending on who we ask: Marketing “knows its own”, Sales sees something else, and Logistics has its own version of the truth. Making decisions in such an environment is very difficult, and reconciling the different versions is time-consuming and creates conflicts.
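To make the “many versions of the truth” effect concrete, here is a minimal Python sketch. Everything in it is hypothetical and invented for illustration: the `Order` fields, the three departmental rules, and the sample figures do not come from any real system. It only shows how the same orders yield different “sales results” when each silo encodes its own business rule, and how one shared definition removes the ambiguity.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Order:
    """Hypothetical order record, invented for this illustration."""
    amount: float    # list price of the order
    discount: float  # discount granted on the order
    returned: bool   # True if the customer sent the goods back
    shipped: bool    # True if the goods have left the warehouse


# Made-up sample data shared by every "department" below.
ORDERS = [
    Order(amount=100.0, discount=10.0, returned=False, shipped=True),
    Order(amount=200.0, discount=0.0, returned=True, shipped=True),
    Order(amount=50.0, discount=5.0, returned=False, shipped=False),
]


def marketing_sales(orders):
    # Assumed Marketing rule: every order counts at list price.
    return sum(o.amount for o in orders)


def sales_dept_sales(orders):
    # Assumed Sales rule: discounted value, returned orders excluded.
    return sum(o.amount - o.discount for o in orders if not o.returned)


def logistics_sales(orders):
    # Assumed Logistics rule: discounted value of shipped orders only.
    return sum(o.amount - o.discount for o in orders if o.shipped)


def agreed_net_sales(orders):
    # One shared definition: discounted value of orders that were
    # shipped and not returned. Every report would reuse this function.
    return sum(
        o.amount - o.discount
        for o in orders
        if o.shipped and not o.returned
    )


if __name__ == "__main__":
    print("Marketing:", marketing_sales(ORDERS))
    print("Sales:", sales_dept_sales(ORDERS))
    print("Logistics:", logistics_sales(ORDERS))
    print("Agreed definition:", agreed_net_sales(ORDERS))
```

None of the three departmental functions is wrong in isolation. The problem is that each rule lives in a different silo, so nobody can tell which number a given report shows.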

- Immortal Excel

It is amazing how many companies (including global corporations) base their main reporting, at least in some areas, on good old Excel. For the sake of clarity, I think Excel can perfectly extend the capabilities of a reporting platform, e.g. for ad hoc analysis, but relying 100% on Excel is asking for trouble. The main problems of such solutions are: the tendency to produce a very large number of report files that no one looks at after a short time; problems with automatic data refresh, since most solutions of this type at some stage have to be “pushed” manually, which is time-consuming and unnecessarily involves people; and the difficulty of sharing files while maintaining an appropriate security layer (e.g. the central office sees everything and the regions only see “their” data). For a more complete list of the problems associated with this approach, see “Excel Hell”, a presentation that I am about to publish in the near future.

However, there is hope: these problems, by definition, disappear with the proper implementation of a Business Intelligence platform.

By the way, the spontaneous formation of silos that I wrote about earlier may also appear because the organization has implemented self-service BI badly. A good example is rolling out Power BI without a proper plan for bringing the new tool into the company’s ecosystem. A hyped “transition” to Power BI with no training, no vision, and no cross-departmental collaboration will most likely end with the existing problems simply being raised to a more “modern and fashionable” level, with no particular benefit to the organization. An example from a recent assessment at a client’s site: is it desirable for a company to have over 30,000 (thirty thousand!) published Power BI reports and probably twice as many unpublished ones? How do you find the right, most up-to-date report in such a thicket?

Simplification of the solution while increasing its possibilities

Fortunately, today we have solutions that have been designed from scratch to solve all the above-mentioned problems in the area of analytics.

One such technology is Snowflake. I described the features of Snowflake in an earlier article, so now I would like to focus on how implementing Snowflake allows you to simplify the architecture of the entire solution.

Placing Snowflake as the central data repository significantly simplifies the entire solution. The service can receive data from various sources, it acts as both the Data Lake layer and the data warehouse (EDW, Enterprise Data Warehouse) layer, and thanks to its high performance and dynamic scaling we can do without an additional intermediate layer (e.g. dedicated analytical services) for serving data to analytical tools. All of this lets us drop additional services classically used in this type of solution. And that is not all: Snowflake can be fully operated from SQL, which is a very important and not always highlighted advantage. SQL is widely known and used by developers, analysts, statisticians, etc., so you can staff your team without fear, in contrast to services built around specific languages that only a handful of people know.

It is also very important to look to the future: if we plan to launch Data Science, will Snowflake be right for us? Until recently the answer was not so obvious, but now that Python code can be executed directly on Snowflake, we can efficiently process huge volumes of data (as is usually the case in advanced analytics) without having to “drag” the data to yet another service or, in an unfortunately very popular but incorrect approach, to our own workstation. With Snowflake, the data remains safe within our platform, and the results of experiments are obtained faster and in a more organized way.

Secure data sharing is also important, because it lets us further simplify data exchange with our partners. This is a trend that will develop strongly, and this kind of democratization of access to data will allow for even better analysis and a fuller understanding of the processes taking place not only in our company but also in its broadly understood environment.

Summary

I could write a while longer about the great risks of an “over-engineered architecture”: the departure of an employee who knew the unique technical aspects of the solution, incorrect optimization of a sophisticated service, or difficulties in administering and harmonizing several different and not necessarily well-cooperating elements become the curse of such solutions. Of course, there are “players” who, by investing huge budgets, can squeeze the maximum benefit out of such architectures, but realistically the risk that we will not succeed with this approach is simply large. Without maintaining a large and “redundant” team, we cannot sleep well.

At this point, I hope it is clear how choosing a technology that is universal and at the same time has unique features, such as Snowflake, can significantly increase our capabilities and greatly improve our security in the area of analytics. We can also expect considerable savings if we honestly count the time and resources spent on maintaining and developing a complex architecture. That is why “make it simple” is the approach I recommend even to the biggest clients, because nothing pleases the business as much as quickly materializing results and a highly efficient solution. On the other hand, nothing “saddens” the business as much as postponing a phase deadline purely because of technical problems, “because we still have to configure something” or “our components of the solution do not work together as we expected”. I assure you that no one, be it a developer or a client, wants to be in this situation. So I encourage you once again: “make it simple”!

Norbert Kulski – Transition Technologies MS